Tag: Llama.cpp

-

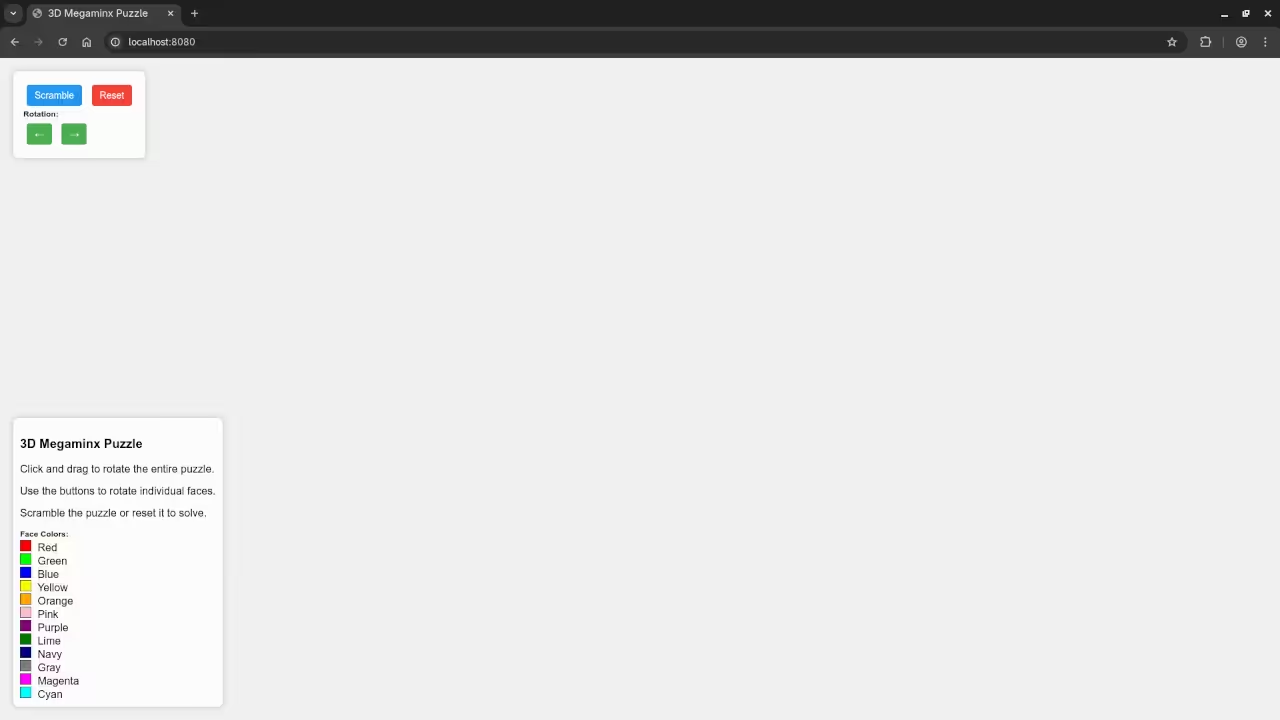

Generating a Megaminx with Qwen3 and HTML5

Introduction to Megaminx Generation: Creating a Megaminx with HTML5 code is fun.

-

Understanding Local AI Architecture: GGUF and Quantization

Introduction: Local AI development is becoming increasingly popular among Linux users.

-

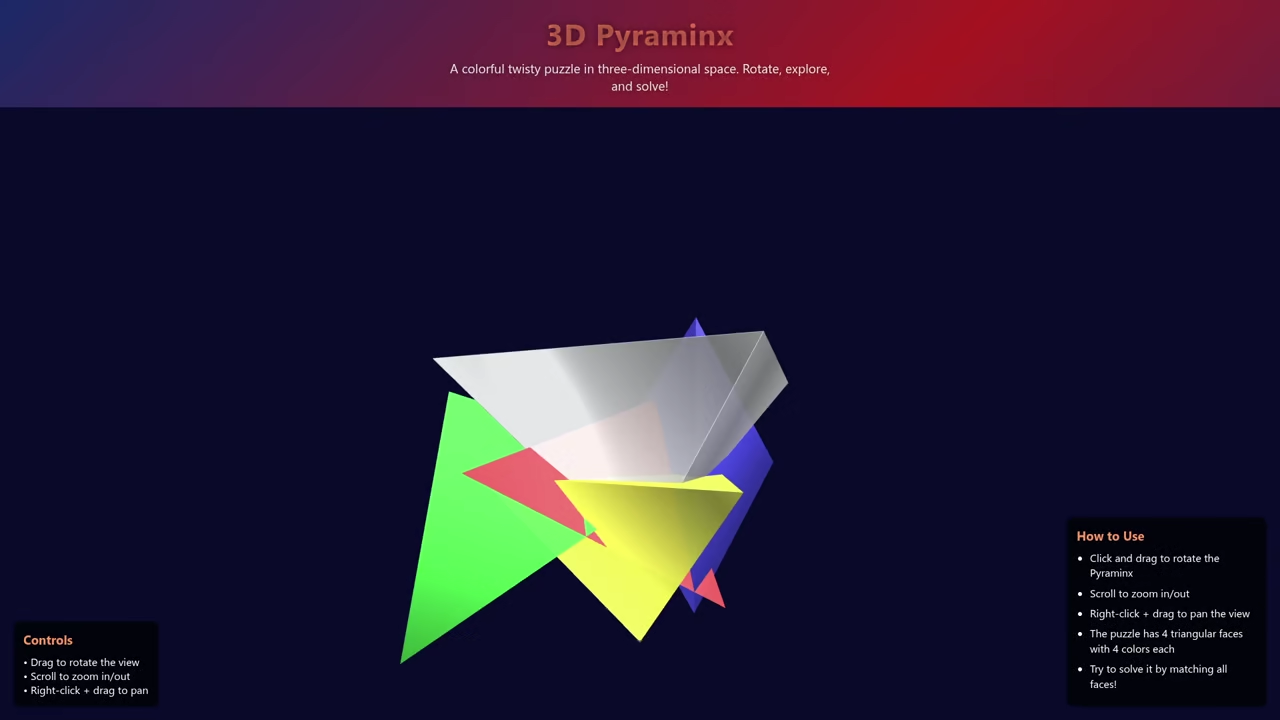

Coding the Pyraminx with Local AI on Fedora

Introduction: This tutorial builds a Pyraminx puzzle on Fedora 43.

-

AI vs. Bottlenecks: Modernizing an HTML5 Rubik’s Cube for Performance

Enhancing Your HTML5 Rubik’s Cube: Modern Tweaks for Better Performance and Mobile Experience. In a previous article, “HTML5 WebGL: Building an Interactive 3D Puzzle”, we explored how to build an interactive 3D puzzle using HTML5 and WebGL with the help of Qwen3-Coder-30B-A3B-Instruct-UD-Q4_K_XL.gguf running on llama.cpp.

-

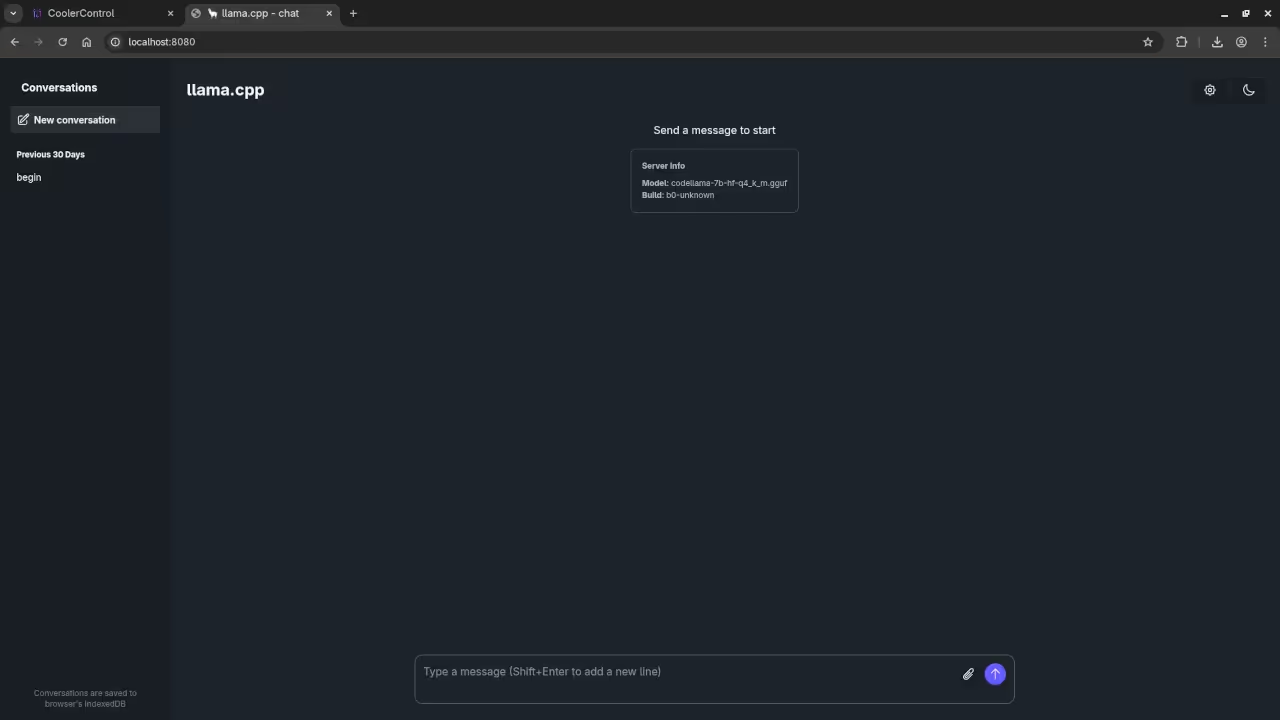

Review Generative AI codellama-7b-hf-q4_k_m.gguf Model

Steps to Configure the Llama.cpp WebUI with Codellama 7B on Fedora 43. In this tutorial, we will go through the steps to configure the Llama.cpp WebUI with Codellama 7B running on a Linux system with an AMD Instinct MI60 32GB HBM2 GPU.

-

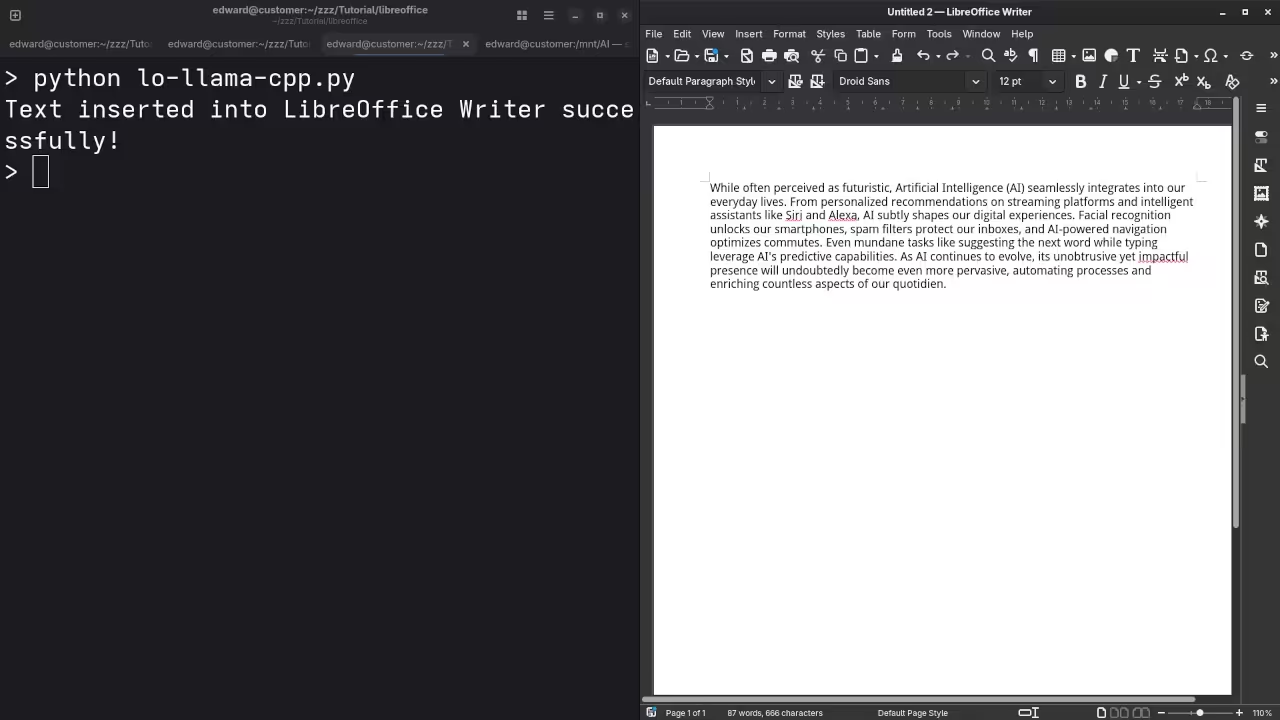

LibreOffice Writer Llama.cpp Integration Using AMD Instinct MI60 GPU

Getting Started with LibreOffice + Llama.cpp Integration on Linux with an AMD Instinct MI60 GPU. If you are a Linux user looking to add AI capabilities to LibreOffice, this guide shows you how to integrate Llama.cpp into LibreOffice Writer using your AMD Instinct MI60 32GB HBM2 GPU.

-

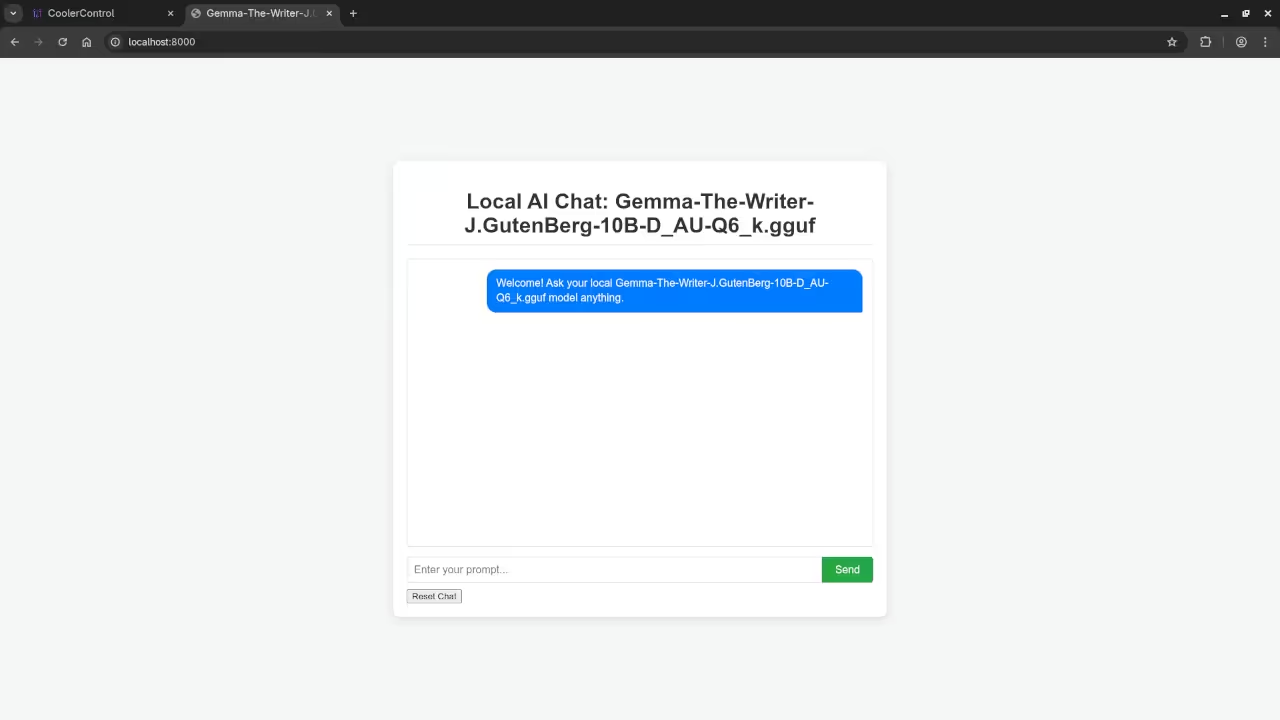

Review Generative AI Gemma-The-Writer-J.GutenBerg-10B-D_AU-Q6_k Model

Setting Up Gemma-The-Writer-J.GutenBerg-10B-D_AU-Q6_k LLM on Your Custom Web UI. In today’s post, we’ll dive into how to set up the Gemma-The-Writer-J.GutenBerg-10B-D_AU-Q6_k LLM (Large Language Model) on a custom web user interface (UI) using the Llama.cpp backend.
