Training GPT-Neo 125M in a Podman Compose Container Using Open Source Tools and Data

If you’re looking to get started with large language models (LLMs) like GPT-Neo 125M, you’re in the right place. In this tutorial, we’ll explore how to train the open source GPT-Neo 125M model from Hugging Face inside a containerized Podman Compose environment.

This setup is perfect for developers who prefer open source tools and want a simple, reproducible training workflow — even on a modest machine.

What Is GPT-Neo 125M?

GPT-Neo 125M is an open source transformer-based language model created by EleutherAI. It’s a great starting point for fine-tuning with small datasets or learning the basics of language model training.

Why Use Podman Compose?

Podman Compose offers a container-based environment similar to Docker Compose — but with a focus on being daemonless and rootless. It’s ideal for developers working on Linux who want an open source alternative to Docker.

With Podman Compose, you can:

- Reproducibly build and run training environments

- Mount volumes for data, model caching, and output

- Avoid polluting the host system

Installing GPT-Neo 125M in Podman Compose

1. Project Structure

gpt-neo-podman-compose/

│—— data/ # Your custom dataset (.jsonl)

│—— output/ # Model outputs (saved model)

│—— cache/ # Model & pip cache

│—— Dockerfile

│—— podman-compose.yml

│—— requirements.txt

│—— train.py

2. Training Script train.py

from datasets import load_dataset

from transformers import AutoTokenizer, AutoModelForCausalLM, Trainer, TrainingArguments

import torch

# Load dataset

def format_example(example):

return {"text": f"### Question: {example['prompt']}\n### Answer: {example['response']}"}

dataset = load_dataset("json", data_files="data/custom_dataset.jsonl")

dataset = dataset.map(format_example)

dataset = dataset["train"].train_test_split(test_size=0.1)

dataset = dataset.remove_columns(["prompt", "response"])

# Tokenizer & Model

model_name = "EleutherAI/gpt-neo-125M" # Smollm 135M is not public, use similar

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForCausalLM.from_pretrained(model_name)

def tokenize(example):

return tokenizer(example["text"], padding="max_length", truncation=True, max_length=512)

tokenized_dataset = dataset.map(tokenize, batched=True)

tokenized_dataset.set_format(type='torch', columns=['input_ids', 'attention_mask'])

# Training

training_args = TrainingArguments(

output_dir="./output",

per_device_train_batch_size=2,

per_device_eval_batch_size=2,

num_train_epochs=3,

logging_steps=10,

evaluation_strategy="epoch",

save_strategy="epoch",

logging_dir="./logs",

save_total_limit=1,

fp16=torch.cuda.is_available(),

)

trainer = Trainer(

model=model,

args=training_args,

train_dataset=tokenized_dataset["train"],

eval_dataset=tokenized_dataset["test"],

tokenizer=tokenizer,

)

trainer.train()

3. Dockerfile

FROM python:3.10

ENV HF_HOME=/cache/huggingface

ENV TRANSFORMERS_CACHE=/cache/huggingface/transformers

ENV HF_DATASETS_CACHE=/cache/huggingface/datasets

ENV PIP_CACHE_DIR=/cache/pip

WORKDIR /app

# Install system packages

RUN apt update && apt install -y git

# Pre-install dependencies to enable caching

COPY requirements.txt .

RUN pip install --cache-dir=$PIP_CACHE_DIR -r requirements.txt

COPY . .

CMD ["python", "train.py"]

4. The requirements.txt File

transformers

datasets

torch

5. Podman Compose YAML

version: "3"

services:

smollm-trainer:

build: .

volumes:

- ./data:/app/data

- ./output:/app/output

- ./cache:/cache # Cache for Hugging Face and pip

command: ["python", "train.py"]

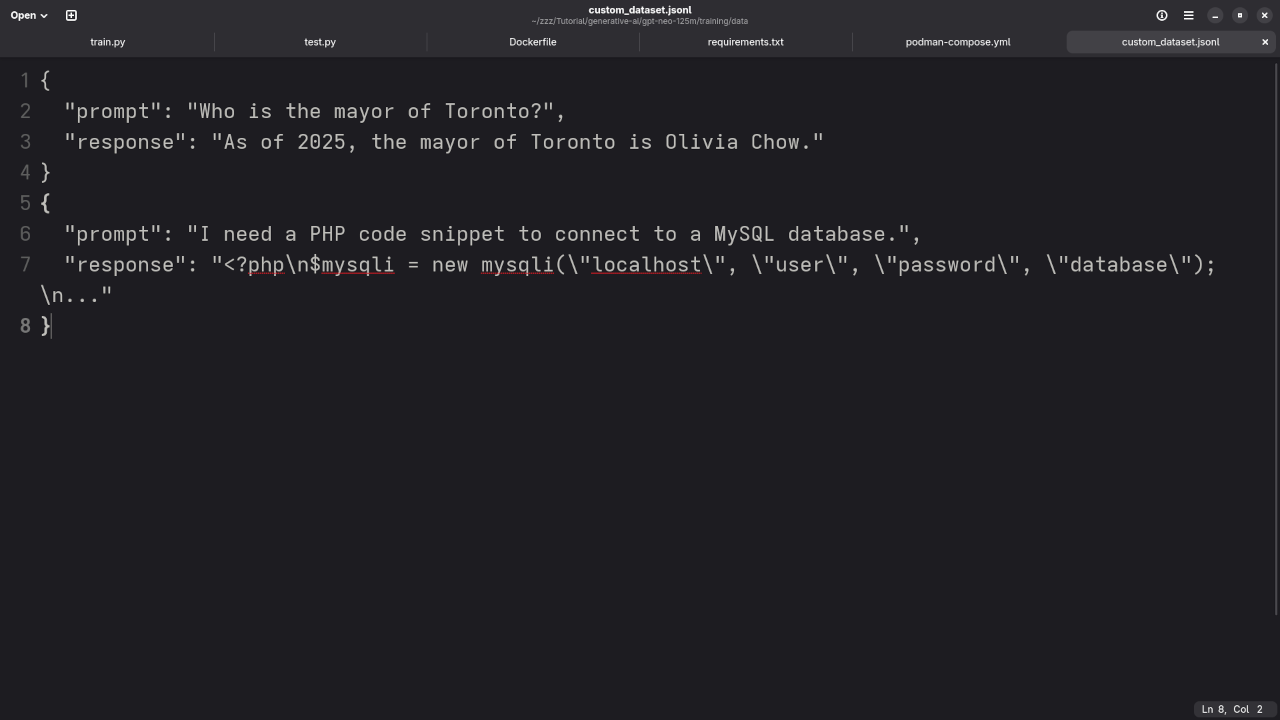

6. Example Open Source Dataset

We’re using a simple JSONL format. Here’s an example from our data/custom_dataset.jsonl file:

[json]

{

“prompt”: “Who is the mayor of Toronto?”,

“response”: “As of 2025, the mayor of Toronto is Olivia Chow.”

}

{

“prompt”: “I need a PHP code snippet to connect to a MySQL database.”,

“response”: “

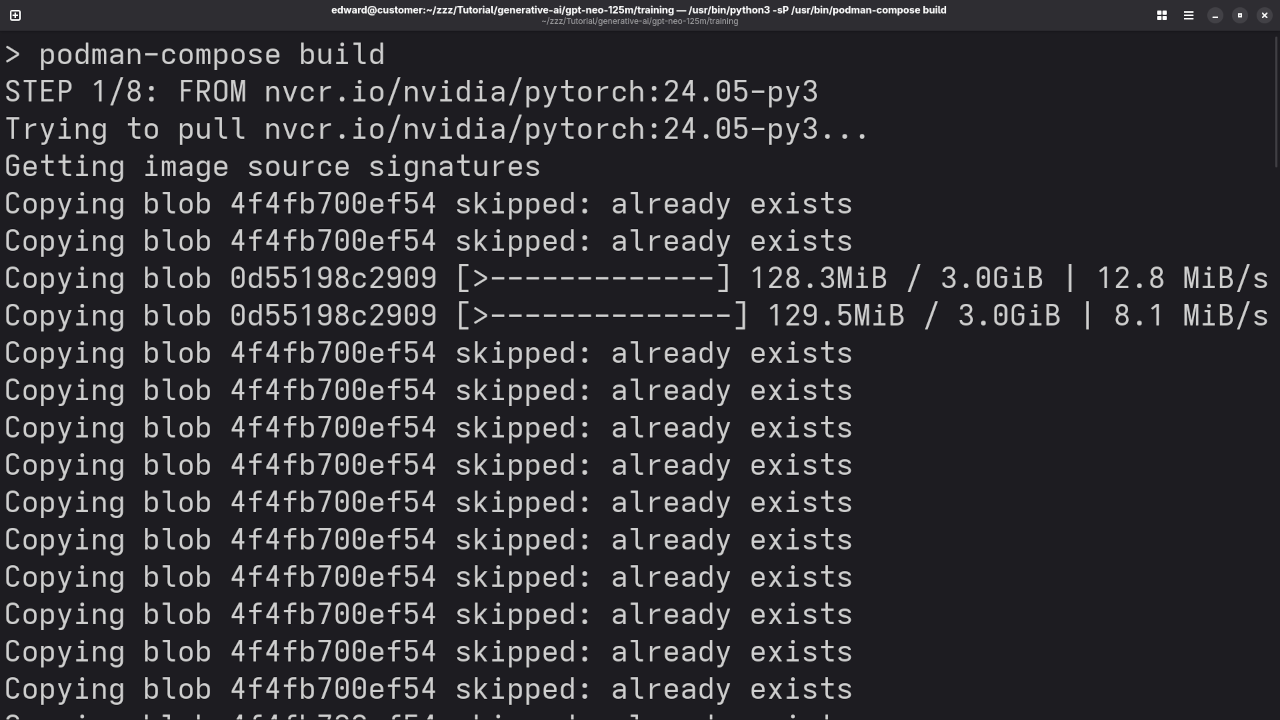

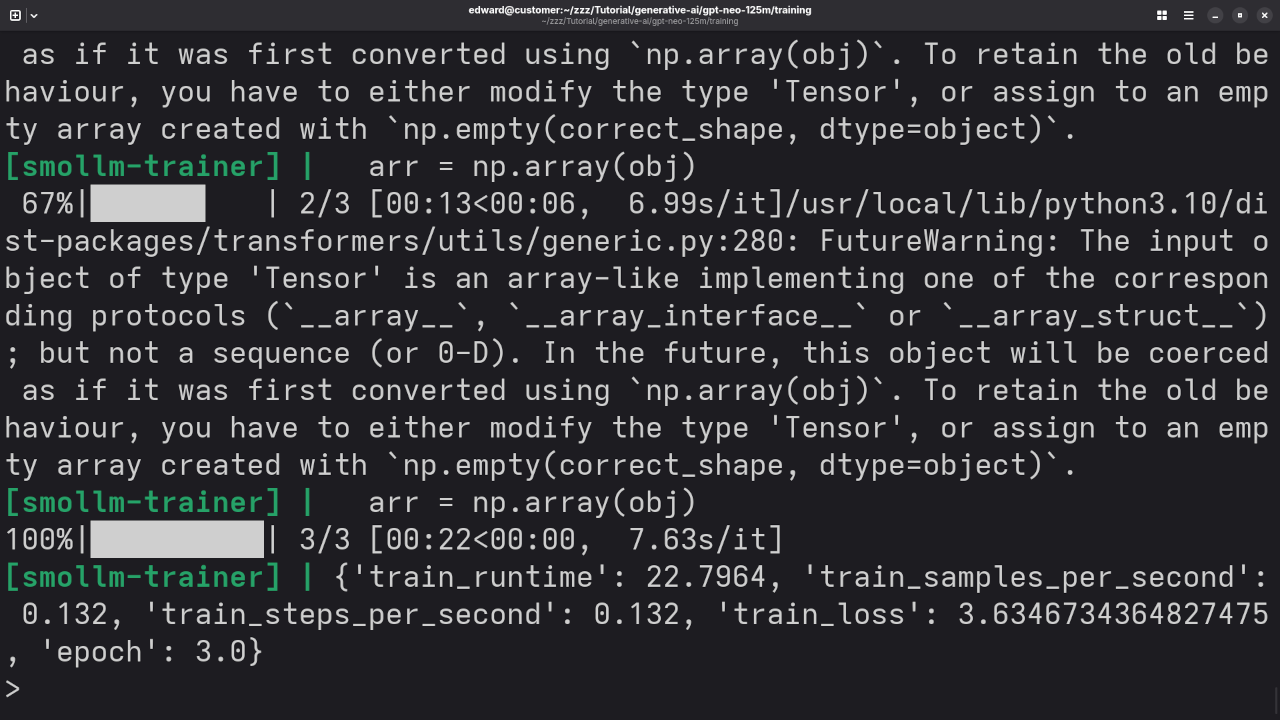

6. Build and Run with Podman Compose

mkdir -p data output cache

podman-compose build

podman-compose up

This downloads the base GPT-Neo model, loads your dataset, and starts training — all inside a secure, isolated container.

7. Inference Example (Optional)

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("./output")

model = AutoModelForCausalLM.from_pretrained("./output")

prompt = "Who is the mayor of Toronto?"

inputs = tokenizer(prompt, return_tensors="pt")

outputs = model.generate(**inputs, max_new_tokens=100)

print(tokenizer.decode(outputs[0], skip_special_tokens=True))

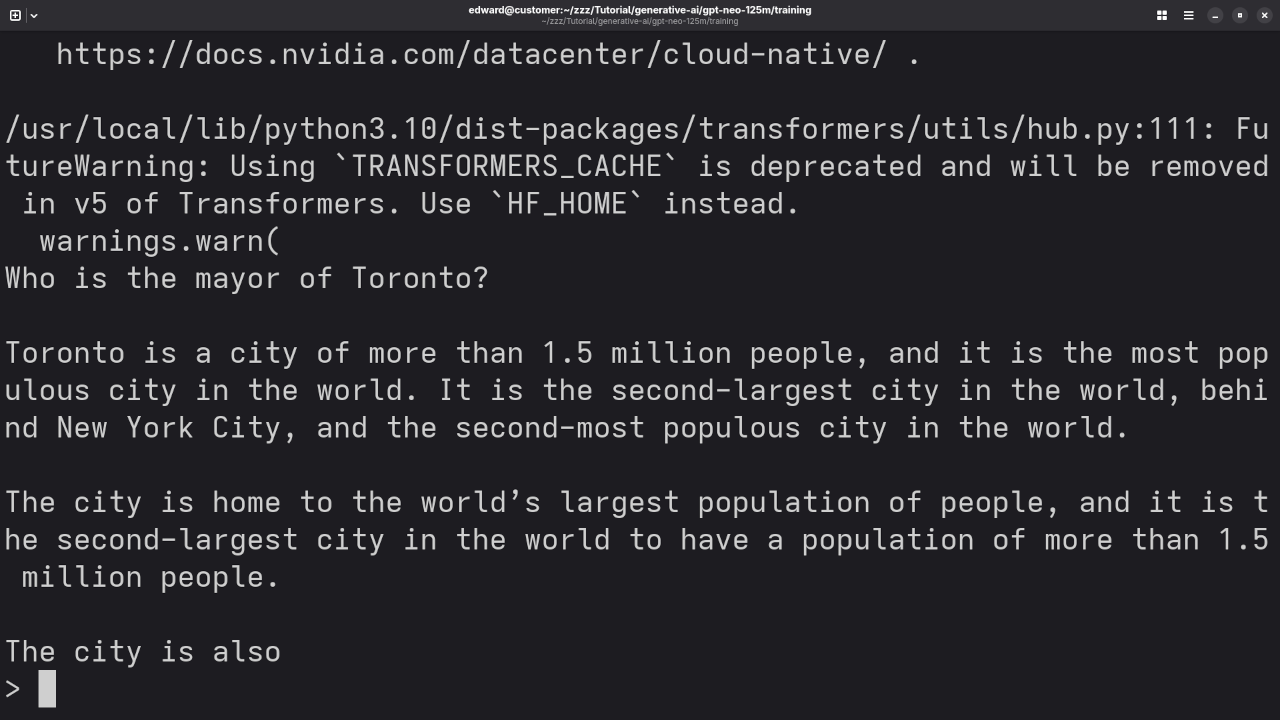

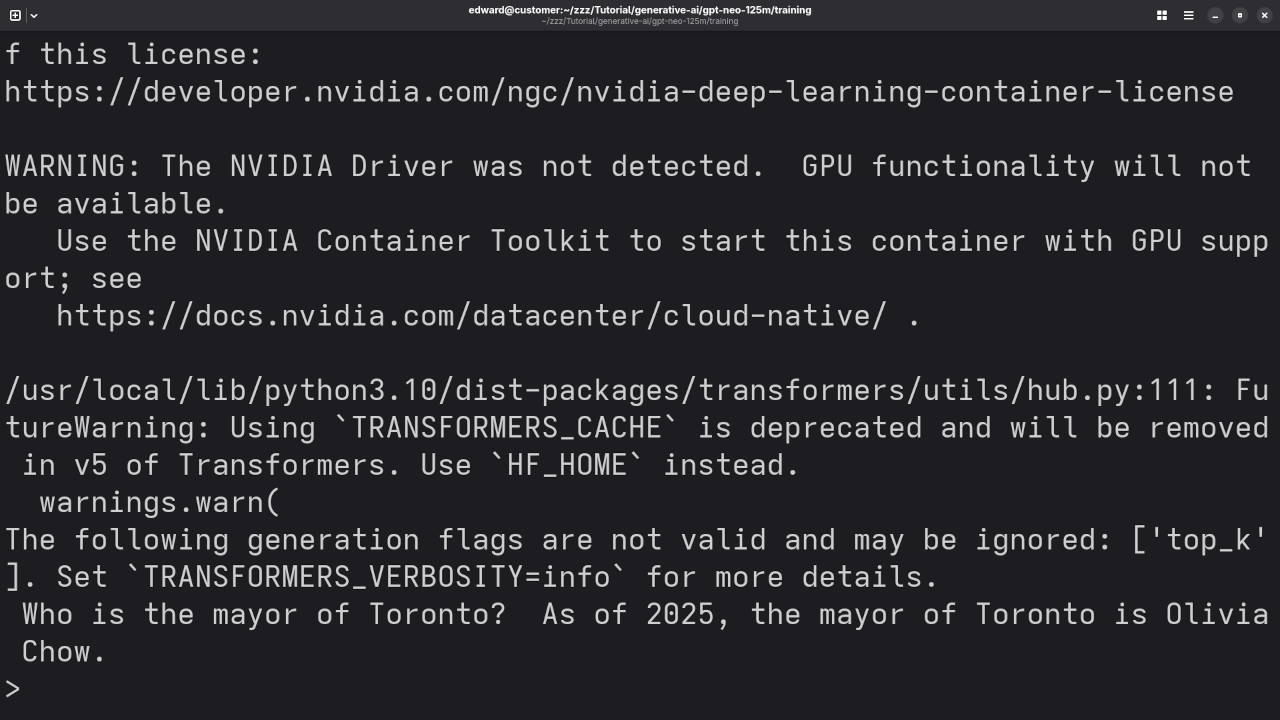

Screenshots & Screencast

Before and After: Results

Output Before Training (default GPT-Neo)

Prompt: Who is the mayor of Toronto?

Response: I’m not sure.

Prompt: I need a PHP code snippet to connect to MySQL.

Response: Incoherent or missing

Output After Fine-Tuning

Prompt: Who is the mayor of Toronto?

Response: As of 2025, the mayor of Toronto is Olivia Chow.

Prompt: I need a PHP code snippet to connect to MySQL.

Response:

<?php

$mysqli = new mysqli("localhost", "user", "password", "database");

if ($mysqli->connect_error) {

die("Connection failed: " . $mysqli->connect_error);

}

echo "Connected successfully";

?>

Additional Resources

If you’re just starting out with Python or want to deepen your understanding, check out my beginner-friendly book and course:

📚 Book:

Learning Python (eBook on Amazon)

🎓 Course:

Learning Python (Ojambo Shop)

Need Help?

I’m available for:

- 💻 One-on-one online Python tutorials: https://ojambo.com/contact

- 🤖 GPT-Neo installation, fine-tuning, or migration: https://ojamboservices.com/contact

Feel free to reach out and let’s bring AI to your next project!

Conclusion

With open source models like GPT-Neo and tools like Podman Compose, training your own AI assistant is more accessible than ever. Whether you’re creating a chatbot, a code assistant, or a personal knowledge base — it all starts with training.

Have questions or want to share your setup? Drop a comment below or get in touch!

🚀 Recommended Resources

Disclosure: Some of the links above are referral links. I may earn a commission if you make a purchase at no extra cost to you.