Tag: Local LLM


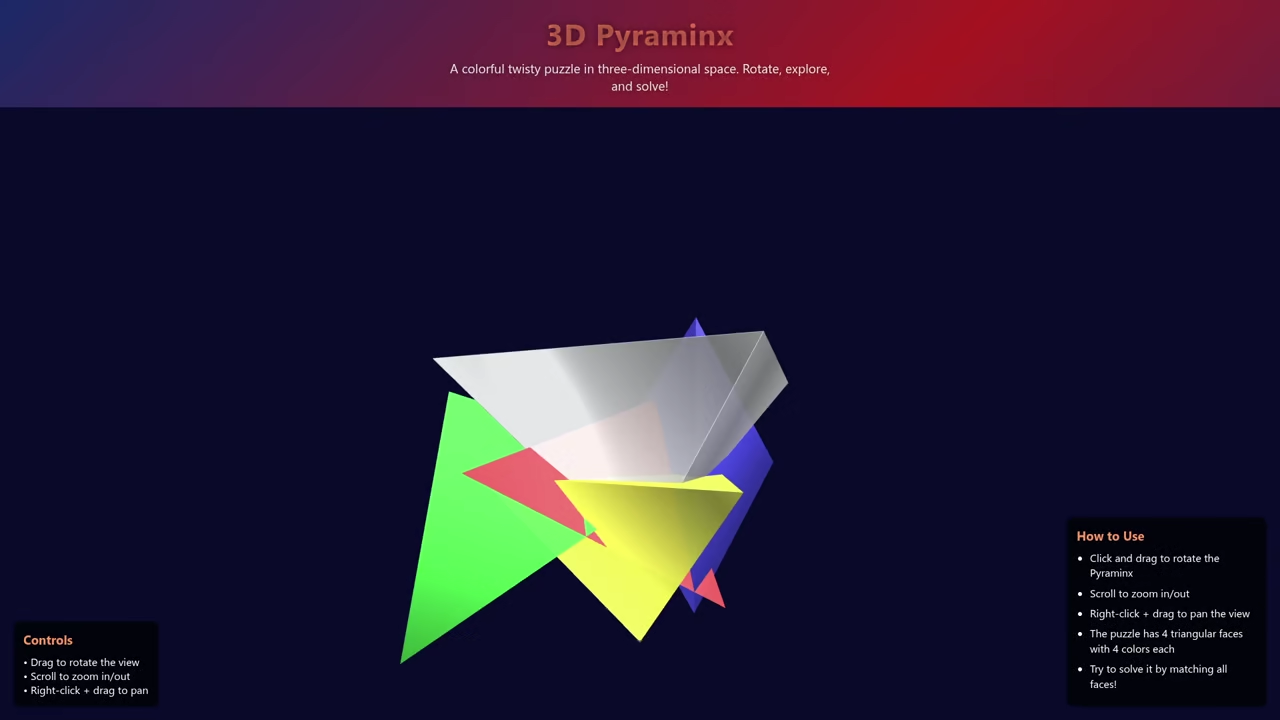

Coding the Pyraminx with Local AI on Fedora

This tutorial builds a Pyraminx puzzle on Fedora 43.


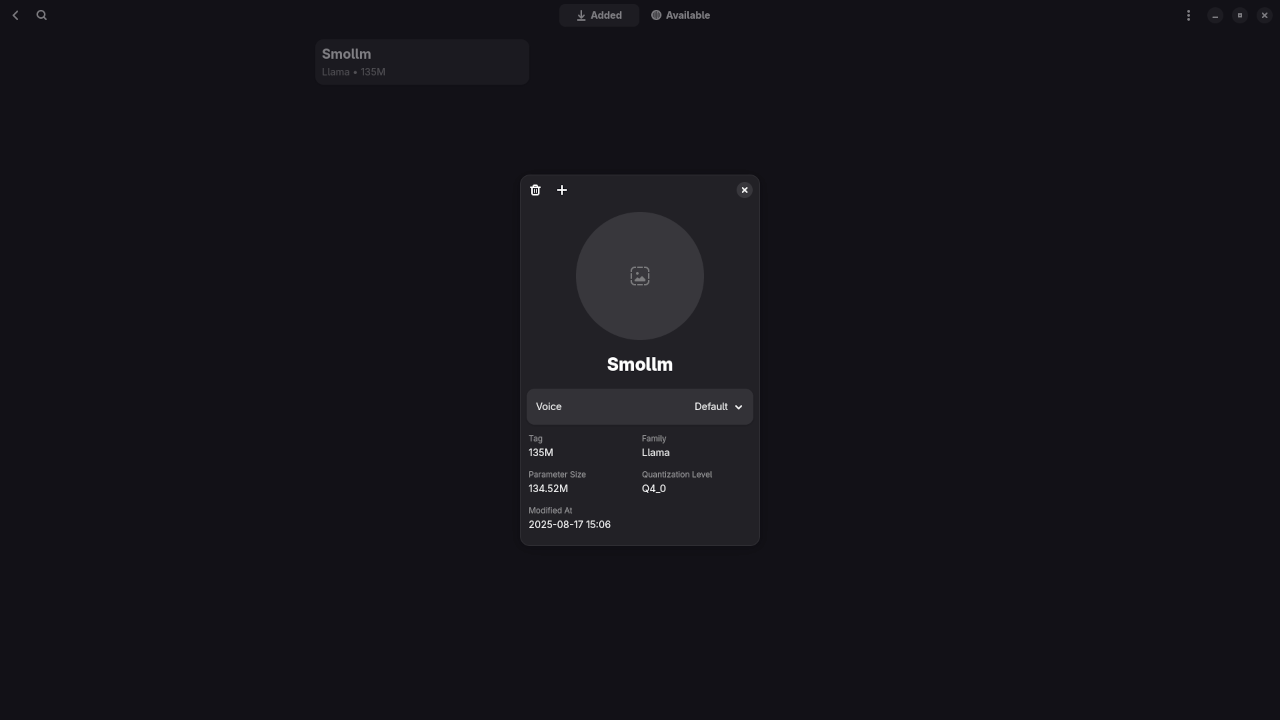

Review Generative AI SmolLM 135M Model

Getting Started with SmolLM 135M LLM on Alpaca Ollama Client (Open Source Guide)

If you're curious about running large language models (LLMs) locally and efficiently, this guide walks you through setting up SmolLM 135M with the Alpaca Ollama client.


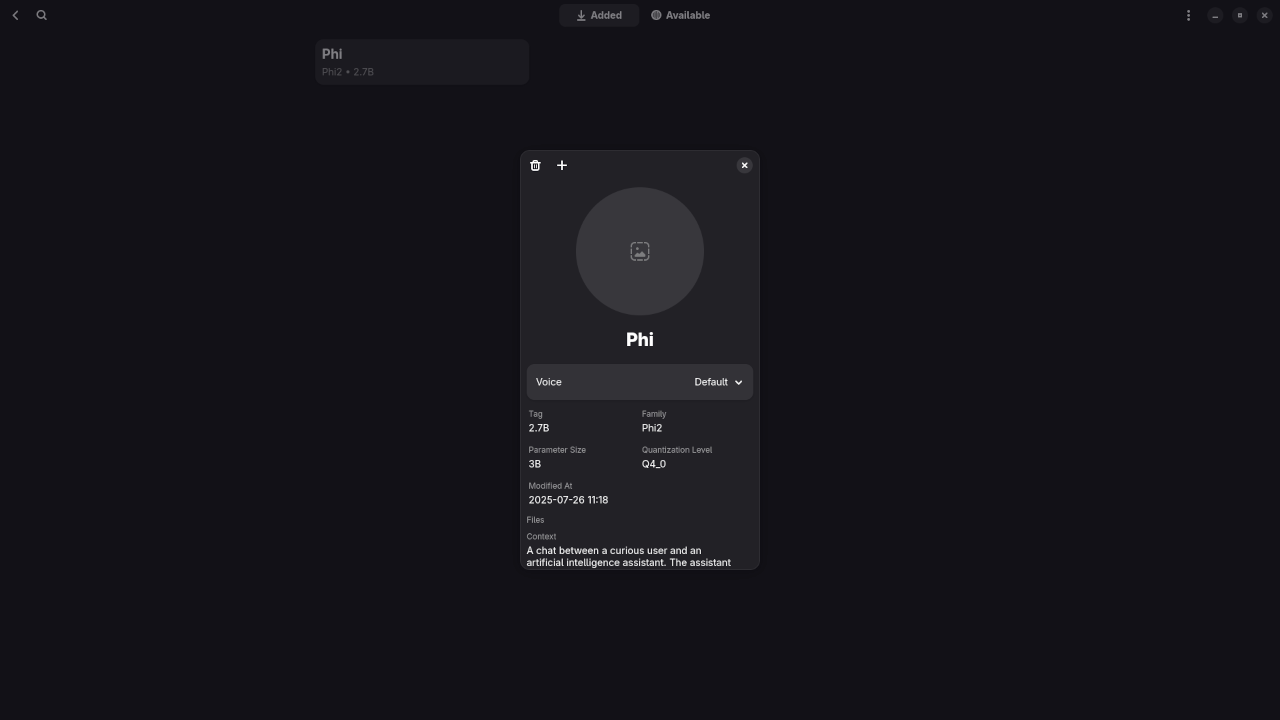

Review Generative AI Phi 2.7B Model

How to Run Phi 2.7B Locally Using Alpaca (Ollama Client)

If you're interested in experimenting with powerful AI models on your own machine, Phi 2.7B is a great starting point.


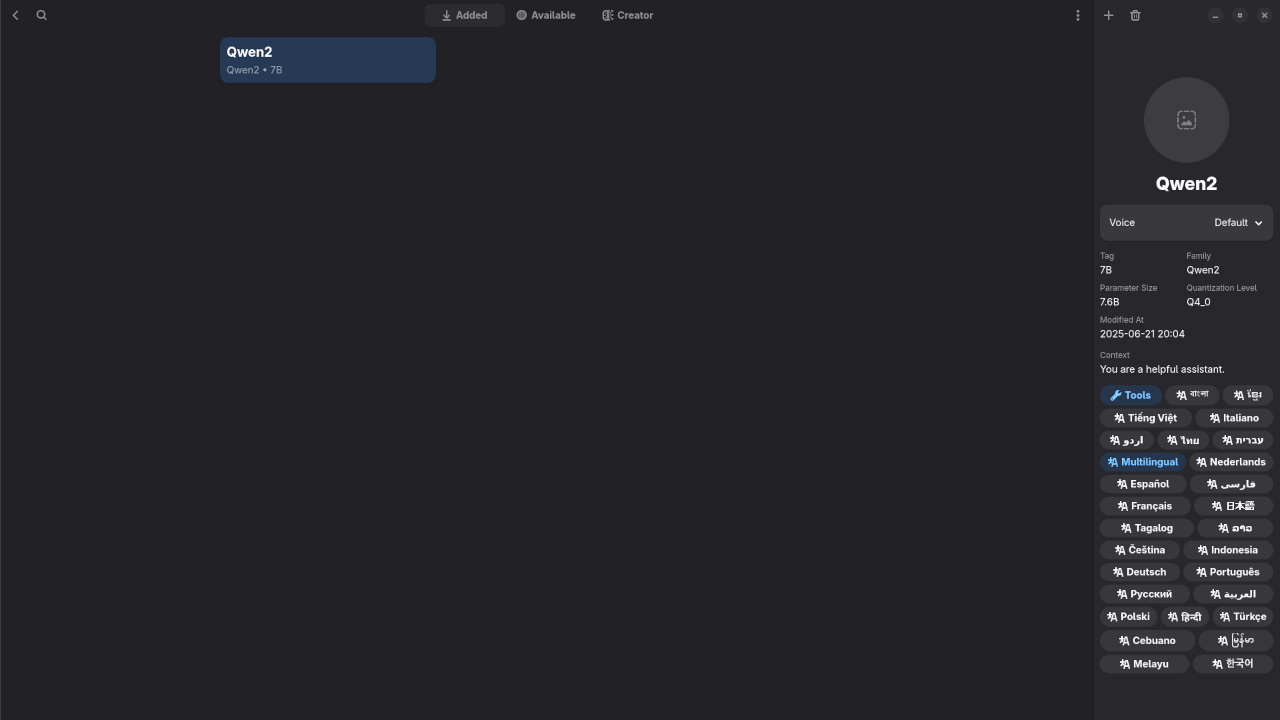

Review Generative AI Qwen2 7B Model

🚀 Exploring Qwen2: A Powerful Open LLM – Installation, Testing with Alpaca + Screencast!
