How to Run Ollama with DeepSeek-R1 32B LLM on Fedora 42 – Open Source AI for Everyone

Introduction

In this post, we will walk through the steps to get Ollama running on your system, with a special focus on using the DeepSeek-R1 32B LLM. This open-source model handles text generation and reasoning tasks well, and it is easy to integrate into your own projects. Whether you are a beginner or a seasoned developer, you will be able to follow along.

This guide includes step-by-step instructions for installing the necessary dependencies on Fedora 42, along with an example Python script that interacts with the Ollama API to run the DeepSeek-R1 32B model.

What is Ollama and DeepSeek-R1 32B?

Ollama is an open-source tool for running and interacting with AI models locally. It is well suited to running Large Language Models (LLMs) like DeepSeek-R1 32B.

- DeepSeek-R1 32B is a 32-billion-parameter language model (the deepseek-r1:32b tag in Ollama is a distilled variant of DeepSeek-R1 built on Qwen2.5) that can be used for tasks like natural language generation, question answering, summarization, and more.

- Open Source License: DeepSeek-R1 is released under the MIT License, which means you can use, modify, and distribute it freely.

Installation Guide for Fedora 42

Step 1: Install Dependencies

To get started, install Ollama and the required dependencies:

# Install Python 3 and pip

sudo dnf install python3 python3-pip

# Install other necessary tools

sudo dnf install git curl

# Install the Ollama Python client library, plus requests for the example script

pip install ollama requests

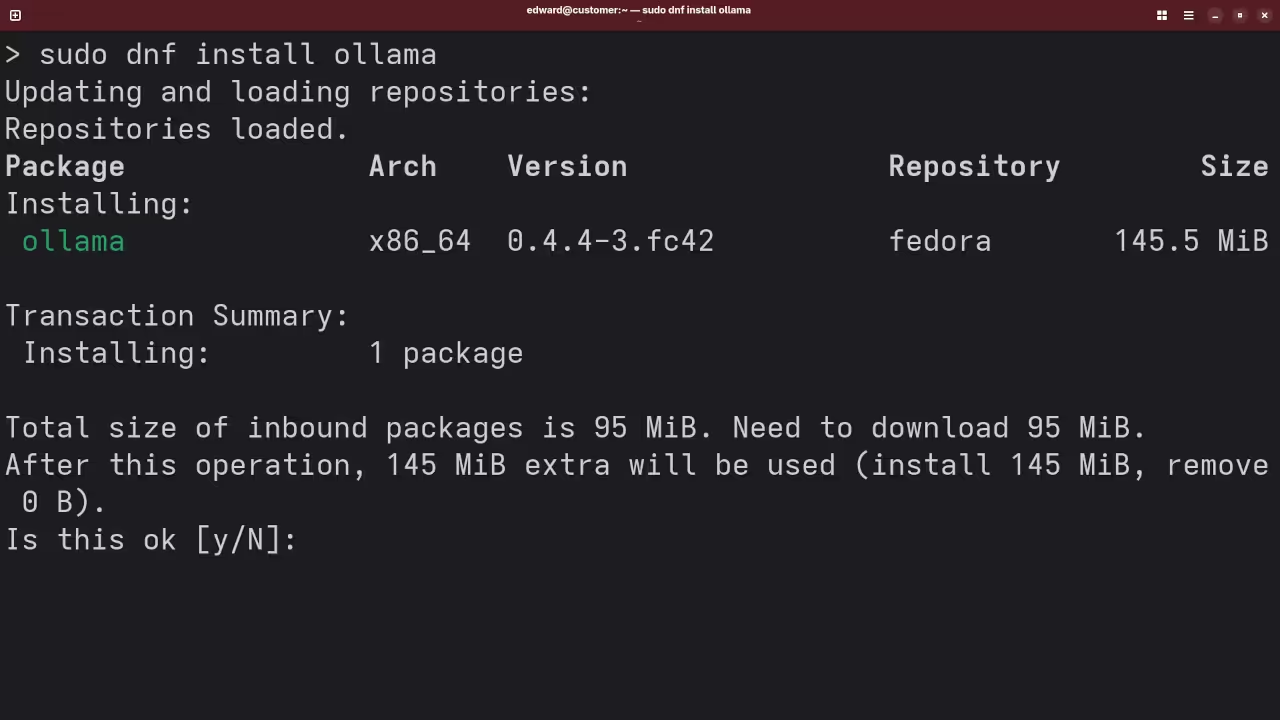

Fedora 42 includes Ollama in the official repositories. You can install it easily using the system package manager.

# 1. Update your system

sudo dnf update -y

# 2. Install Ollama

sudo dnf install -y ollama

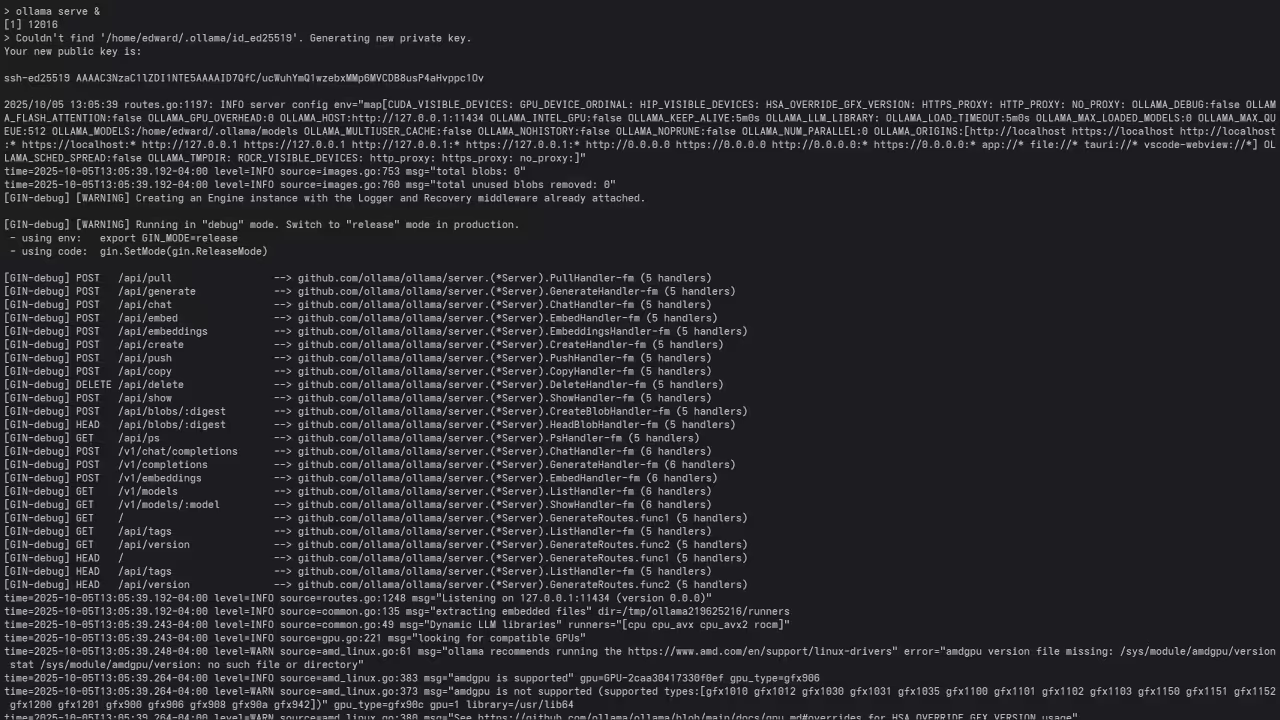

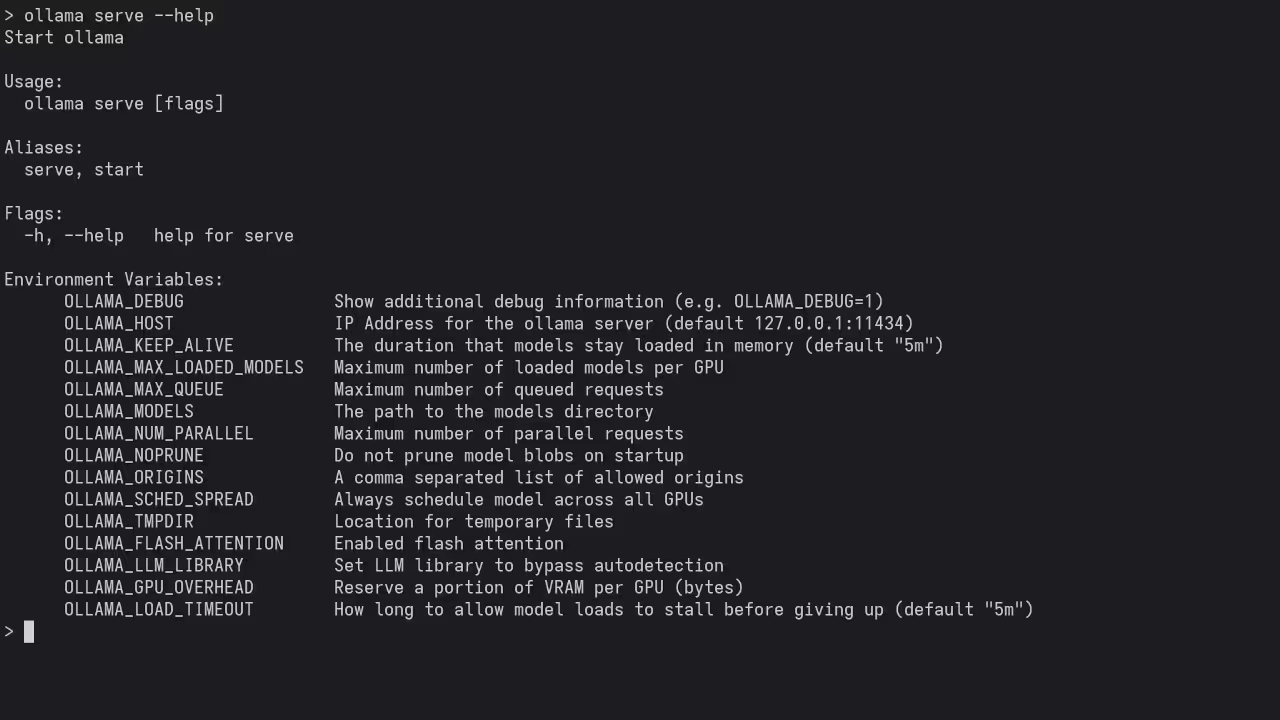

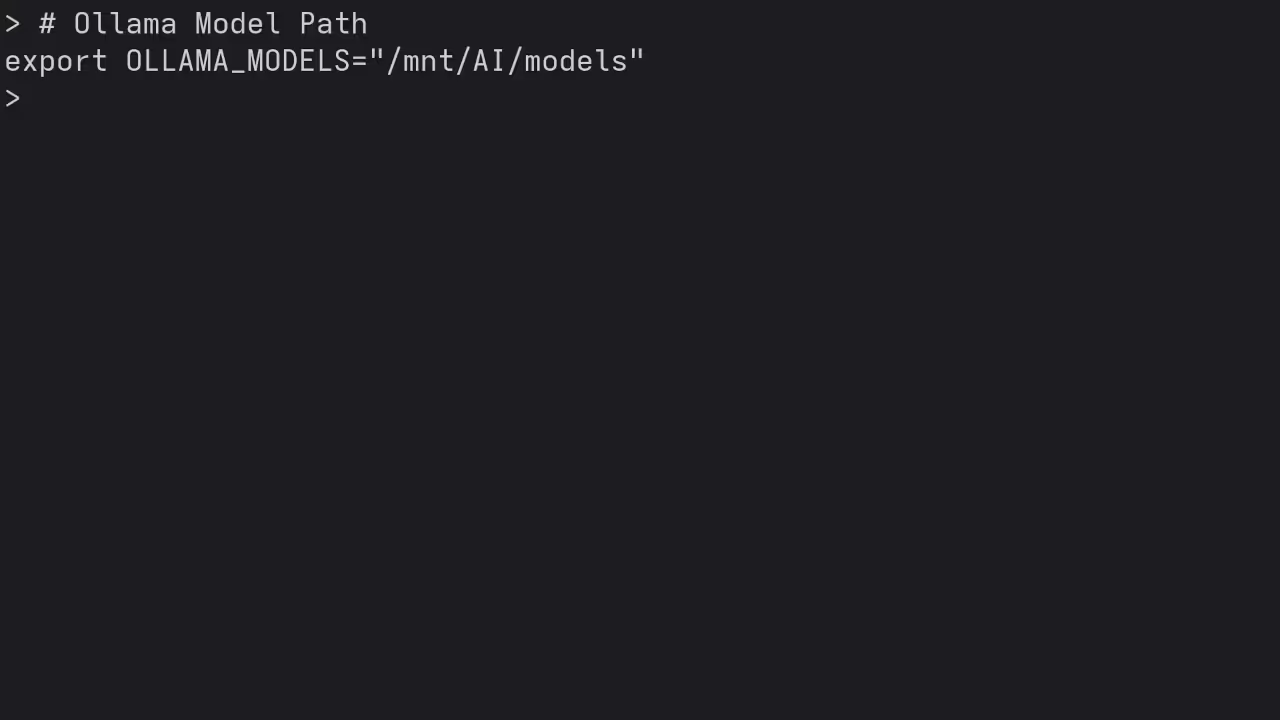

# 3. Start the Ollama service

ollama serve &

Ollama makes it simple to run LLMs locally with minimal setup, providing a CLI and a REST API to interact with models like Meta’s LLaMA.

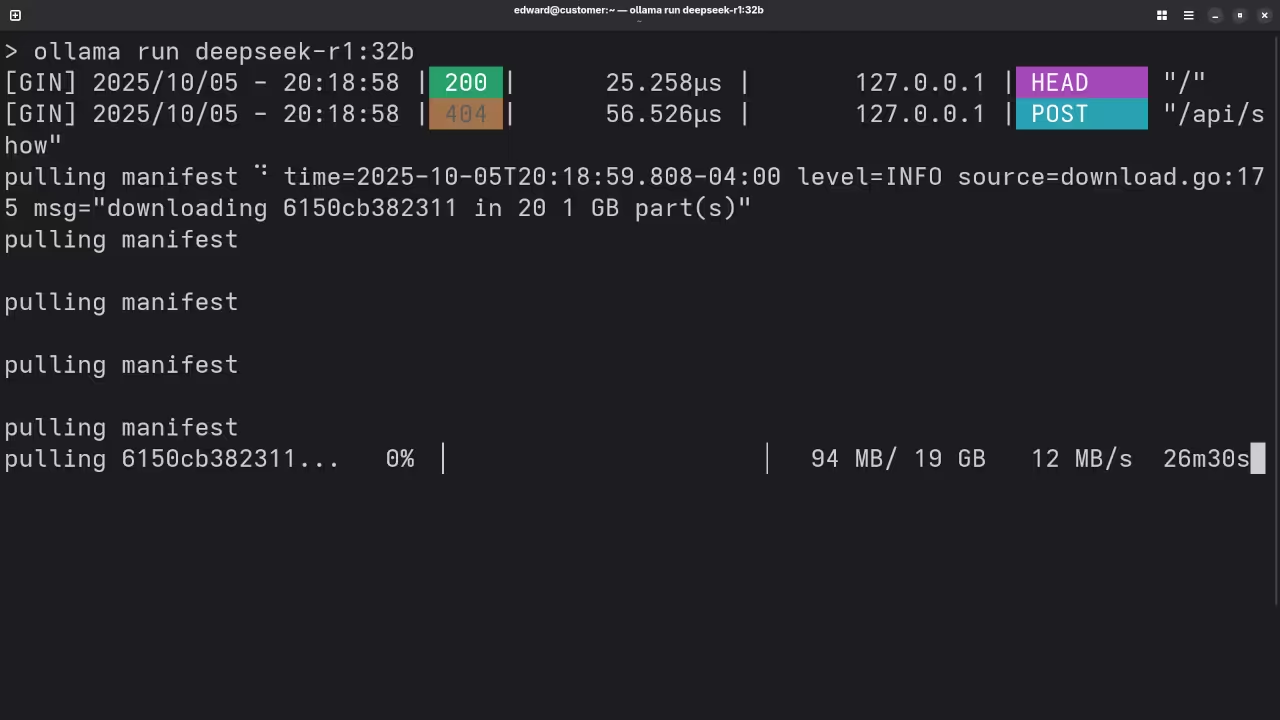
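Before pulling a model, it can help to confirm that the server is actually listening. The sketch below assumes Ollama's default address (http://localhost:11434, the same one used by the script later in this post) and queries the /api/tags endpoint, which lists locally available models. The helper names are made up for illustration, and the standard library is used so nothing extra needs to be installed.

```python
import json
import urllib.error
import urllib.request

# Assumption: Ollama is listening on its default address.
BASE_URL = "http://localhost:11434"


def model_names(tags: dict) -> list[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    return [m["name"] for m in tags.get("models", [])]


def list_local_models(base_url: str = BASE_URL, timeout: float = 5.0):
    """Return local model names, or None if the server cannot be reached."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            return model_names(json.load(resp))
    except (urllib.error.URLError, OSError):
        return None


if __name__ == "__main__":
    names = list_local_models()
    if names is None:
        print("Ollama does not seem to be running on", BASE_URL)
    else:
        print("Ollama is up. Local models:", names or "none yet")
```

An empty list simply means the server is running but no model has been downloaded yet.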

Step 2: Install DeepSeek-R1 32B Model

Download and set up the model locally:

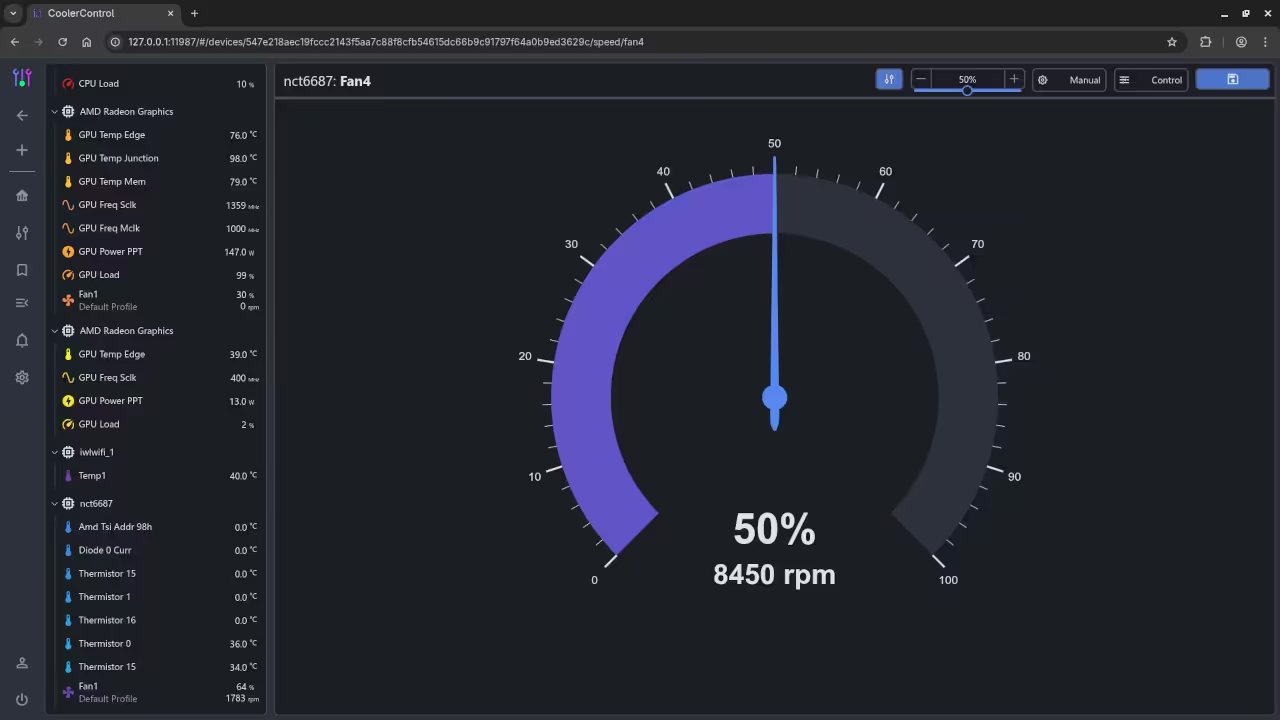

ollama pull deepseek-r1:32b

Step 3: Increase System Swap Space (If Needed)

To prevent memory errors, increase swap space:

# Check current swap space

swapon --show

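# Create a 4 GB swap file (on Btrfs, Fedora's default filesystem, swap files need copy-on-write disabled, so this may require extra steps)

sudo dd if=/dev/zero of=/swapfile bs=1M count=4096

# Restrict access to root; mkswap and swapon warn about insecure permissions otherwise

sudo chmod 600 /swapfile

sudo mkswap /swapfile

sudo swapon /swapfile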

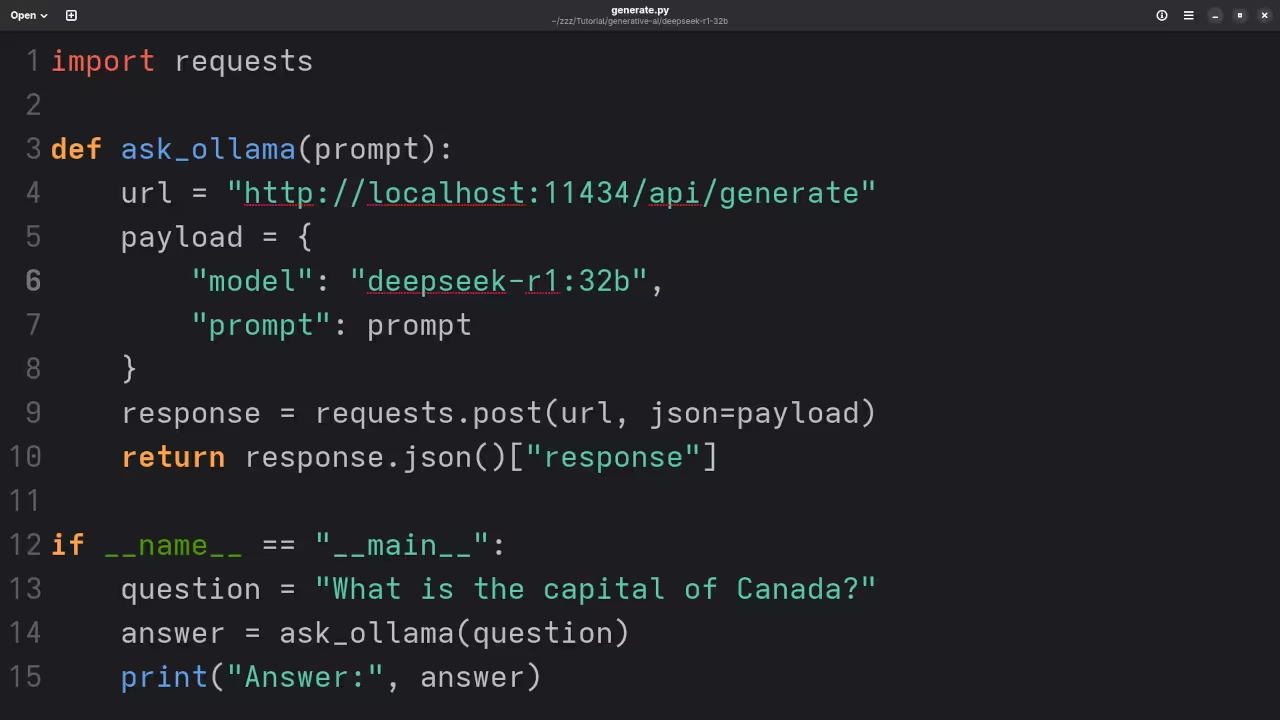
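To judge whether extra swap is needed at all, a rough check is to compare RAM plus swap against the size of the model. The sketch below reads /proc/meminfo for that purpose. The 20 GiB figure is an assumption about the approximate size of the deepseek-r1:32b download rather than a measured requirement, and the function names are hypothetical.

```python
from pathlib import Path

# Assumption: rough size of the model download; adjust to what
# `ollama list` reports on your machine.
ASSUMED_MODEL_GIB = 20.0


def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo content into a {field: value} mapping (values in KiB)."""
    info = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts and parts[0].isdigit():
            info[key.strip()] = int(parts[0])
    return info


def ram_plus_swap_gib(info: dict[str, int]) -> float:
    """Total RAM plus total swap, converted from KiB to GiB."""
    return (info.get("MemTotal", 0) + info.get("SwapTotal", 0)) / (1024 ** 2)


if __name__ == "__main__":
    meminfo = Path("/proc/meminfo")
    if meminfo.exists():
        total = ram_plus_swap_gib(parse_meminfo(meminfo.read_text()))
        print(f"RAM + swap: {total:.1f} GiB")
        if total < ASSUMED_MODEL_GIB:
            print("Less than the assumed model size; consider adding more swap.")
```

Swap only prevents out-of-memory failures. A model that spills heavily into swap will respond much more slowly than one that fits in RAM or GPU memory.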

Using the Ollama API with Python

Below is a sample Python script that sends a prompt to the Ollama API and displays the response:

import requests

def ask_ollama(prompt):

url = "http://localhost:11434/api/generate"

payload = {

"model": "deepseek-r1:32b",

"prompt": prompt

}

response = requests.post(url, json=payload)

try:

return response.json()["response"]

except Exception as e:

print(f"Error: {e}")

return None

if __name__ == "__main__":

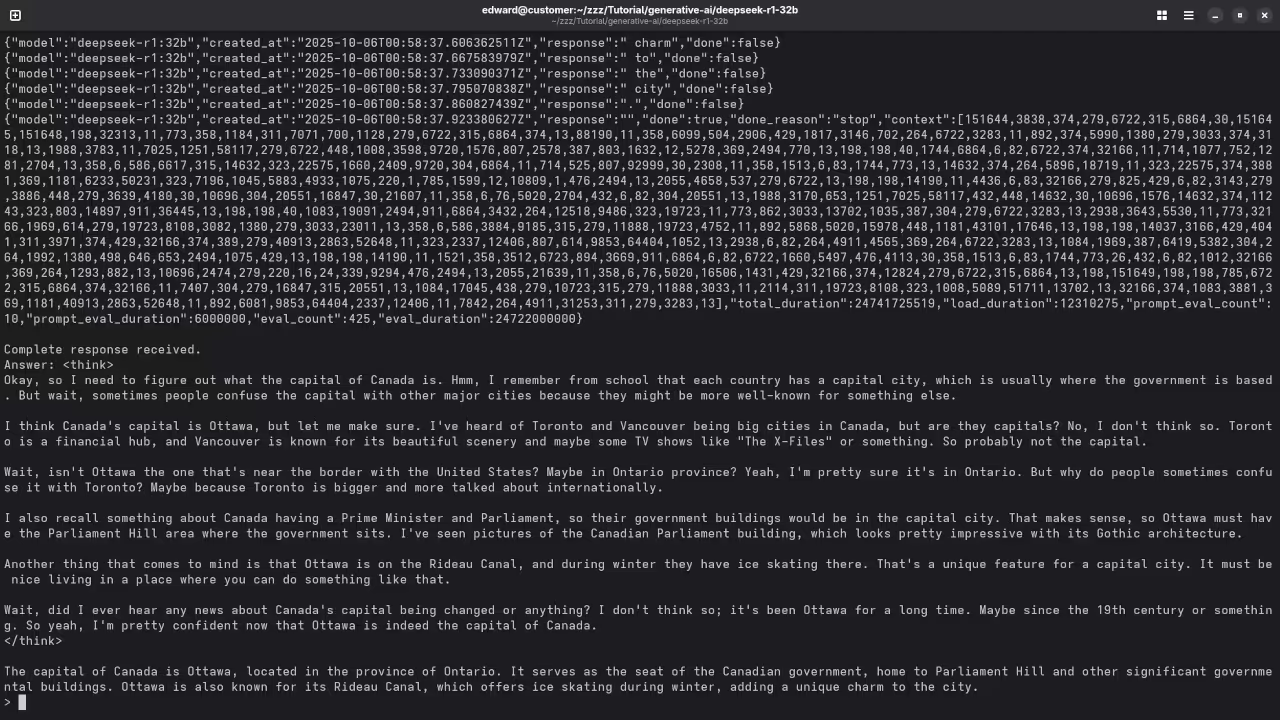

question = "What is the capital of Canada?"

answer = ask_ollama(question)

if answer:

print("Answer:", answer)

else:

print("No answer returned.")

Explanation

This script sends a prompt to the local Ollama server using the DeepSeek-R1 32B model. It handles basic error checking and prints the model’s response.

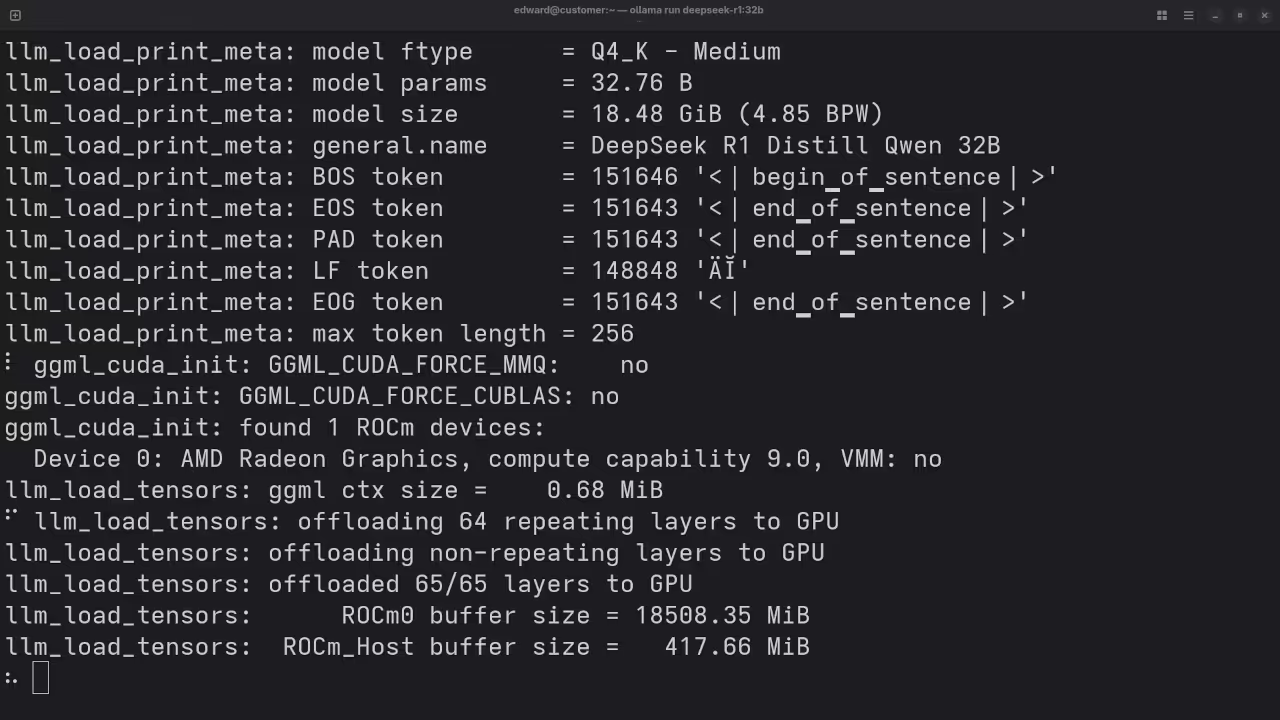

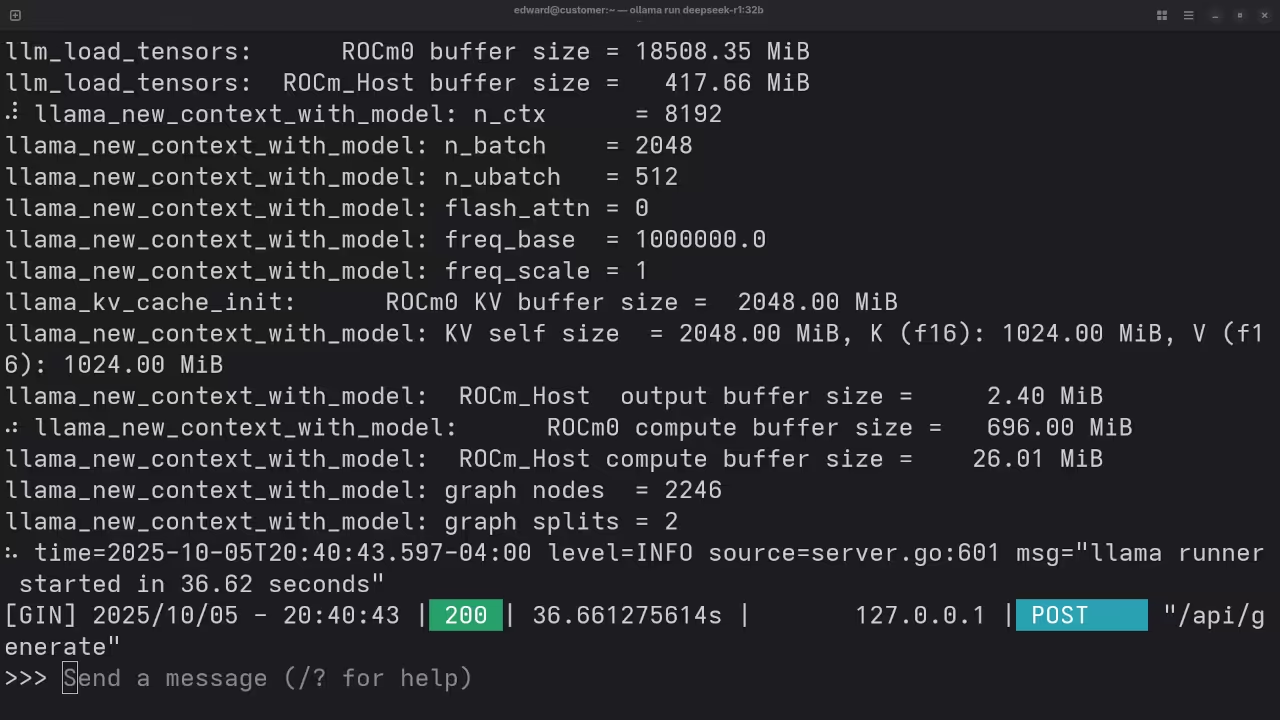
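Unless "stream" is set to false in the request, the /api/generate endpoint replies with a stream of newline-delimited JSON objects, each carrying a fragment of the answer in its "response" field and a "done" flag on the last one. The following is a minimal sketch of how such a stream could be assembled. It uses urllib from the standard library instead of requests, and the function names are illustrative only.

```python
import json
import urllib.request


def join_stream(lines) -> str:
    """Concatenate the "response" fragments from newline-delimited JSON chunks."""
    parts = []
    for raw in lines:
        if isinstance(raw, bytes):
            raw = raw.decode("utf-8")
        raw = raw.strip()
        if not raw:
            continue  # skip blank keep-alive lines
        chunk = json.loads(raw)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break
    return "".join(parts)


def ask_ollama_streaming(prompt: str, model: str = "deepseek-r1:32b",
                         url: str = "http://localhost:11434/api/generate") -> str:
    """POST a prompt and assemble the streamed answer into one string."""
    body = json.dumps({"model": model, "prompt": prompt}).encode("utf-8")
    req = urllib.request.Request(url, data=body,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        # The response object yields one line of bytes per iteration.
        return join_stream(resp)
```

Streaming is mostly useful for long answers, because fragments can be shown as they arrive instead of waiting for the whole reply.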

Screenshots and Screencast

Here is a visual walkthrough of DeepSeek-R1 32B running through Alpaca, a graphical client for Ollama, on a local system:

Results:

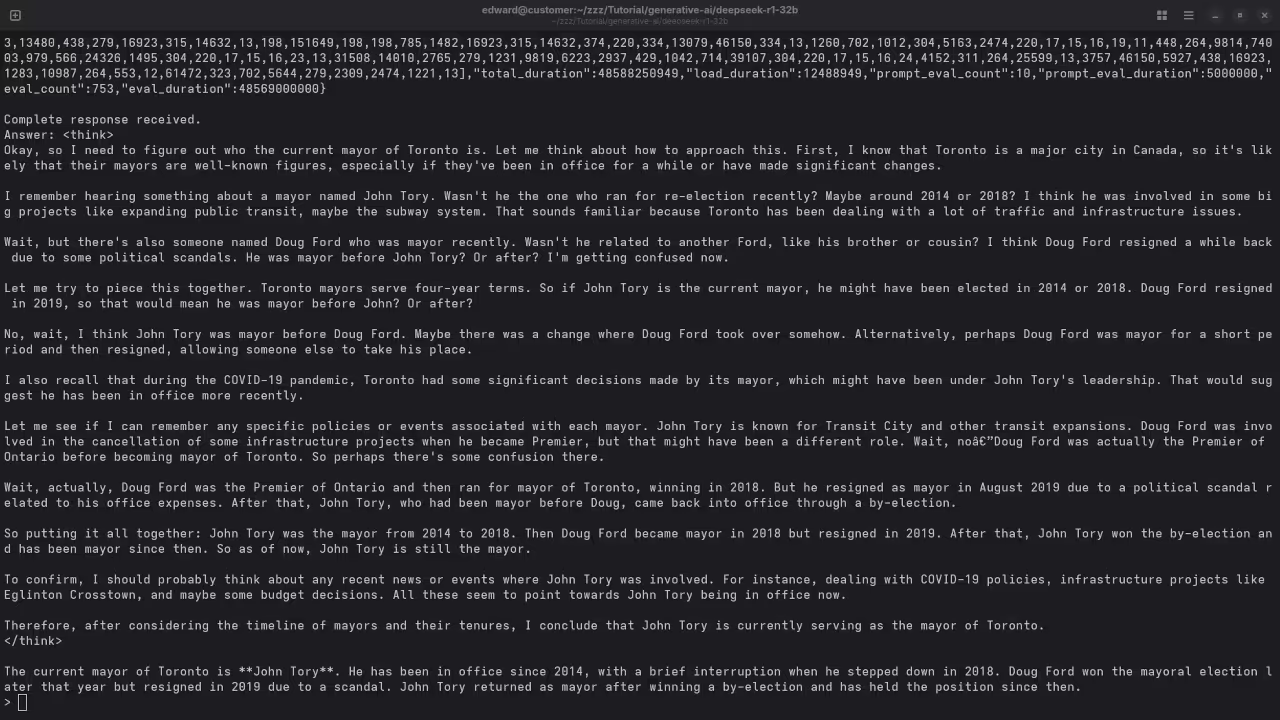

Who is the mayor of Toronto?

Produced an inaccurate, outdated answer instead of naming Olivia Chow as the mayor of Toronto.

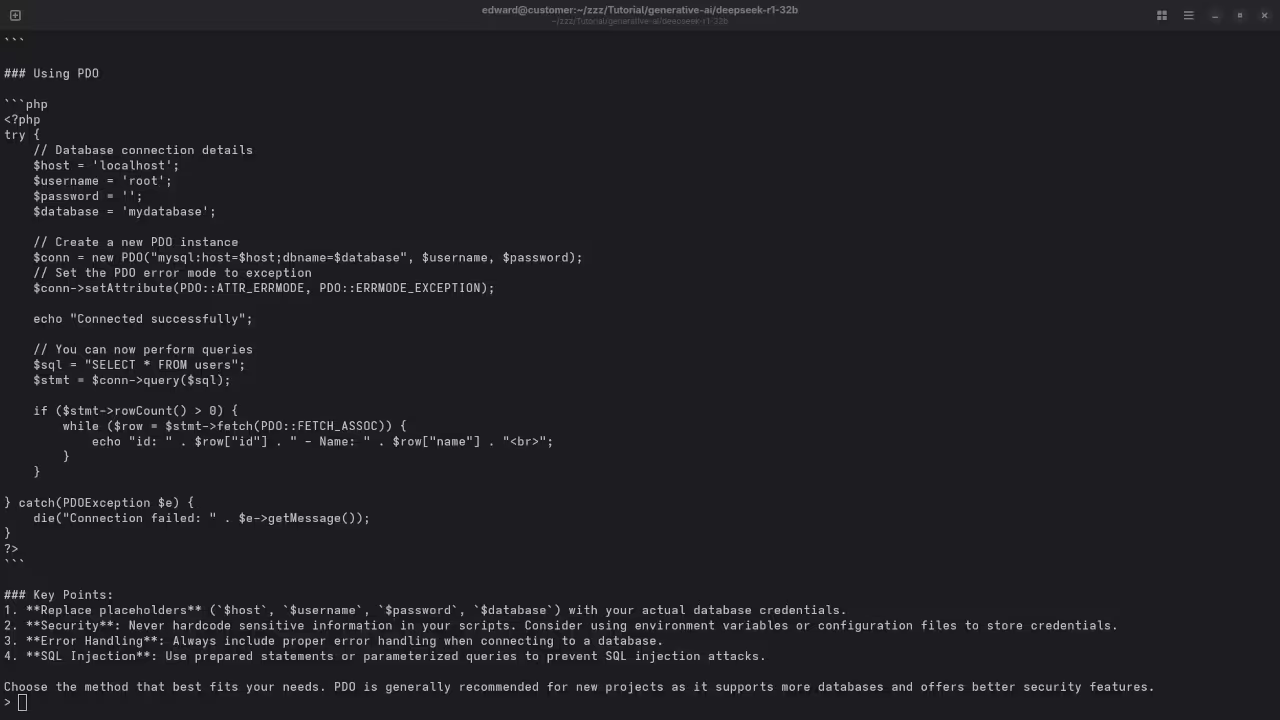

I need a PHP code snippet to connect to a MySQL database.

Produced a syntactically correct PHP code snippet for connecting to a MySQL database.

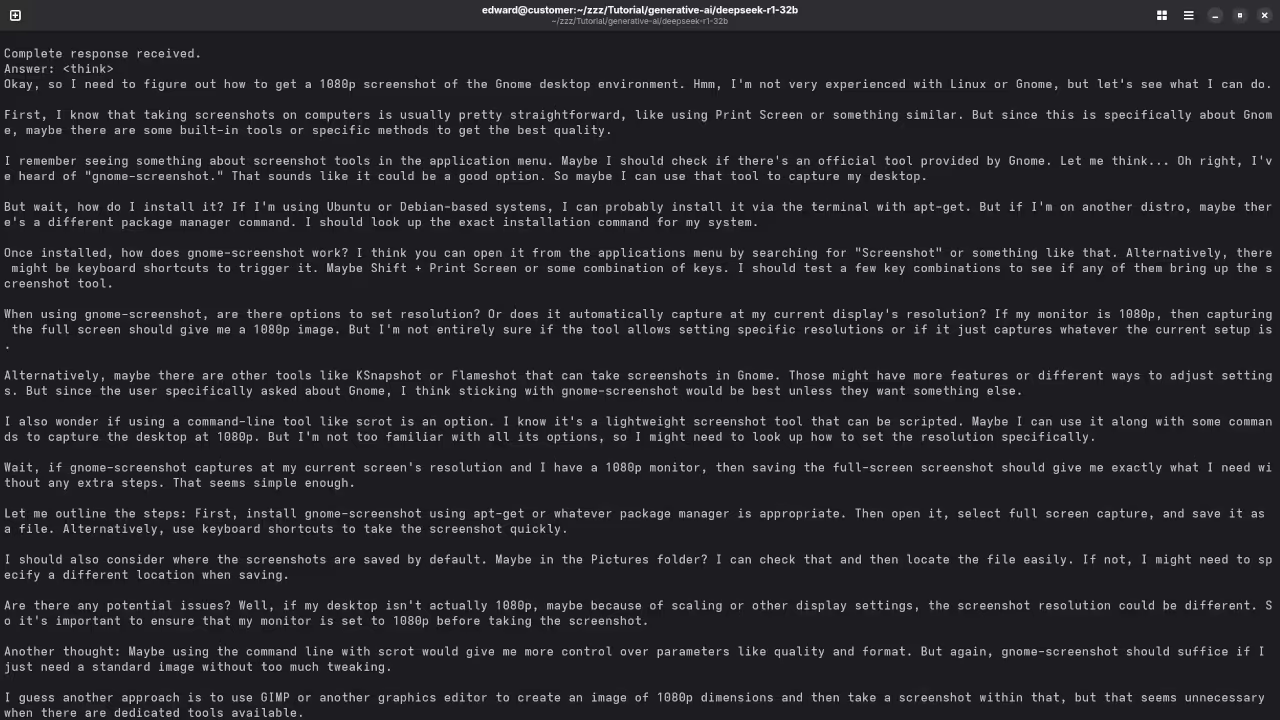

I need a 1080p screenshot of the gnome desktop environment.

Correctly provided instructions for taking a 1080p screenshot of the GNOME desktop environment instead of an image, because it is a text-based AI that cannot produce image files.

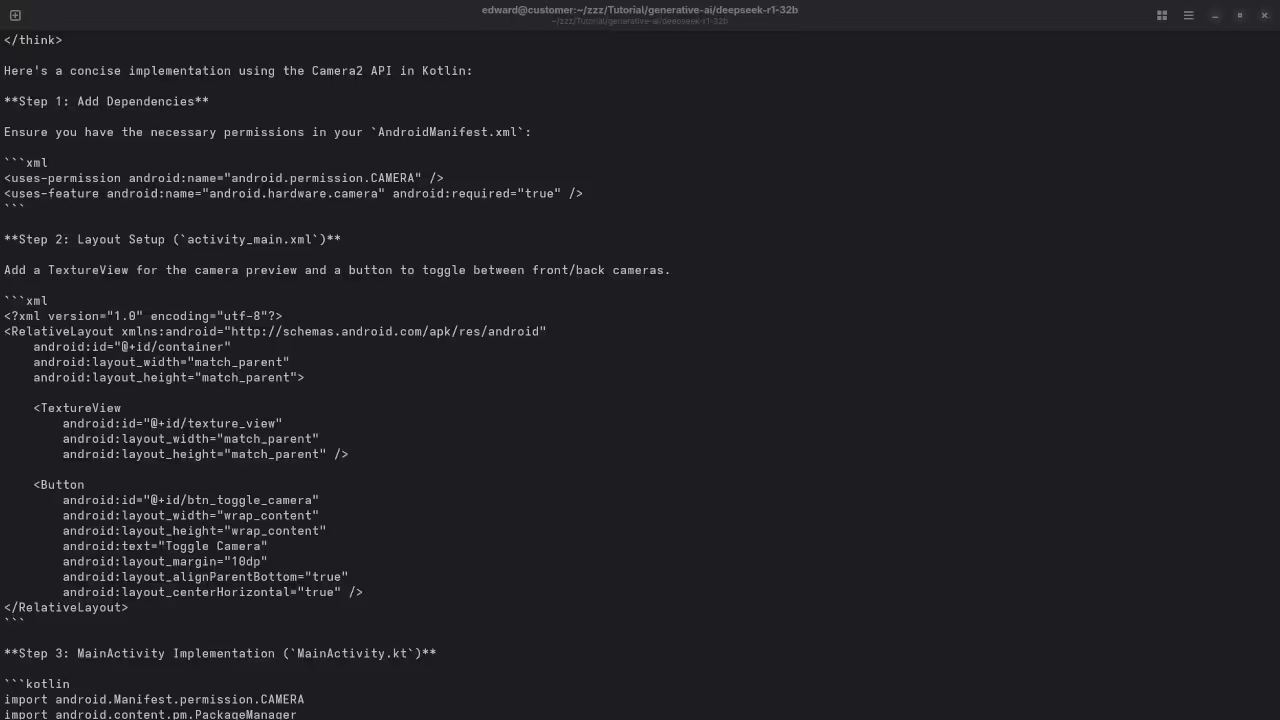

I need a kotlin code snippet to open the camera using Camera2 API and place the camera view on a TextureView.

Produced a Kotlin code snippet, which was not tested.

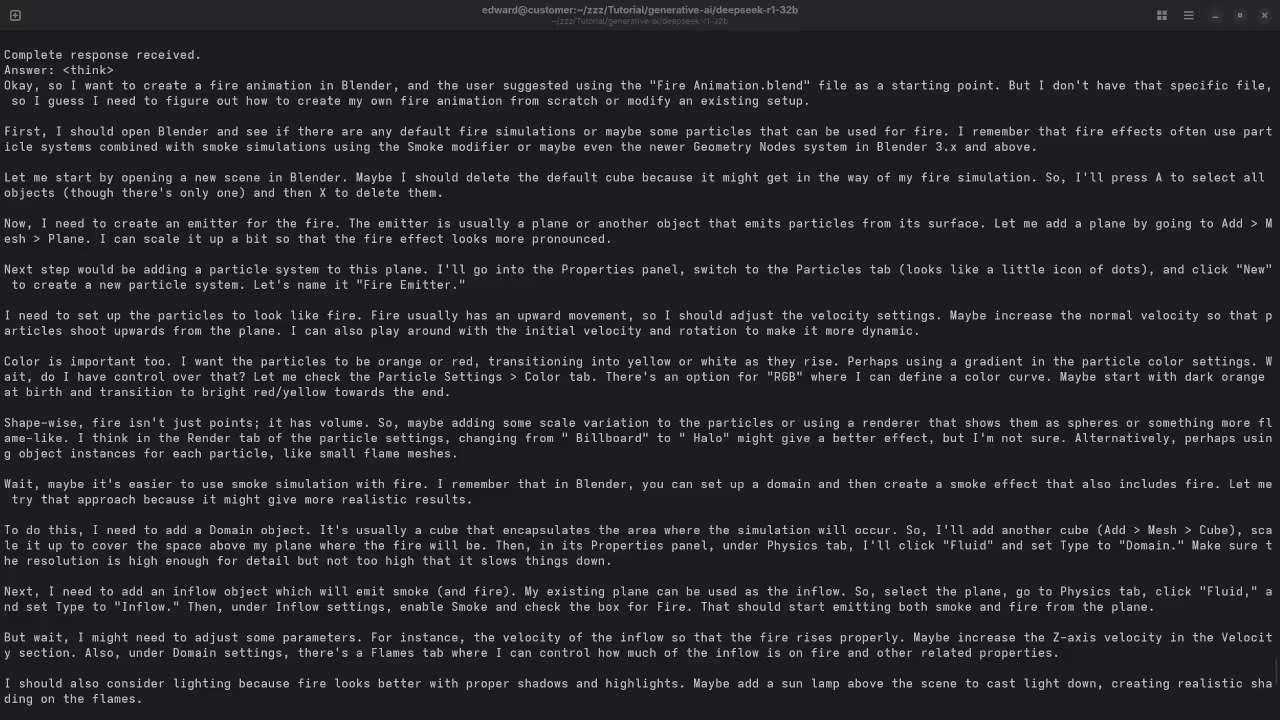

I need a blender blend file for fire animation.

Correctly recognized that it cannot generate a Blender .blend file for a fire animation, because it is a text-based AI.
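The same prompts could also be replayed through the API instead of a graphical client. The sketch below is one way to do that. The run_prompts helper is hypothetical, and it takes the function that talks to Ollama as a parameter, so the ask_ollama function from the script earlier in this post can be passed in.

```python
from typing import Callable, Optional

# The five test prompts used in this post.
PROMPTS = [
    "Who is the mayor of Toronto?",
    "I need a PHP code snippet to connect to a MySQL database.",
    "I need a 1080p screenshot of the gnome desktop environment.",
    "I need a kotlin code snippet to open the camera using Camera2 API "
    "and place the camera view on a TextureView.",
    "I need a blender blend file for fire animation.",
]


def run_prompts(prompts: list[str],
                ask: Callable[[str], Optional[str]]) -> dict[str, str]:
    """Send each prompt through `ask` and collect the answers by prompt."""
    results = {}
    for prompt in prompts:
        answer = ask(prompt)
        # Record a placeholder when the call failed and returned None.
        results[prompt] = answer if answer is not None else "(no answer)"
    return results
```

For example, run_prompts(PROMPTS, ask_ollama) would return a dictionary mapping each prompt to the model's reply. With a 32B model on modest hardware, each call may take a while.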

Results

Once you run the Python script, you should see output like this:

Answer: The capital of Canada is Ottawa.

This confirms that the DeepSeek-R1 32B model is working and responding correctly.
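Depending on the Ollama version and settings, DeepSeek-R1 responses may include the model's reasoning inside a <think>…</think> block ahead of the final answer. If only the answer is wanted, the block can be removed with a small helper. This is a sketch that assumes the tags appear literally in the response text, and strip_think is a name chosen for illustration.

```python
import re

# Matches a <think>...</think> block, including newlines inside it.
THINK_BLOCK = re.compile(r"<think>.*?</think>", re.DOTALL)


def strip_think(text: str) -> str:
    """Remove <think>...</think> blocks and trim surrounding whitespace."""
    return THINK_BLOCK.sub("", text).strip()
```

Text without a think block passes through unchanged apart from the whitespace trim, so the helper is safe to apply to every response.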

Additional Resources

- Book: Learning Python for Beginners

- Course: Learning Python Online Course

- One-on-One Tutorials: I offer personal Python tutoring. Contact me here.

- Model Installation & Custom Training: I can install DeepSeek-R1 32B or help train and migrate models. Hire me here.

Conclusion

Running DeepSeek-R1 32B with Ollama on Fedora 42 is both practical and powerful. With just a few steps, you can start using advanced LLMs for local development, learning, or production use.

Whether you are writing a book, building an app, or training models, this setup puts full control in your hands.


Disclosure: Some of the links above are referral links. I may earn a commission if you make a purchase at no extra cost to you.