Getting Started with LibreOffice + Llama.cpp Integration on Linux with an AMD Instinct MI60 GPU

If you are a Linux user who wants AI assistance inside LibreOffice, this guide shows how to connect Llama.cpp to LibreOffice Writer using an AMD Instinct MI60 GPU with 32GB of HBM2 memory. With this setup, text generated by a locally running model is inserted directly into a Writer document.

System Requirements

Before you start, make sure your system meets the following:

- Operating System: Linux (tested on Fedora 43)

- CPU: Modern multi-core processor

- GPU: AMD Instinct MI60 with 32GB HBM2 memory

- RAM: 32GB or higher recommended

- Storage: Minimum 50GB free space

- Software:

  - LibreOffice (latest stable version)

  - Llama.cpp (compiled for Linux with GPU support)

  - Python 3.10+ (for scripting and plugin integration)

  - Optional: Python AI libraries for extended functionality
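The software requirements above can be checked with a short preflight script. This is a minimal sketch, not part of the original setup: the function names are hypothetical, and it assumes the LibreOffice launcher is on your PATH as `libreoffice` or `soffice` and that the UNO bridge is importable as the `uno` module.

```python
import importlib.util
import shutil
import sys

MIN_PYTHON = (3, 10)  # minimum version listed in the requirements


def meets_min_version(current, minimum=MIN_PYTHON):
    """Return True if the (major, minor) part of `current` is at least `minimum`."""
    return tuple(current[:2]) >= tuple(minimum)


def preflight_report():
    """Collect simple yes/no checks for the software prerequisites."""
    return {
        "python_ok": meets_min_version(sys.version_info),
        # Assumption: the launcher is on PATH under one of these names.
        "libreoffice_found": any(
            shutil.which(name) for name in ("libreoffice", "soffice")
        ),
        # Assumption: the UNO bridge is importable as the `uno` module.
        "uno_importable": importlib.util.find_spec("uno") is not None,
    }
```

Calling `preflight_report()` returns a dictionary of booleans, so any entry that is `False` points at the prerequisite to install or fix first.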

Installation & Configuration

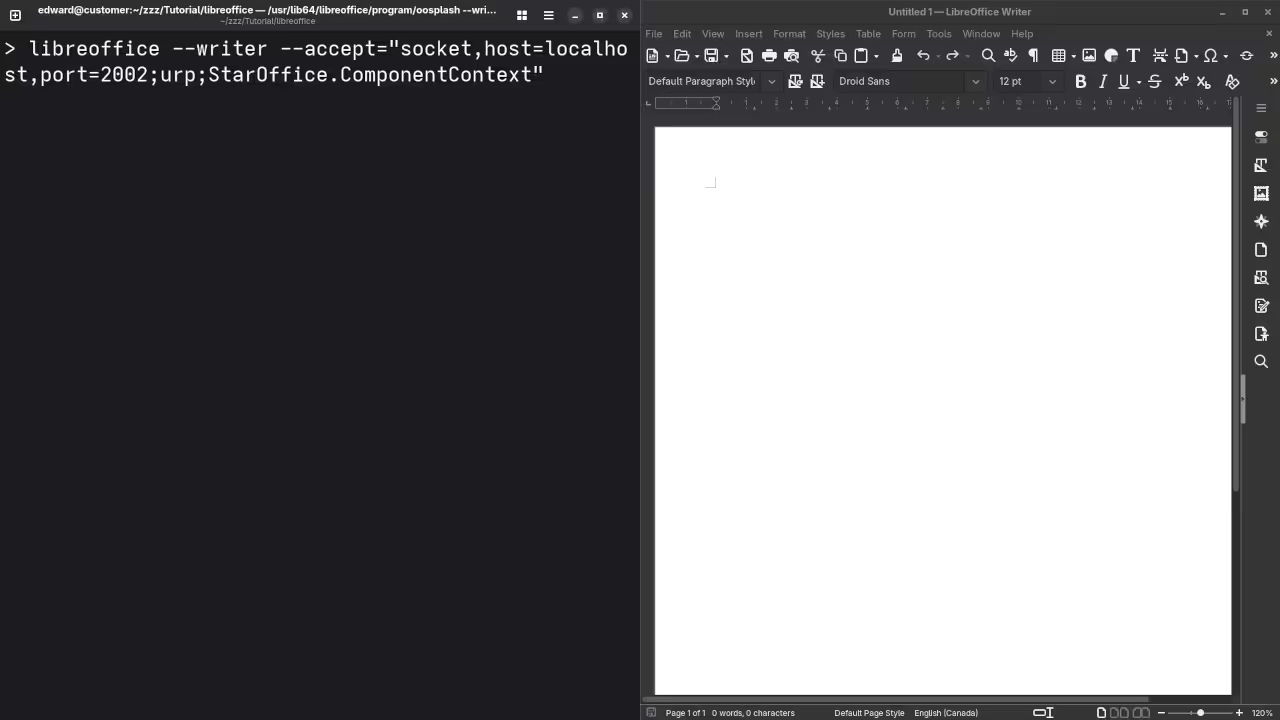

Step 1: Launch LibreOffice with Socket Connection

First, launch LibreOffice Writer in listening mode to accept connections. You can do this using the following command in your terminal:

    libreoffice --writer --accept="socket,host=localhost,port=2002;urp;StarOffice.ComponentContext"

This command lets other programs, such as the Python script in Step 3, send data into LibreOffice Writer over a TCP/IP socket connection on port 2002.
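Before running any client, it can help to confirm that LibreOffice is actually accepting connections on the socket. The following is a minimal sketch using only the standard library; the function name `is_listening` is hypothetical, and the host and port defaults simply mirror the launch command.

```python
import socket


def is_listening(host="localhost", port=2002, timeout=1.0):
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and name-resolution failures.
        return False
```

If `is_listening()` returns `False`, LibreOffice is probably not running with the `--accept` option, or another instance was already open when the command was issued.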

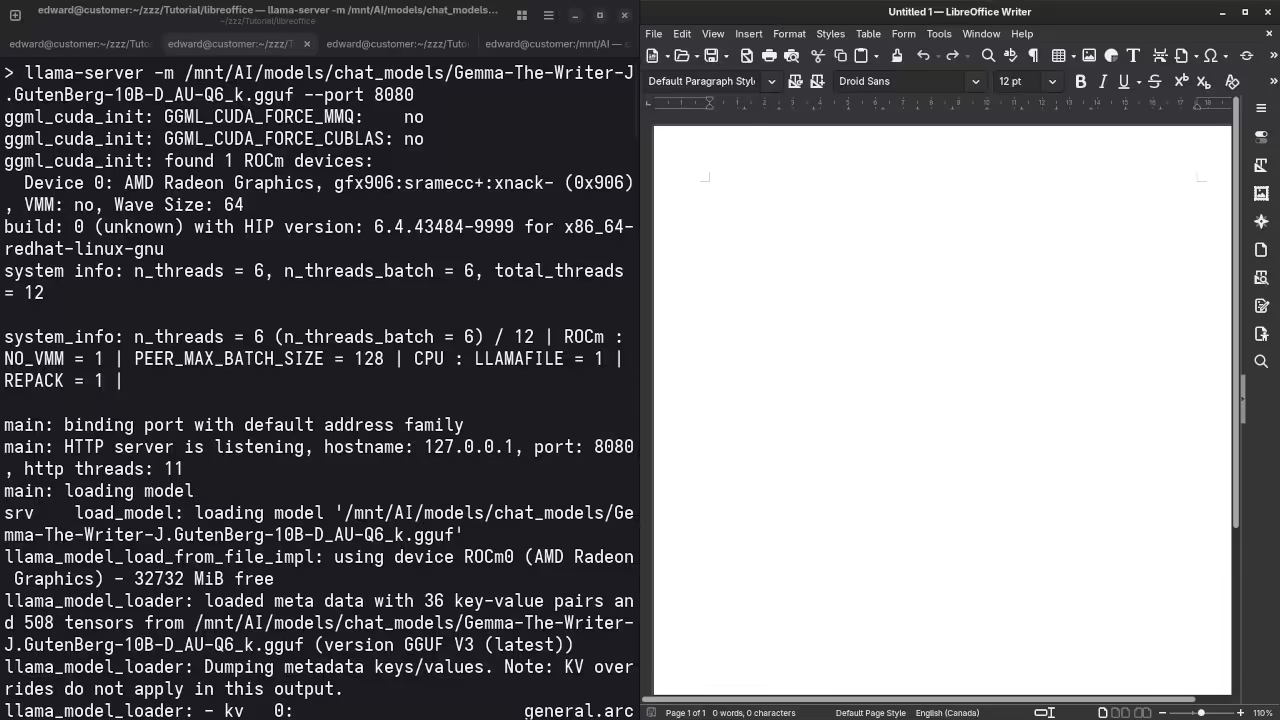

Step 2: Install and Run Llama.cpp

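Llama.cpp runs the LLaMA models on your system (in this case, on the AMD Instinct MI60). Assuming you have already built Llama.cpp for Linux with GPU support, you can start its server with a command like:

    ./llama-server -m /path/to/your/model --port 8080

This starts Llama.cpp listening for requests. It must use a different port from LibreOffice (2002 in Step 1), because two programs cannot listen on the same port. In recent Llama.cpp builds the server binary is typically named llama-server; adjust the name to match your build. Note that the script in Step 3 launches Llama.cpp itself as a subprocess, so the server is only needed if you prefer to query the model over the network.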
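If you run Llama.cpp as a server, a client can request text over HTTP instead of starting a new process for each prompt. The sketch below assumes the server listens on its own port (8080 here, separate from LibreOffice's 2002) and exposes a `/completion` endpoint that accepts `prompt` and `n_predict` and returns the text in a `content` field; check these against your build's server documentation. The function names are hypothetical.

```python
import json
import urllib.request

# Assumption: the Llama.cpp HTTP server runs on port 8080, not on 2002.
LLAMA_URL = "http://localhost:8080/completion"


def build_payload(prompt, n_predict=128):
    """Encode a completion request body as UTF-8 JSON bytes."""
    return json.dumps({"prompt": prompt, "n_predict": n_predict}).encode("utf-8")


def extract_text(response_body):
    """Pull the generated text out of a JSON response body."""
    return json.loads(response_body).get("content", "").strip()


def complete(prompt, url=LLAMA_URL, timeout=120):
    """POST the prompt to the server and return the generated text."""
    request = urllib.request.Request(
        url,
        data=build_payload(prompt),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_text(response.read())
```

Keeping the model loaded in a long-running server avoids reloading the weights onto the GPU for every prompt, which is the main reason to prefer this route over a subprocess call.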

Step 3: Python Script to Send Text to LibreOffice

Now we will write a Python script that integrates Llama.cpp with LibreOffice. The Python script will interact with both:

- Llama.cpp (to generate AI-powered text)

- LibreOffice (to display that text inside a Writer document)

Here is a simple example:

    import subprocess

    # PyUNO bridge; provided by LibreOffice's Python support
    import uno

    # Adjust the binary name and model path to match your Llama.cpp build
    LLAMA_BIN = "./llama"
    MODEL_PATH = "/path/to/your/model"

    # Must match the --accept string used to launch LibreOffice in Step 1
    UNO_URL = "uno:socket,host=localhost,port=2002;urp;StarOffice.ComponentContext"


    def connect_to_libreoffice():
        """Connect to LibreOffice through UNO (Universal Network Objects)."""
        local_context = uno.getComponentContext()
        resolver = local_context.ServiceManager.createInstanceWithContext(
            "com.sun.star.bridge.UnoUrlResolver", local_context
        )
        context = resolver.resolve(UNO_URL)
        desktop = context.ServiceManager.createInstanceWithContext(
            "com.sun.star.frame.Desktop", context
        )
        doc = desktop.getCurrentComponent()
        if doc is None:
            raise RuntimeError("No open document found in LibreOffice.")
        return doc


    def generate_text_with_llama(prompt):
        """Run Llama.cpp as a subprocess and return the text it prints."""
        result = subprocess.run(
            [LLAMA_BIN, "-m", MODEL_PATH, "--prompt", prompt],
            capture_output=True,
            text=True,
            check=True,  # raise CalledProcessError if Llama.cpp exits with an error
        )
        return result.stdout.strip()


    def main():
        # Connect to LibreOffice
        doc = connect_to_libreoffice()

        # Generate text using Llama.cpp
        prompt = "Write a short paragraph on AI in everyday life."
        generated_text = generate_text_with_llama(prompt)

        # Insert the generated text into the LibreOffice document
        cursor = doc.Text.createTextCursor()
        doc.Text.insertString(cursor, generated_text, False)
        print("Text inserted into LibreOffice Writer successfully!")


    if __name__ == "__main__":
        main()

Breakdown of the Python Script:

- connect_to_libreoffice(): This function connects to the running instance of LibreOffice using the UNO API and the socket interface.

- generate_text_with_llama(): This function runs Llama.cpp as a subprocess, passing a prompt and receiving the AI-generated text output. In this case, it prompts Llama.cpp to generate a short paragraph on AI.

- main(): This ties everything together:

  - Connect to LibreOffice.

  - Generate text with Llama.cpp.

  - Insert the generated text into the LibreOffice Writer document.
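The subprocess step described above can hang or fail on long prompts, so a slightly more defensive variant may be useful. This is a sketch under stated assumptions: `run_generator` and `strip_echoed_prompt` are hypothetical helpers, the 300-second timeout is an arbitrary choice, and whether your Llama.cpp binary echoes the prompt back depends on the build and its options.

```python
import subprocess


def run_generator(command, timeout=300):
    """Run a text-generation command and return its stdout, stripped.

    Raises RuntimeError on a non-zero exit code or a timeout.
    """
    try:
        result = subprocess.run(
            command, capture_output=True, text=True, timeout=timeout
        )
    except subprocess.TimeoutExpired as exc:
        raise RuntimeError(f"generator timed out after {timeout}s") from exc
    if result.returncode != 0:
        raise RuntimeError(f"generator failed: {result.stderr.strip()}")
    return result.stdout.strip()


def strip_echoed_prompt(output, prompt):
    """Drop the prompt from the start of the output if the tool echoed it."""
    if output.startswith(prompt):
        return output[len(prompt):].lstrip()
    return output
```

In the script, `command` would be the same argument list passed to `subprocess.run` in `generate_text_with_llama()`, and the cleaned result is what gets handed to `insertString`.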

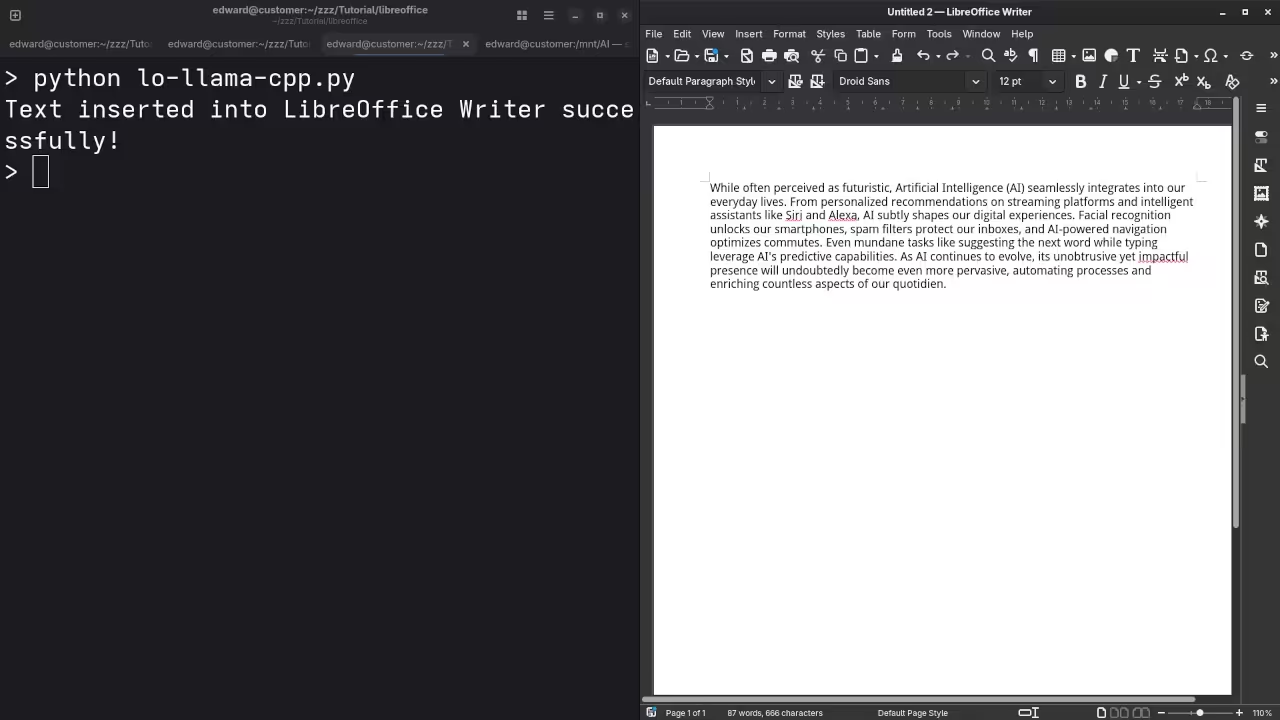

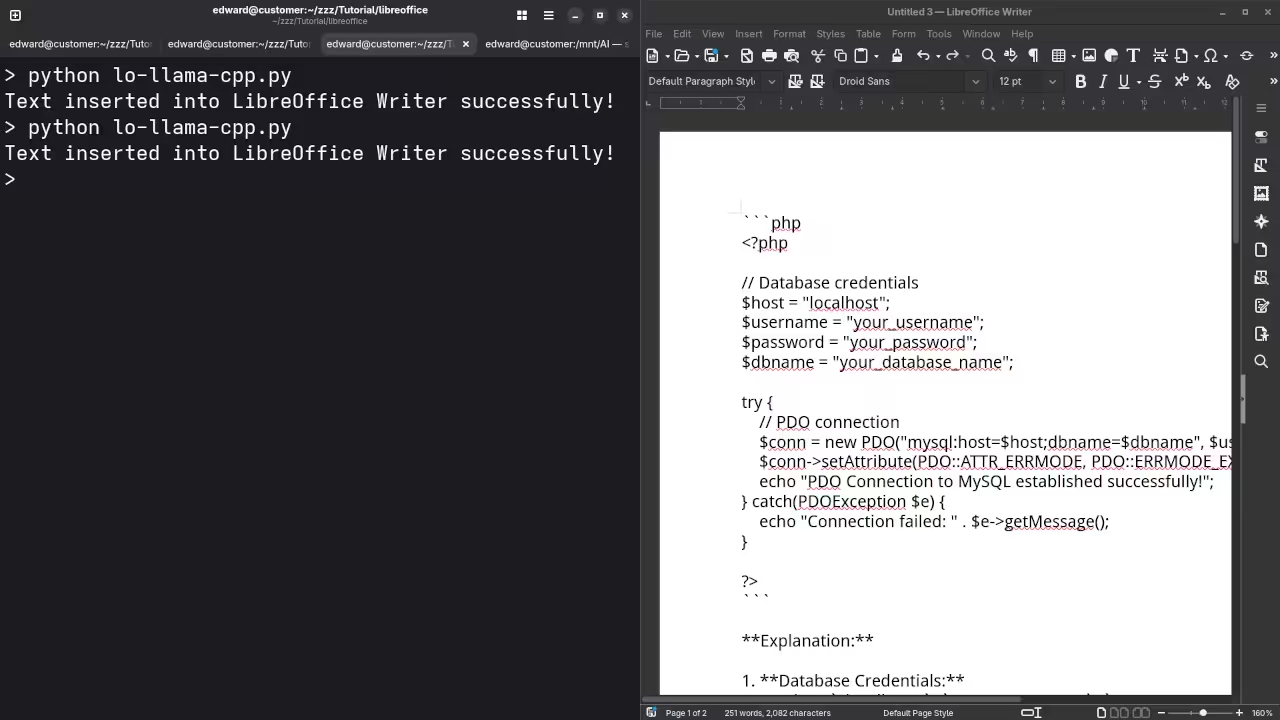

Step 4: Test Your Setup

Once you have everything running, here is how you can test it:

- Start LibreOffice with the socket command.

- Start Llama.cpp.

- Run the Python script.

Check your LibreOffice Writer document to see if the AI-generated text appears.
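The visual check can also be done from Python by reading the document text back over the same UNO connection. This is a minimal sketch: `document_contains` and `normalize` are hypothetical helpers, and `doc` is assumed to be the Writer document object returned by `connect_to_libreoffice()`.

```python
def normalize(text):
    """Collapse all runs of whitespace so line-break differences do not matter."""
    return " ".join(text.split())


def document_contains(doc, snippet):
    """Return True if the Writer document's body text includes `snippet`."""
    return normalize(snippet) in normalize(doc.Text.getString())
```

Calling `document_contains(doc, generated_text)` right after the insert gives a quick pass/fail signal without switching to the LibreOffice window.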

Test Tools

| Name | Description |
|---|---|
| CPU | AMD Ryzen 5 5600GT (6C/12T, 3.6GHz) |
| Memory | 32GB DDR4 |
| GPU | AMD Instinct MI60 (32GB HBM2) |
| Operating System | Fedora Linux Workstation 43 |
| Desktop Environment | GNOME 49 |

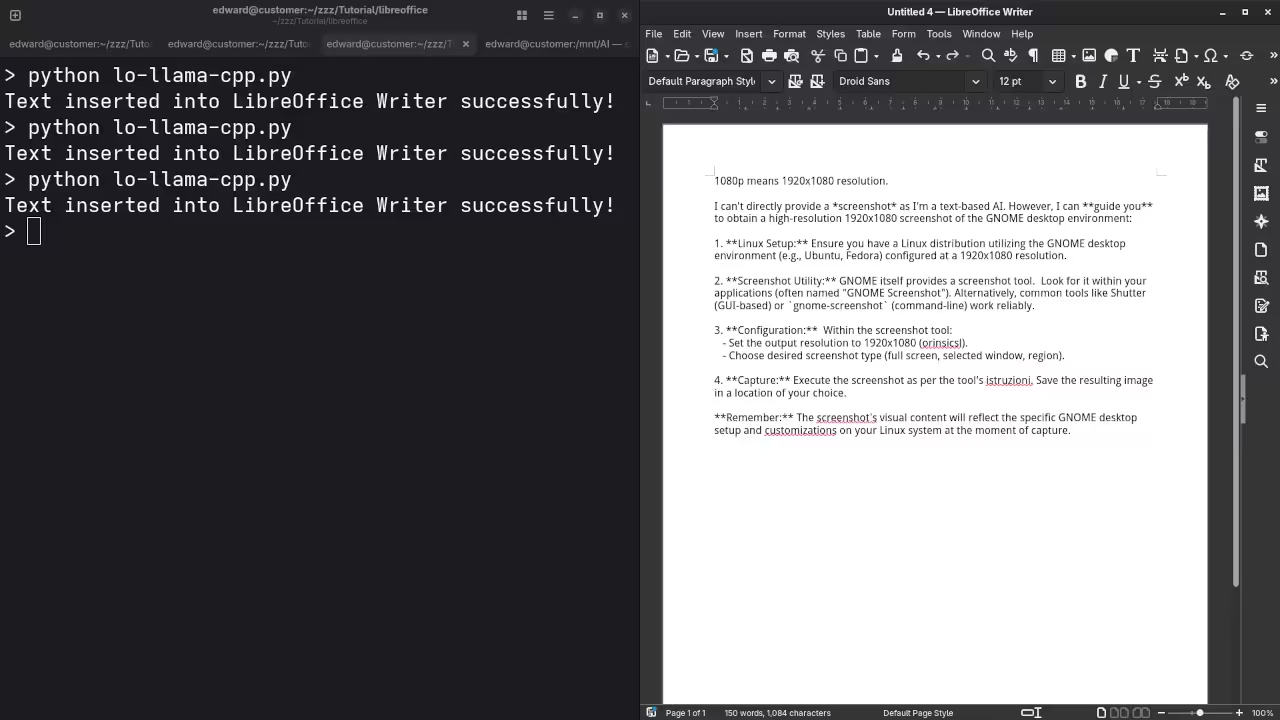

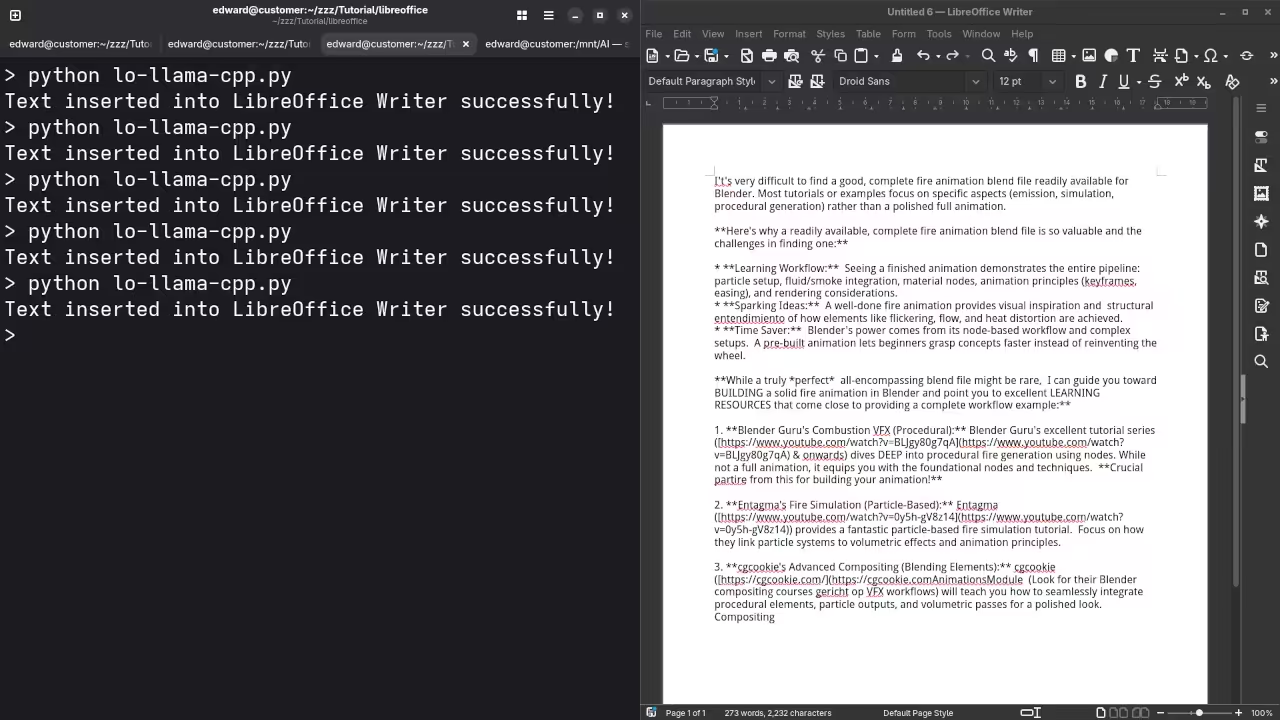

Screenshots and Screencast

Here’s where you’ll find a visual walkthrough of setting up LibreOffice with Llama.cpp on your local system:

Results:

Prompt: Who is the mayor of Toronto?

Result: Produced an accurate, current answer, naming Olivia Chow as the mayor of Toronto.

Prompt: I need a PHP code snippet to connect to a MySQL database.

Result: Produced a syntactically correct PHP code snippet for connecting to a MySQL database.

Prompt: I need a 1080p screenshot of the gnome desktop environment.

Result: Correctly explained that, as a text-based AI, it cannot produce images, and instead provided instructions for taking a 1080p screenshot of the GNOME desktop environment.

Prompt: I need a kotlin code snippet to open the camera using Camera2 API and place the camera view on a TextureView.

Result: The first attempt never completed. After a server restart, it produced a Kotlin code snippet, which has not been tested.

Prompt: I need a blender blend file for fire animation.

Result: Correctly recognized that, as a text-based AI, it cannot generate a Blender .blend file for a fire animation.

Additional Resources

If you want to learn more about Python or AI development, check out these resources:

- Book: Learning Python

- Course: Learning Python

If you need one-on-one assistance or want me to help you install LibreOffice or Llama.cpp, I am available for personal tutorials and service requests:

- One-on-one Python tutorials: Contact Me

- LibreOffice + Llama.cpp installation or migration: Contact Services


Disclosure: Some of the links above are referral (affiliate) links. I may earn a commission if you purchase through them - at no extra cost to you.