Getting Started with Llama 3.2 Vision 11B on Alpaca Ollama: AI on Your Desktop

Artificial Intelligence is getting more powerful – and more accessible – by the day. If you’re curious about running large language models (LLMs) with vision support directly on your local machine, then Llama 3.2 Vision 11B, run through the Alpaca Ollama client, is a great place to start.

In this post, I’ll walk you through what Llama 3.2 Vision 11B is, how to run it using Alpaca (a lightweight Ollama client), and what to consider in terms of licensing and usage restrictions.

What is Llama 3.2 Vision 11B?

Llama 3.2 Vision 11B is an experimental 11-billion-parameter AI model designed for both language understanding and vision tasks, such as image captioning, visual Q&A, and multimodal reasoning. It’s part of the Llama 3.2 release in Meta’s Llama 3 family, tailored to support vision alongside natural language processing.

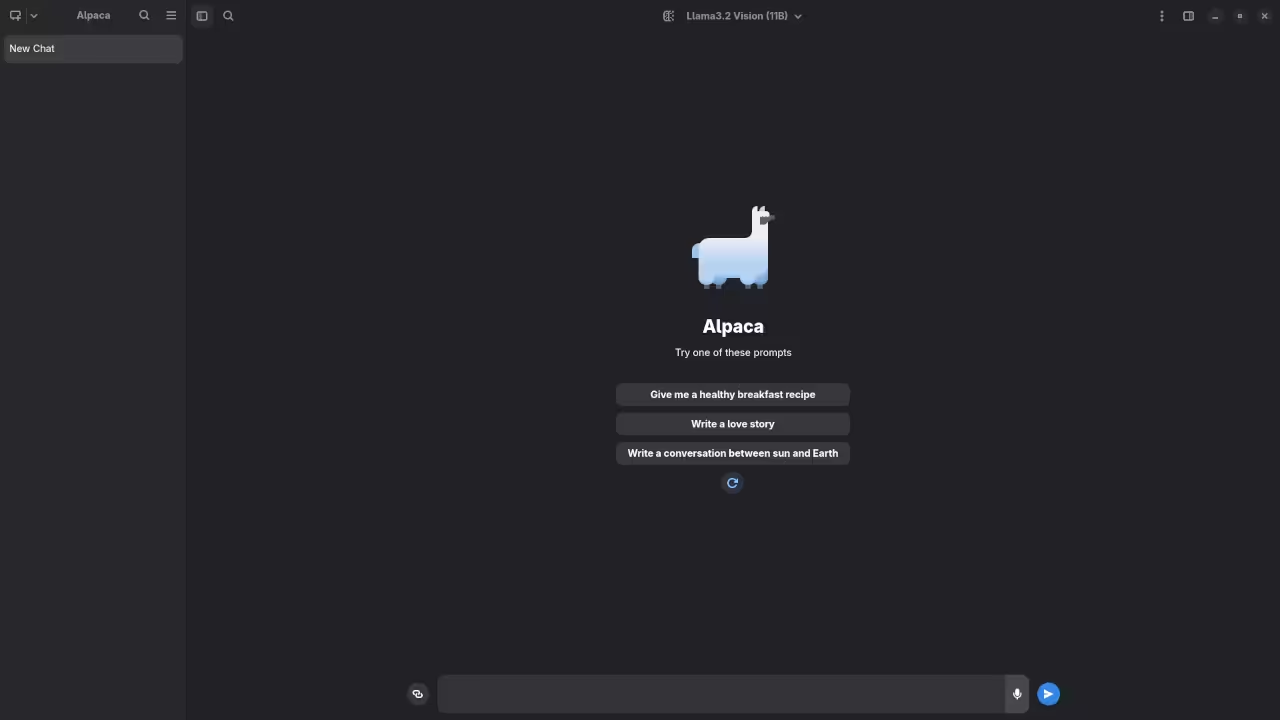

You can run this model directly on your machine using the Alpaca Ollama client, which simplifies the process of loading and interacting with LLMs locally.

Alpaca GitHub Repository: https://github.com/Jeffser/Alpaca
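If you’d rather script a visual Q&A request than type it into the Alpaca window, here’s a minimal Python sketch that talks to an Ollama server over its REST chat endpoint. It rests on a few assumptions that are not from this post: the server is reachable at Ollama’s default address `http://localhost:11434` (Alpaca’s managed instance may listen on a different port, so check its settings), the model was pulled under the tag `llama3.2-vision:11b`, and the helper names `build_vision_request` and `ask_about_image` are made up for illustration.

```python
import base64
import json
import urllib.request

# Assumption: default local Ollama address; adjust if your instance differs.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"
# Assumption: the tag the model was pulled under.
MODEL_TAG = "llama3.2-vision:11b"


def build_vision_request(question: str, image_bytes: bytes, model: str = MODEL_TAG) -> dict:
    """Build a non-streaming chat payload with one base64-encoded image attached."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "stream": False,
        "messages": [{"role": "user", "content": question, "images": [encoded]}],
    }


def ask_about_image(question: str, image_path: str) -> str:
    """Send the question and image to the local server and return the reply text."""
    with open(image_path, "rb") as handle:
        payload = build_vision_request(question, handle.read())
    request = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        reply = json.loads(response.read().decode("utf-8"))
    return reply["message"]["content"]
```

With the server running, a call such as `ask_about_image("What is in this picture?", "photo.png")` should return the model’s answer as a string (`photo.png` is a placeholder file name). The sketch sticks to the standard library so it has nothing extra to install.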

Is it Open Source?

Yes, the Alpaca client is open source and licensed under the GNU General Public License v3.0 (GPL-3.0).

However, the Llama 3.2 Vision 11B model itself is not fully open source. It is released by Meta under the Llama 3.2 Community License Agreement, which includes several usage restrictions:

- Commercial Use: Permitted, but organizations with more than 700 million monthly active users must request a separate license from Meta.

- Redistribution: Permitted if you include a copy of the license and display “Built with Llama” attribution.

- Model Alteration: Allowed, but derivative models remain subject to the original license.

View the full Llama 3 license details here: Meta Llama 3 License

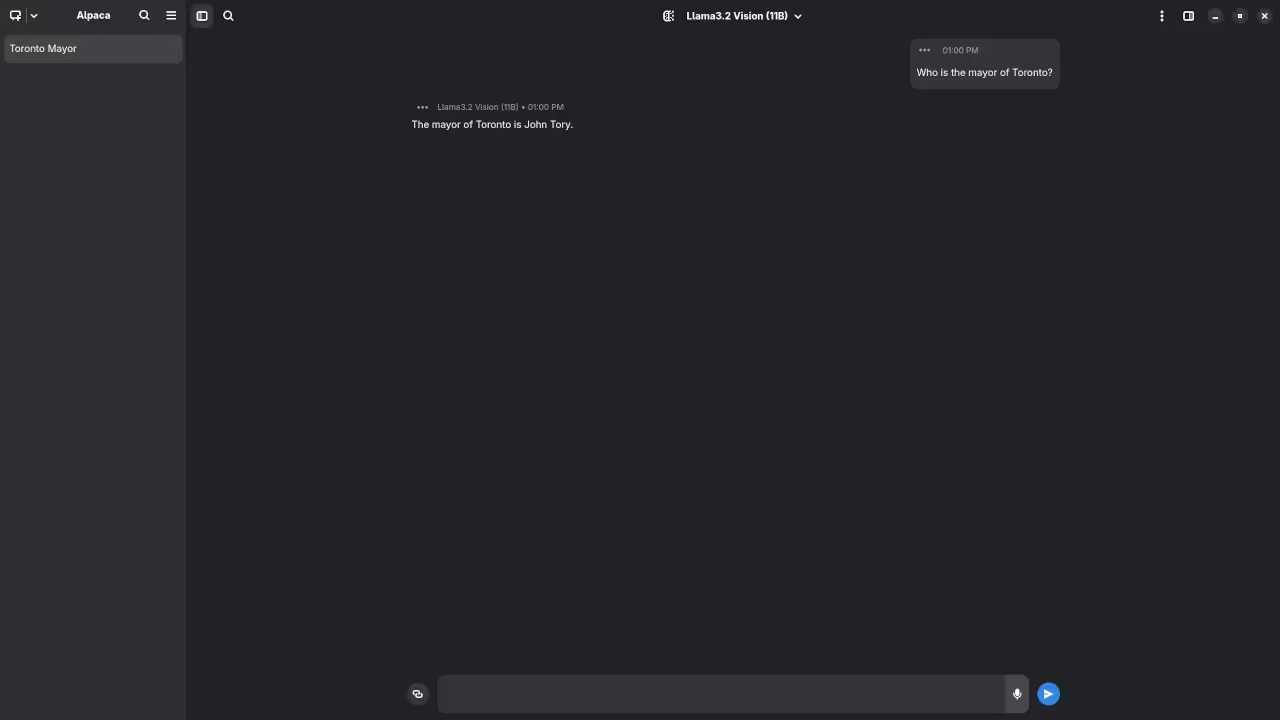

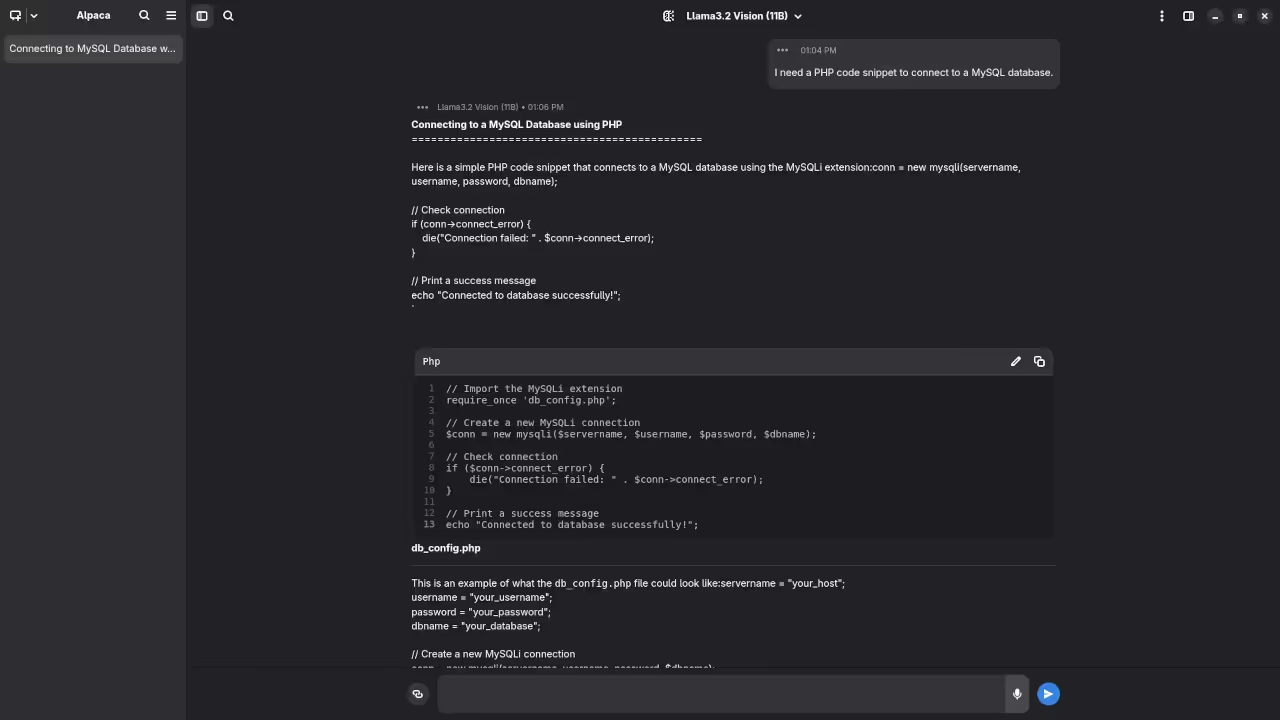

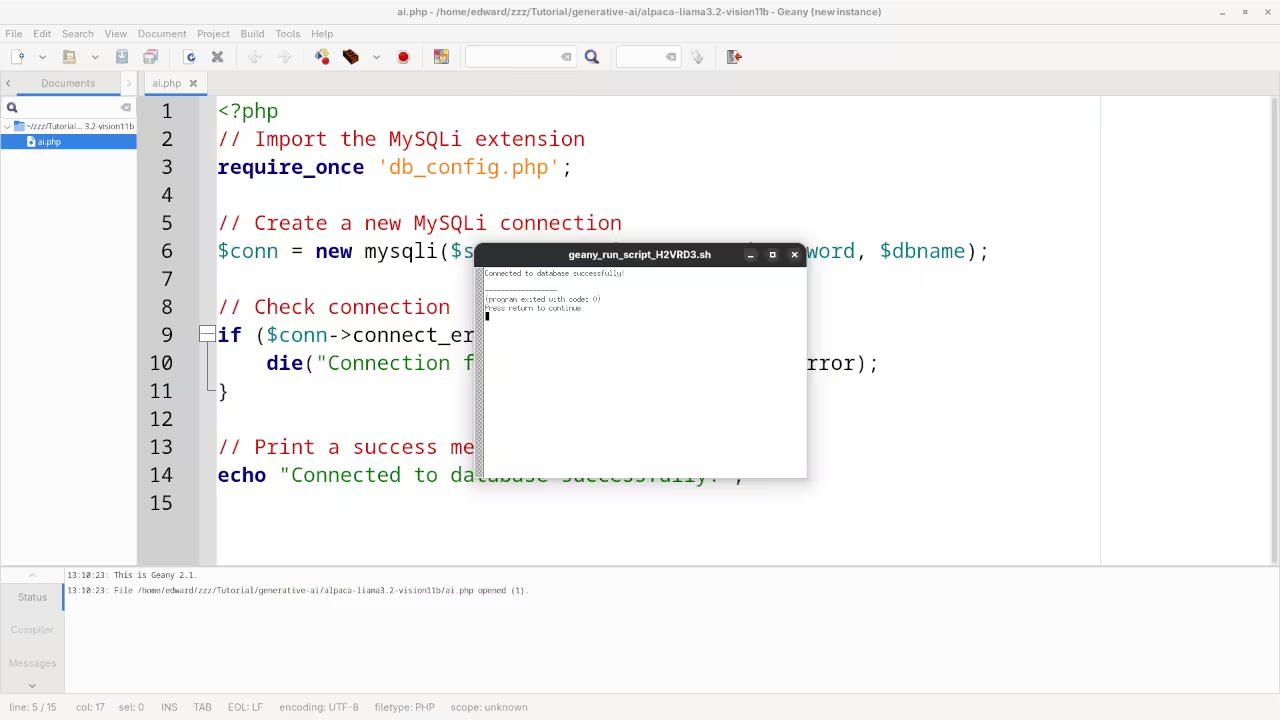

Screenshots and Screencast

Here’s where you’ll find a visual walkthrough of setting up Llama 3.2 Vision 11B using Alpaca Ollama on your local system:

Results:

Who is the mayor of Toronto?

Produced an inaccurate, outdated answer instead of naming Olivia Chow as the mayor of Toronto.

I need a PHP code snippet to connect to a MySQL database.

Crashed, then produced a syntactically correct PHP code snippet to connect to a MySQL database.

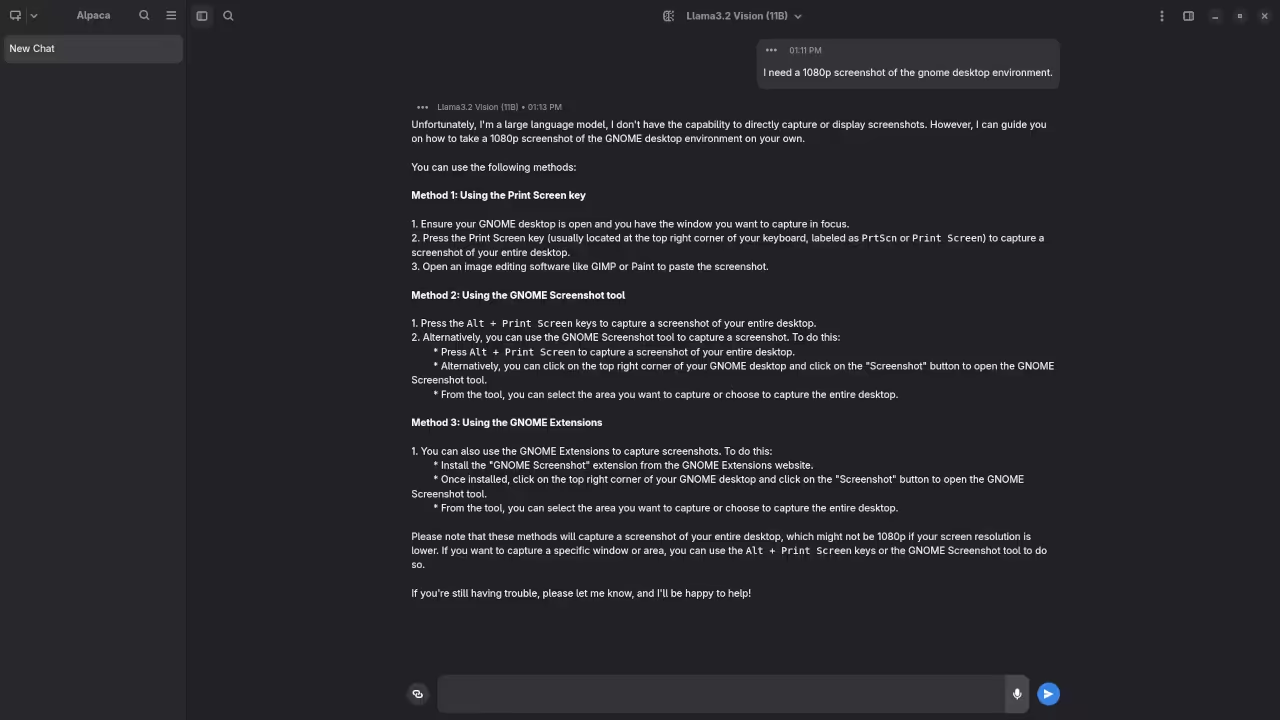

I need a 1080p screenshot of the gnome desktop environment.

Provided accurate instructions for capturing a 1080p screenshot of the GNOME desktop environment, because as a text-based AI it cannot generate the image itself.

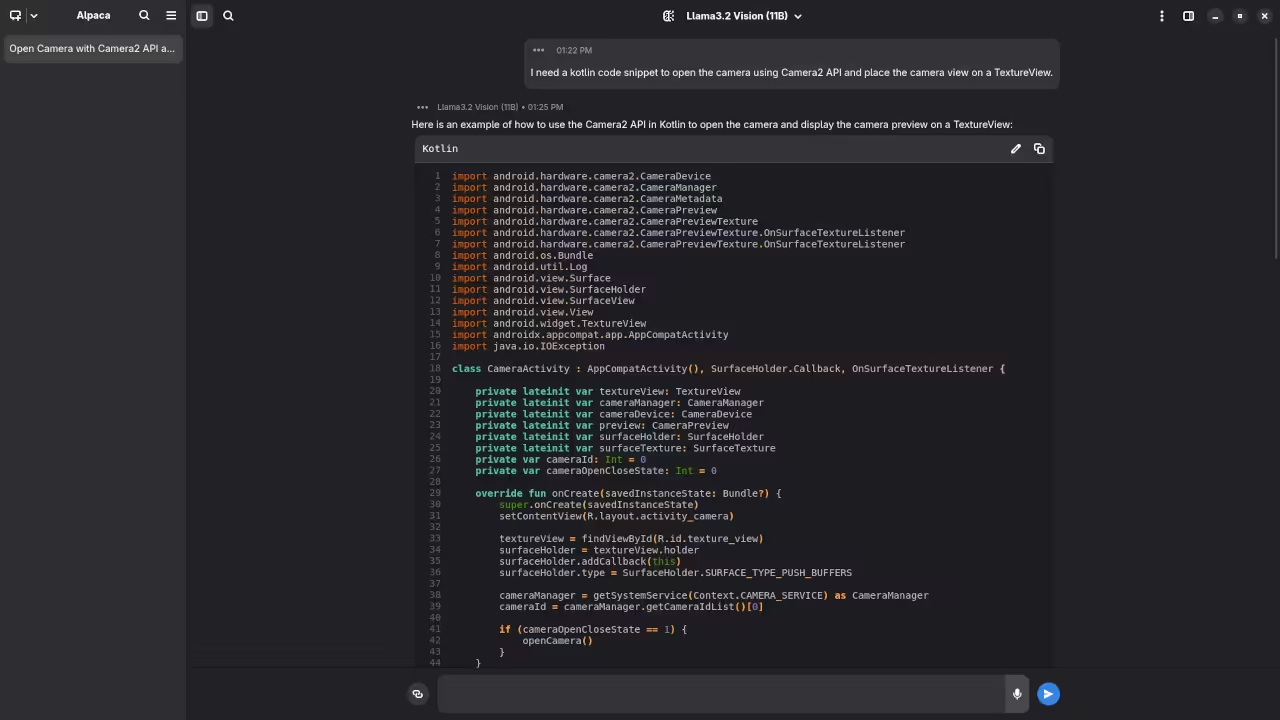

I need a kotlin code snippet to open the camera using Camera2 API and place the camera view on a TextureView.

Crashed, then produced an incomplete Kotlin code snippet.

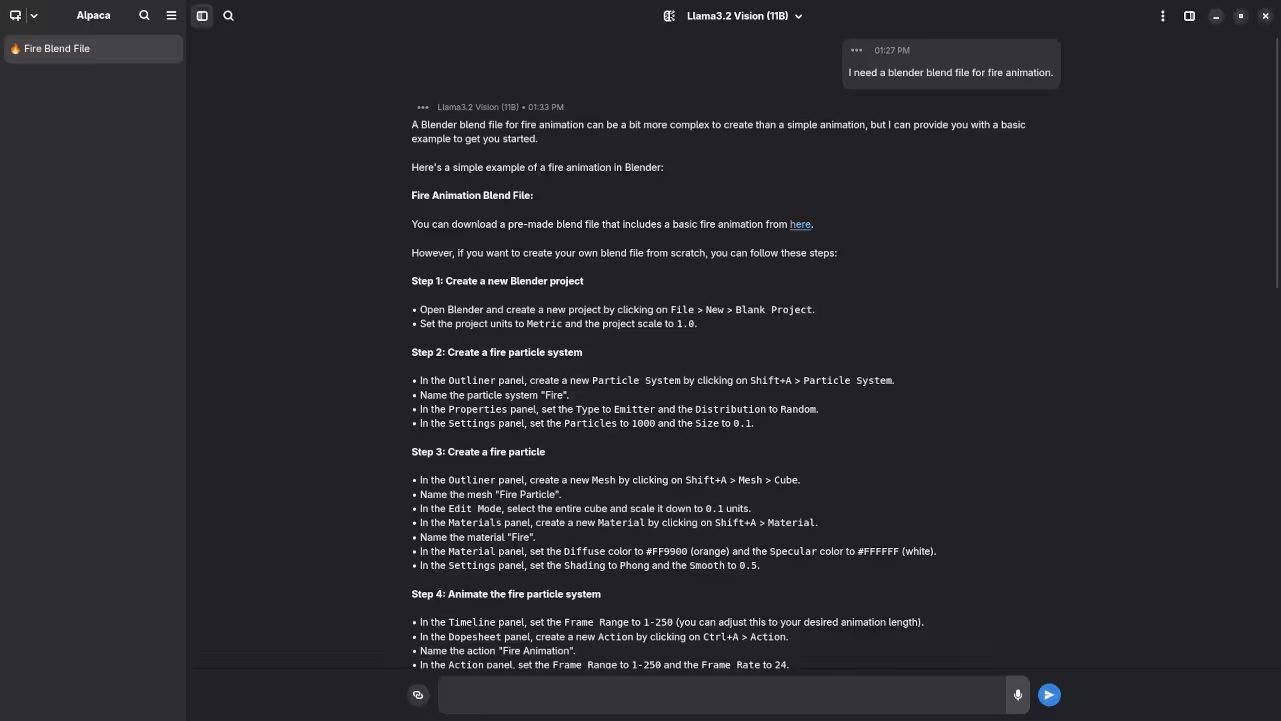

I need a blender blend file for fire animation.

Correctly recognized that it cannot generate a Blender .blend file for a fire animation, because it is a text-based AI without that capability.
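If you want to repeat a prompt test like this one in a script, here’s a small Python sketch of one way to do it. It is not how the results above were gathered (those came from the Alpaca window); it simply records each prompt, how many attempts failed, and the final answer. The `run_prompts` helper and the `send` callable are hypothetical names: `send` stands for whatever function you use to pass a prompt to the model and get text back.

```python
from typing import Callable, Dict, List, Optional


def run_prompts(
    prompts: List[str],
    send: Callable[[str], str],
    max_attempts: int = 2,
) -> List[Dict[str, object]]:
    """Run each prompt, retrying after a failure, and log what happened."""
    results = []
    for prompt in prompts:
        failures = 0
        answer: Optional[str] = None
        for _ in range(max_attempts):
            try:
                answer = send(prompt)
                break  # got a reply, stop retrying
            except Exception:
                failures += 1  # count the crash and try again
        results.append(
            {"prompt": prompt, "failed_attempts": failures, "answer": answer}
        )
    return results
```

A prompt that crashed once and then answered would show up with `failed_attempts` equal to 1 and a non-empty `answer`, which matches the “crashed, then produced” pattern in the notes above. Judging whether an answer is accurate is still a manual step.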

Want to Learn Python First?

Before diving deep into AI and LLMs, you might want to strengthen your Python skills – since many LLM tools are built with Python or offer Python bindings.

I wrote a beginner-friendly book called:

Learning Python

Available on Amazon Kindle

Also available as an online course here:

Learning Python Course

Need Help One-on-One?

If you’d like personalized help setting up Llama 3.2 Vision 11B, or even just learning Python in a practical way:

Book a 1-on-1 Online Python Tutorial:

https://ojambo.com/contact

Need Installation or Migration Help for Llama 3.2 Vision 11B?

https://ojamboservices.com/contact

Summary

- Llama 3.2 Vision 11B is a powerful, partially open AI model with vision capabilities.

- You can run it locally using the Alpaca Ollama client.

- The license places conditions on commercial use and redistribution – make sure to review it before using the model in production.

- Check out my book, course, and tutorials if you’re new to Python or AI development.

Let me know in the comments if you’ve tried this setup – or if you’d like a full installation walkthrough in a future post.

Disclosure: Some of the links above are referral links. I may earn a commission if you make a purchase at no extra cost to you.