How to Run Phi 2.7B Locally Using Alpaca (Ollama Client)

If you’re interested in experimenting with powerful AI models on your own machine, Phi 2.7B is a great starting point. In this post, I’ll walk you through how to run Phi 2.7B, a small but capable large language model (LLM), using the Alpaca Ollama client, available on GitHub:

🔗 Alpaca by Jeffser on GitHub

Phi 2.7B is developed by Microsoft and designed for code generation and general language tasks, making it especially suitable for learning and experimentation. This guide is ideal for beginners who want to set up an LLM locally without complex configuration.

⚡ What is Phi 2.7B?

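Phi 2.7B is a lightweight LLM trained largely on synthetic, “textbook-quality” data and aimed at reasoning and coding tasks. It is a base model: according to Microsoft, it has not undergone instruction fine-tuning or reinforcement learning from human feedback. It offers: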

- Fast performance on consumer-grade GPUs or CPUs

- A focus on helpful, harmless, and honest output

- Impressive accuracy for its small size

Its weights are also openly available, for example on Hugging Face as microsoft/phi-2.
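The Phi-2 model card on Hugging Face suggests a few plain-text prompt layouts. These include a QA format (`Instruct: <prompt>` followed by `Output:`) and a dialogue format with named speakers. The Python sketch below builds those strings. It assumes you send them to the model verbatim, for example through Ollama's `/api/generate` endpoint with its `raw` option enabled, which skips the model's built-in template. The helper names `qa_prompt` and `chat_prompt` are hypothetical.

```python
# Prompt helpers for the plain-text formats shown on the Phi-2 model card.
# Assumption: the strings are sent to the model verbatim (e.g. a "raw" request),
# so no additional chat template is wrapped around them.


def qa_prompt(instruction: str) -> str:
    """Build the 'Instruct: ... / Output:' QA prompt, leaving the answer open."""
    return f"Instruct: {instruction.strip()}\nOutput:"


def chat_prompt(turns: list[tuple[str, str]], next_speaker: str) -> str:
    """Build a 'Name: text' dialogue and leave the next speaker's turn open."""
    lines = [f"{speaker}: {text.strip()}" for speaker, text in turns]
    lines.append(f"{next_speaker}:")
    return "\n".join(lines)


if __name__ == "__main__":
    print(qa_prompt("Who is the mayor of Toronto?"))
    print(chat_prompt([("Alice", "Any tips for studying?")], "Bob"))
```

In everyday use through Alpaca or `ollama run`, Ollama applies the model's own template, so helpers like these mostly matter when experimenting with raw prompts.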

🔒 License and Use Cases for Phi 2.7B

Phi 2.7B is part of Microsoft’s Phi family of Small Language Models (SLMs), built for on-device AI applications without requiring cloud access. These models are designed to be:

- Compact enough to run on consumer hardware

- Efficient for edge computing and offline scenarios

- Accessible to developers and researchers
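To see why a 2.7-billion-parameter model counts as compact, a back-of-the-envelope estimate of weight memory helps: parameters × bits per parameter ÷ 8 gives bytes. The sketch below assumes exactly 2.7 billion parameters and ignores the KV cache, activations, and runtime overhead, so real memory use will be higher. Quantized formats also store extra scale values, so their effective bits per weight sit slightly above the nominal width.

```python
# Rough, weights-only memory estimate for a 2.7-billion-parameter model.
# Assumption: KV cache, activations and runtime overhead are ignored, so real
# usage will be somewhat higher than these numbers.

PHI2_PARAMS = 2_700_000_000  # approximate parameter count of Phi-2


def weight_memory_gb(num_params: int, bits_per_param: float) -> float:
    """Return the size of the raw weights in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    for label, bits in [("fp32", 32), ("fp16", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"{label:>5}: ~{weight_memory_gb(PHI2_PARAMS, bits):.2f} GB")
```

Under these assumptions, the weights take about 5.4 GB at 16-bit precision and about 1.35 GB at 4-bit precision, which helps explain why quantized builds of the model are practical on consumer hardware.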

Open Source:

Phi 2.7B is released under the MIT License, which allows:

- Commercial and non-commercial use

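- Modification, distribution, and private use, provided the copyright notice and license text are included with copies

The model is provided “as is”: the license offers no warranty, and the authors accept no liability.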

You can view the full license terms on the model's Hugging Face page.

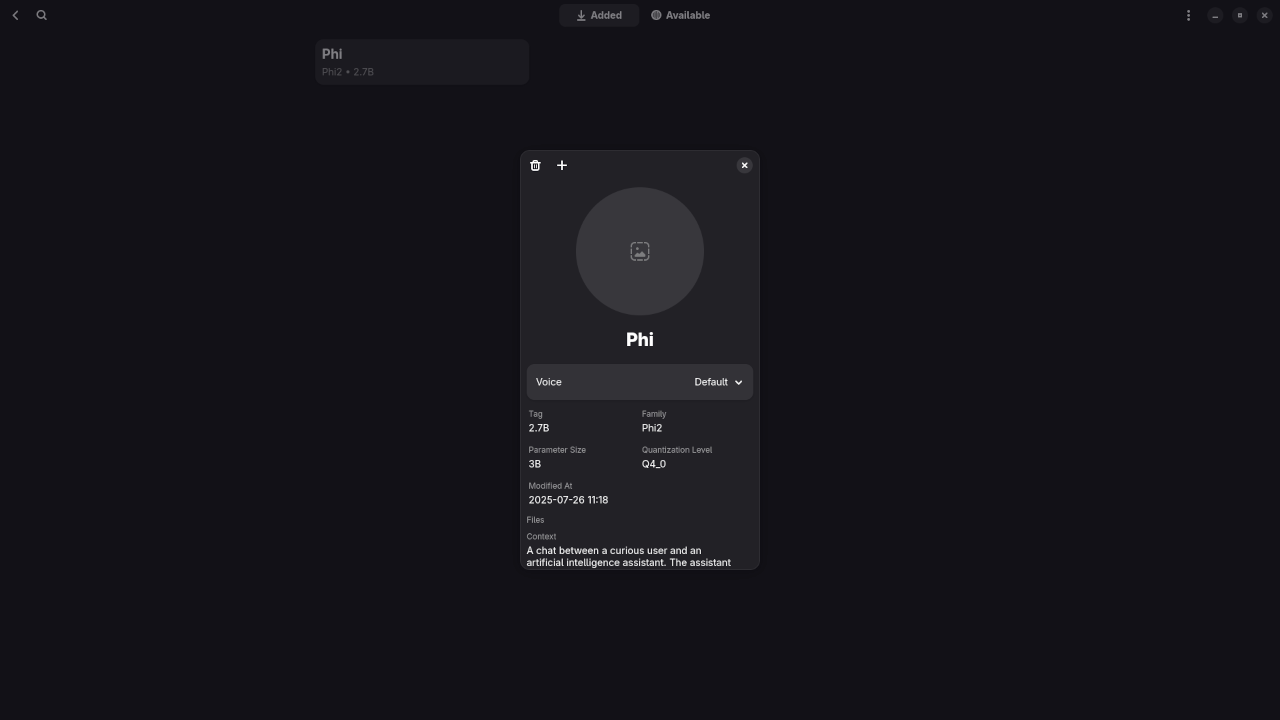

🔧 Installing and Running Phi 2.7B with Alpaca (Ollama)

The Alpaca client (not to be confused with Stanford's Alpaca model) is a graphical desktop front-end for Ollama: Ollama downloads and runs the models, and Alpaca provides a chat interface on top, which simplifies local LLM deployment.

Basic Steps:

- Download and install the Alpaca Ollama client:

Visit the Alpaca GitHub page and follow the installation instructions.

- Run Phi 2.7B:

Follow the README instructions in the repo to launch the model locally.
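Alpaca talks to an Ollama server behind the scenes, and the same model can also be queried from a script. With a standalone Ollama install, Phi-2 is published in the Ollama library as `phi`, so `ollama run phi` starts an interactive session in the terminal. The Python sketch below sends one prompt to Ollama's REST endpoint `/api/generate` using only the standard library. It assumes a server on Ollama's default address, `http://localhost:11434`, and a model pulled under the name `phi`. Alpaca's bundled Ollama instance may use a different port, so adjust `OLLAMA_URL` if needed. The helper functions are illustrative and not part of Alpaca.

```python
# Minimal client for a local Ollama server's /api/generate endpoint.
# Assumptions: an Ollama server is listening on http://localhost:11434 and the
# Phi-2 model has been pulled as "phi". Alpaca's bundled Ollama instance may
# listen on a different port; change OLLAMA_URL if so.
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"
MODEL = "phi"


def build_request(prompt: str, model: str = MODEL) -> dict:
    """Build a non-streaming generate request body."""
    return {"model": model, "prompt": prompt, "stream": False}


def parse_response(raw: bytes) -> str:
    """Extract the generated text from a non-streaming JSON reply."""
    return json.loads(raw)["response"]


def generate(prompt: str, base_url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """POST the prompt to Ollama and return the model's reply text."""
    body = json.dumps(build_request(prompt)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return parse_response(resp.read())


if __name__ == "__main__":
    print(generate("Who is the mayor of Toronto?"))
```

Setting `"stream": False` makes Ollama return a single JSON object with the whole reply in its `response` field, instead of a stream of partial chunks, which keeps the parsing to one line.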

Screenshots and Screencast

Results:

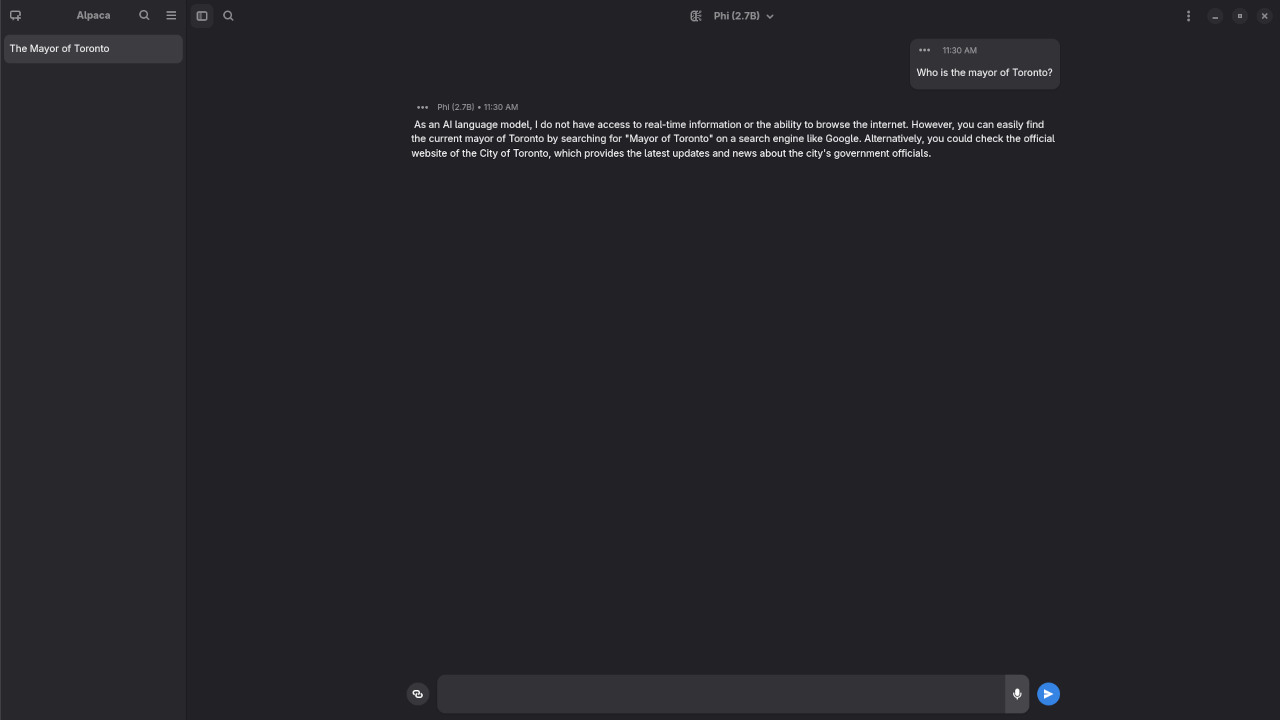

Who is the mayor of Toronto?

Produced an inaccurate, outdated answer instead of naming Olivia Chow as the mayor of Toronto.

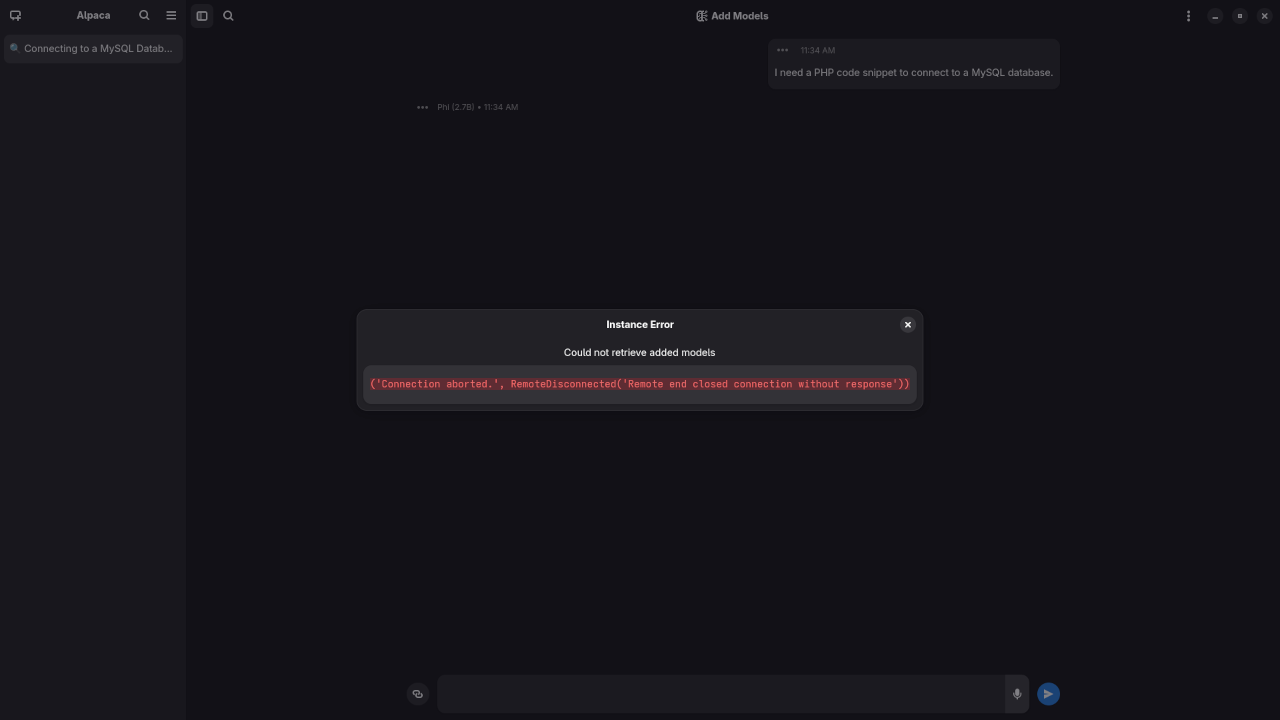

I need a PHP code snippet to connect to a MySQL database.

Crashed and could not produce a PHP code snippet to connect to a MySQL database.

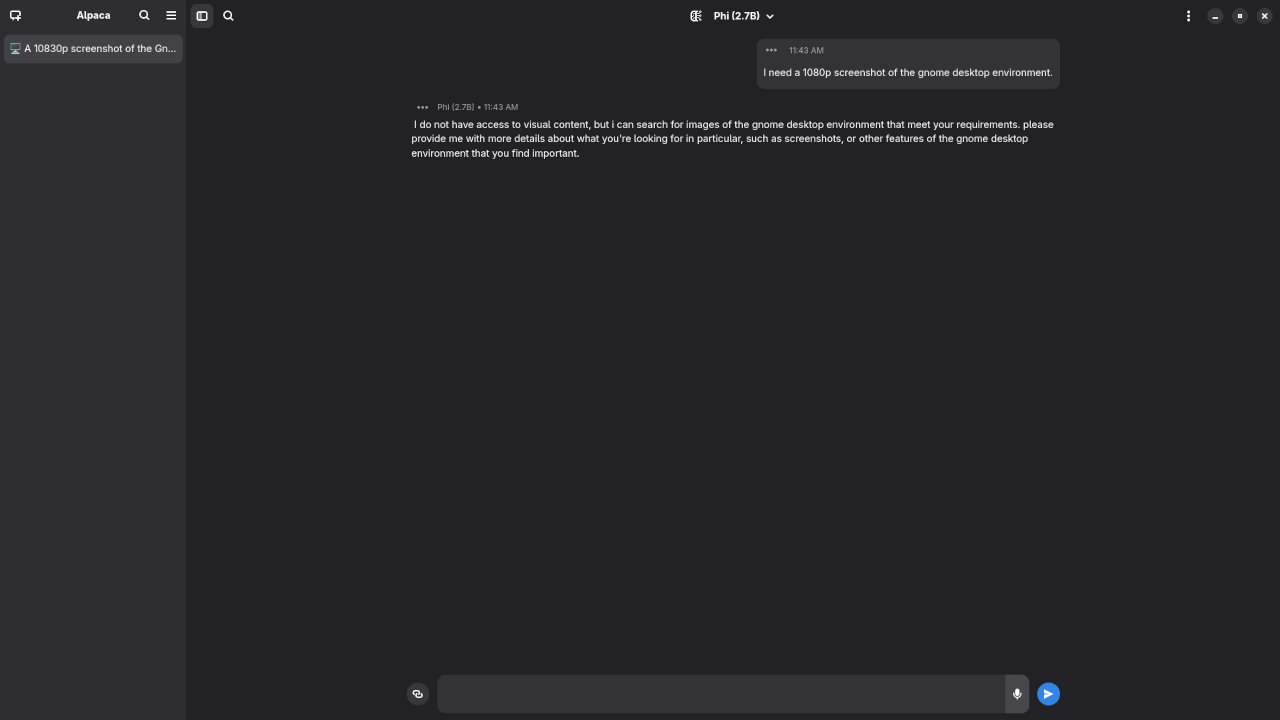

I need a 1080p screenshot of the gnome desktop environment.

Produced a good answer: it explained that, as a text-based AI, it cannot generate a 1080p screenshot of the GNOME desktop environment.

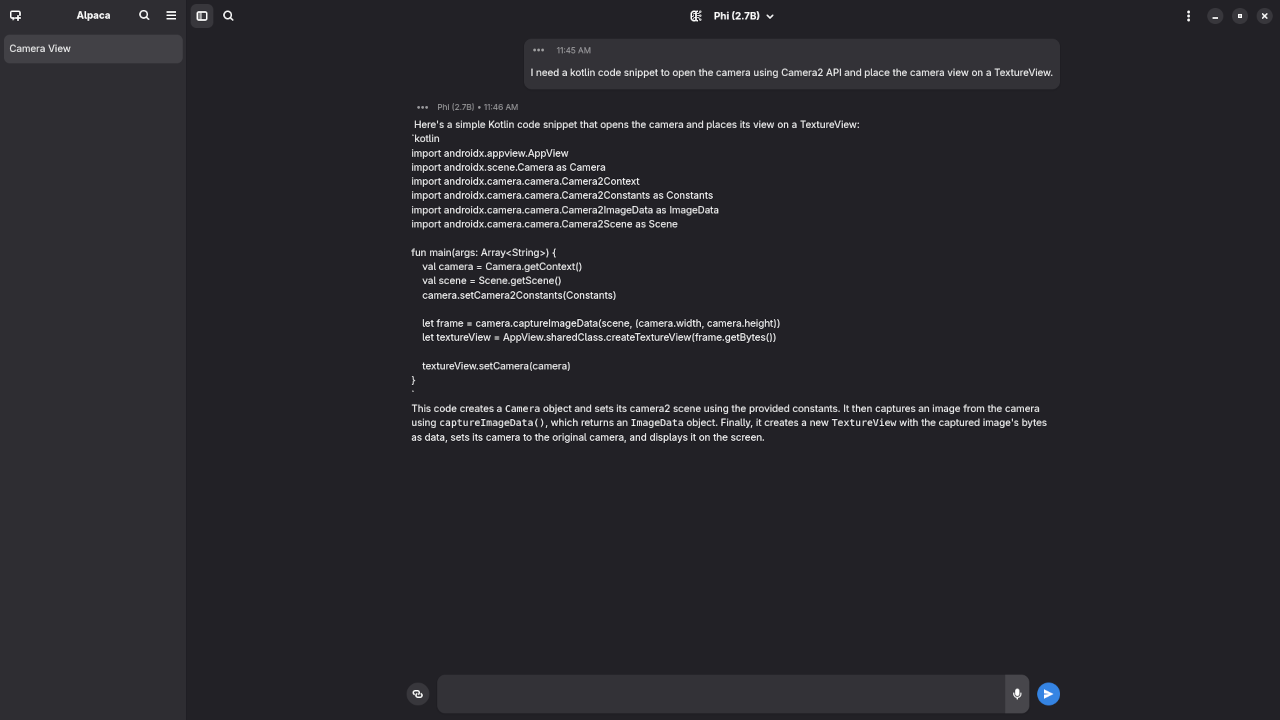

I need a kotlin code snippet to open the camera using Camera2 API and place the camera view on a TextureView.

Produced an incomplete Kotlin code snippet.

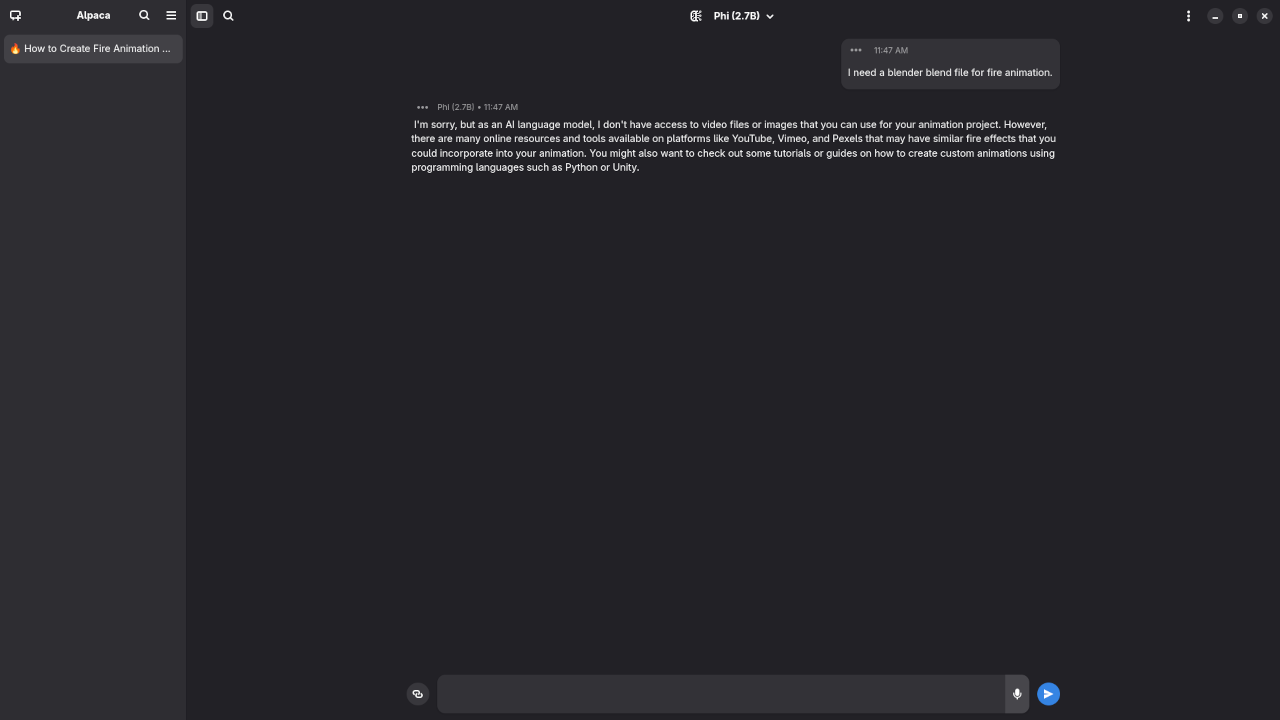

I need a blender blend file for fire animation.

Apologized that it could not access videos or images to use for the animation request.
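To repeat these tests, or rerun them later with a different model, a small harness can send the same five prompts and record each reply, any error, and how long it took. The Python sketch below is one way to do that, assuming you pass in a `generate(prompt)` function, for instance a wrapper around a local Ollama server. `run_prompts` and `Result` are hypothetical names. Catching exceptions per prompt means a failing request, such as a crashed model, is recorded instead of aborting the whole run.

```python
# Re-run the prompts from this post and record each reply, error and timing.
# Assumption: `generate` is any function that takes a prompt string and returns
# the model's reply, e.g. a wrapper around a local Ollama server.
import time
from dataclasses import dataclass
from typing import Callable, Optional

PROMPTS = [
    "Who is the mayor of Toronto?",
    "I need a PHP code snippet to connect to a MySQL database.",
    "I need a 1080p screenshot of the gnome desktop environment.",
    "I need a kotlin code snippet to open the camera using Camera2 API and "
    "place the camera view on a TextureView.",
    "I need a blender blend file for fire animation.",
]


@dataclass
class Result:
    prompt: str
    reply: Optional[str]
    error: Optional[str]
    seconds: float


def run_prompts(generate: Callable[[str], str], prompts: list[str] = PROMPTS) -> list[Result]:
    """Send each prompt to `generate`, continuing even if one of them fails."""
    results = []
    for prompt in prompts:
        start = time.perf_counter()
        try:
            reply, error = generate(prompt), None
        except Exception as exc:  # one failing prompt should not stop the run
            reply, error = None, f"{type(exc).__name__}: {exc}"
        results.append(Result(prompt, reply, error, time.perf_counter() - start))
    return results


if __name__ == "__main__":
    # Stand-in generator so the script runs without a model; swap in a real one.
    def echo(prompt: str) -> str:
        return f"(echo) {prompt}"

    for r in run_prompts(echo):
        status = "ERROR " + r.error if r.error else f"{len(r.reply)} chars"
        print(f"{r.seconds:6.2f}s  {status}  <- {r.prompt[:40]}")
```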

📚 Want to Learn Python?

If you’re just getting started with coding or want to improve your Python skills, check out my resources:

👨🏫 Need Help? Book a 1-on-1 Tutorial

I offer online Python tutoring to help you understand coding concepts or get unstuck with projects.

🛠️ Need Installation Help?

If you’d like help installing Phi 2.7B or migrating to a new machine, I offer setup services:

Let me know in the comments if you have questions or run into issues. Happy coding! 🐍💻

🚀 Recommended Resources

Disclosure: Some of the links above are referral links. I may earn a commission if you make a purchase at no extra cost to you.