Tag: ollama client


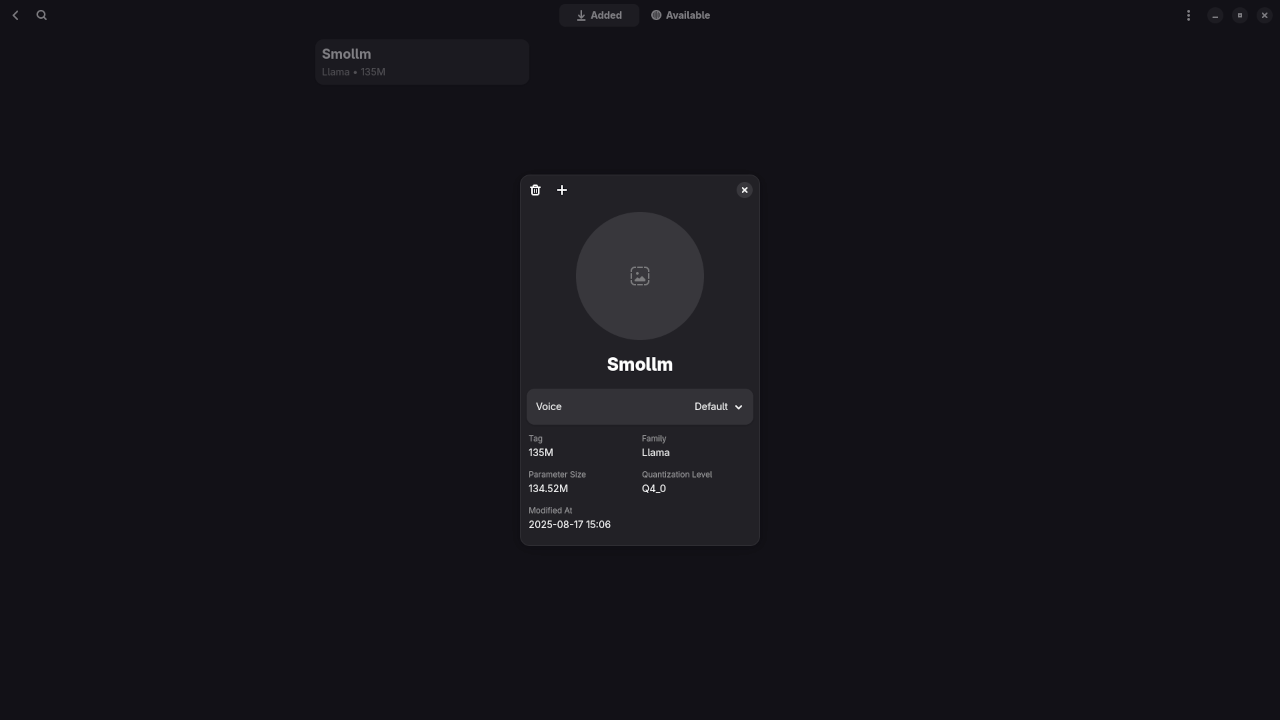

Review Generative AI Smollm 135M Model

Getting Started with Smollm 135M LLM on Alpaca Ollama Client (Open Source Guide)

If you’re curious about running large language models (LLMs) locally and efficiently, this guide walks you through setting up Smollm 135M with the Alpaca Ollama client.



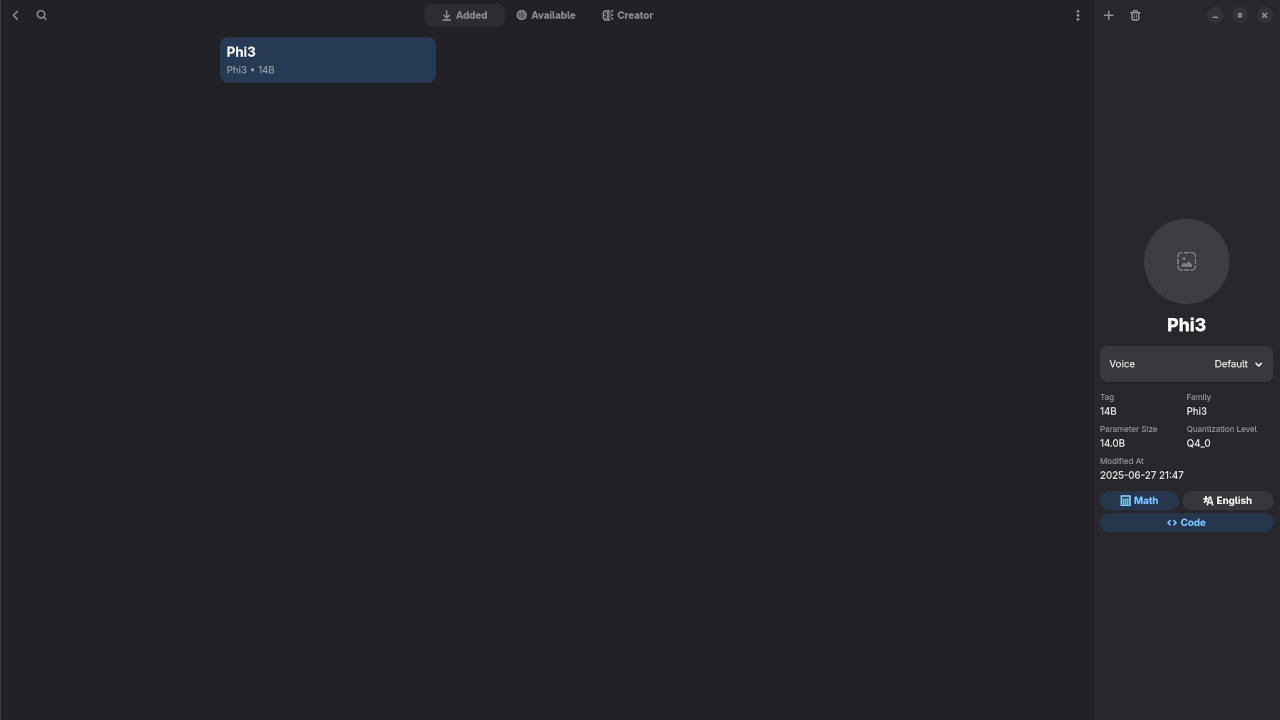

Review Generative AI Phi3 14B Model

🔍 Reviewing Phi-3 LLM on Linux Using Alpaca & Ollama

In this article, I’ll walk you through my experience using Phi-3, a lightweight large language model (LLM) developed by Microsoft, running locally on Linux via Alpaca, a user-friendly front end for Ollama that is packaged as a Flatpak.
