If you’re looking to explore the exciting world of Large Language Models (LLMs) and containerization, you’re in the right place! In this post, we’ll walk you through how to run TinyLlama 1.1B, a lightweight yet powerful conversational AI model, in a Podman Compose container. This guide is perfect for beginners who want to experiment with AI technologies, especially those familiar with containerization but new to LLMs.

TinyLlama 1.1B is an open-source language model from the TinyLlama project, distributed through Hugging Face. With only about 1.1 billion parameters, it can generate conversational text responses while staying lightweight enough to run in resource-constrained environments. Running it in a container managed by Podman Compose isolates it from the rest of your system, so your setup is reproducible and easy to manage.
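To put "lightweight" in numbers, a rough rule of thumb is that the weights alone need about (number of parameters) × (bytes per parameter) of memory. The sketch below applies that rule to roughly 1.1 billion parameters at a few common precisions. It is an estimate under stated assumptions, not a measurement: it ignores the KV cache, activations, and runtime overhead, and which precisions you can actually use depends on the inference runtime inside your container.

```python
# Rough estimate of the memory needed to hold model weights only.
# Assumptions: ~1.1 billion parameters (taken from the model's name);
# KV cache, activations, and runtime/framework overhead are NOT included.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16/bf16": 2.0,
    "int8": 1.0,
    "4-bit": 0.5,
}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Return the size of the raw weights in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for precision, size in BYTES_PER_PARAM.items():
        print(f"{precision:>10}: ~{weight_memory_gb(1.1e9, size):.2f} GB")
```

At 16-bit precision this works out to about 2.2 GB for the weights. For comparison, the same formula gives about 14 GB for a 7-billion-parameter model at 16-bit precision.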

Why Use Podman Compose for TinyLlama?

Containerization is a convenient way to run applications like TinyLlama 1.1B that depend on a specific software stack. Podman is a daemonless container engine that runs containers without root privileges by default, which makes it an excellent alternative to Docker for developers and hobbyists alike. By combining Podman with Compose, you can define your containers, ports, and volumes in a single YAML file, ensuring an easy and consistent setup.

Installation: How to Set Up TinyLlama 1.1B in a Podman Compose Container

Step 1: Install Podman and Podman Compose

Before running TinyLlama, make sure Podman and Podman Compose are installed on your system. On a Debian-based Linux distribution, you can install both with the following commands:

```bash
sudo apt update
sudo apt install -y podman podman-compose
```

If you’re using macOS or Windows, follow the installation instructions for your platform on the Podman website.
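After installing, it’s worth confirming that both tools are on your PATH before moving on. The following Python sketch (`tool_versions` is just an illustrative helper name) looks each command up with `shutil.which` and, if found, runs it with `--version`. It assumes your installed podman-compose release accepts `--version`, which recent releases do.

```python
import shutil
import subprocess


def tool_versions(tools=("podman", "podman-compose")):
    """Return {tool: version text, or None if the tool is not on PATH}."""
    results = {}
    for tool in tools:
        path = shutil.which(tool)
        if path is None:
            results[tool] = None  # not installed, or not on PATH
            continue
        # Assumption: the tool prints its version when called with --version.
        proc = subprocess.run(
            [path, "--version"], capture_output=True, text=True, timeout=30
        )
        output = (proc.stdout or proc.stderr).strip()
        results[tool] = output or "unknown version"
    return results


if __name__ == "__main__":
    for tool, version in tool_versions().items():
        print(f"{tool}: {version if version else 'NOT FOUND'}")
```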

Step 2: Create a Podman Compose Configuration File

Once Podman is installed, you need to define your environment using a Podman Compose file. This file will specify the configuration for the TinyLlama container.

Create a folder for your project and inside it, create a file named docker-compose.yml (Podman Compose is compatible with Docker Compose files).

Here is an example docker-compose.yml for running TinyLlama:

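```yaml
version: '3.8'

services:
  tinyllama:
    # Image name as given in this guide; confirm it exists in your registry before pulling.
    image: huggingface/tiny-llama:latest
    container_name: tinyllama
    ports:
      - "5000:5000"            # host port:container port
    environment:
      - MODEL_NAME=TinyLlama-1.1B-Chat-v1.0
    volumes:
      - ./model:/app/model     # host folder:path inside the container
    restart: unless-stopped
```

This configuration pulls the image named in the image field, runs it in a container called tinyllama, and publishes port 5000 so you can interact with the model from your machine. Because the image name has no registry prefix, Podman resolves it through the unqualified-search registries configured in registries.conf; depending on that configuration, it may prompt you to pick a registry or fail to resolve the name. You can customize MODEL_NAME if you’re using a different model, or adjust any other settings to suit your setup.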
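In the short syntax used above, both ports and volumes entries read left to right as host side, then container side: "5000:5000" publishes container port 5000 on host port 5000, and ./model:/app/model mounts your project’s model folder at /app/model inside the container. The simplified Python sketch below splits such entries to make that explicit. It is only an illustration: `parse_port` and `parse_volume` are hypothetical helpers, not part of Podman Compose, and they ignore port ranges, protocol suffixes such as /udp, and IPv6 addresses.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class PortMapping:
    host_port: Optional[int]  # None: the engine assigns a random free host port
    container_port: int
    host_ip: Optional[str] = None


def parse_port(spec: str) -> PortMapping:
    """Parse a simplified short-syntax port: "C", "H:C" or "IP:H:C"."""
    parts = spec.split(":")
    if len(parts) == 1:
        return PortMapping(None, int(parts[0]))
    if len(parts) == 2:
        return PortMapping(int(parts[0]), int(parts[1]))
    if len(parts) == 3:
        return PortMapping(int(parts[1]), int(parts[2]), host_ip=parts[0])
    raise ValueError(f"unsupported port spec: {spec!r}")


def parse_volume(spec: str) -> Tuple[str, str, str]:
    """Split a short-syntax bind mount "SOURCE:TARGET[:MODE]" into its parts."""
    parts = spec.split(":")
    if len(parts) not in (2, 3):
        raise ValueError(f"unsupported volume spec: {spec!r}")
    mode = parts[2] if len(parts) == 3 else "rw"  # read-write unless stated otherwise
    return parts[0], parts[1], mode


if __name__ == "__main__":
    print(parse_port("5000:5000"))  # PortMapping(host_port=5000, container_port=5000, host_ip=None)
    print(parse_volume("./model:/app/model"))  # ('./model', '/app/model', 'rw')
```

For example, changing the entry to "8080:5000" would keep the service listening on port 5000 inside the container while exposing it at http://localhost:8080 on your machine.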

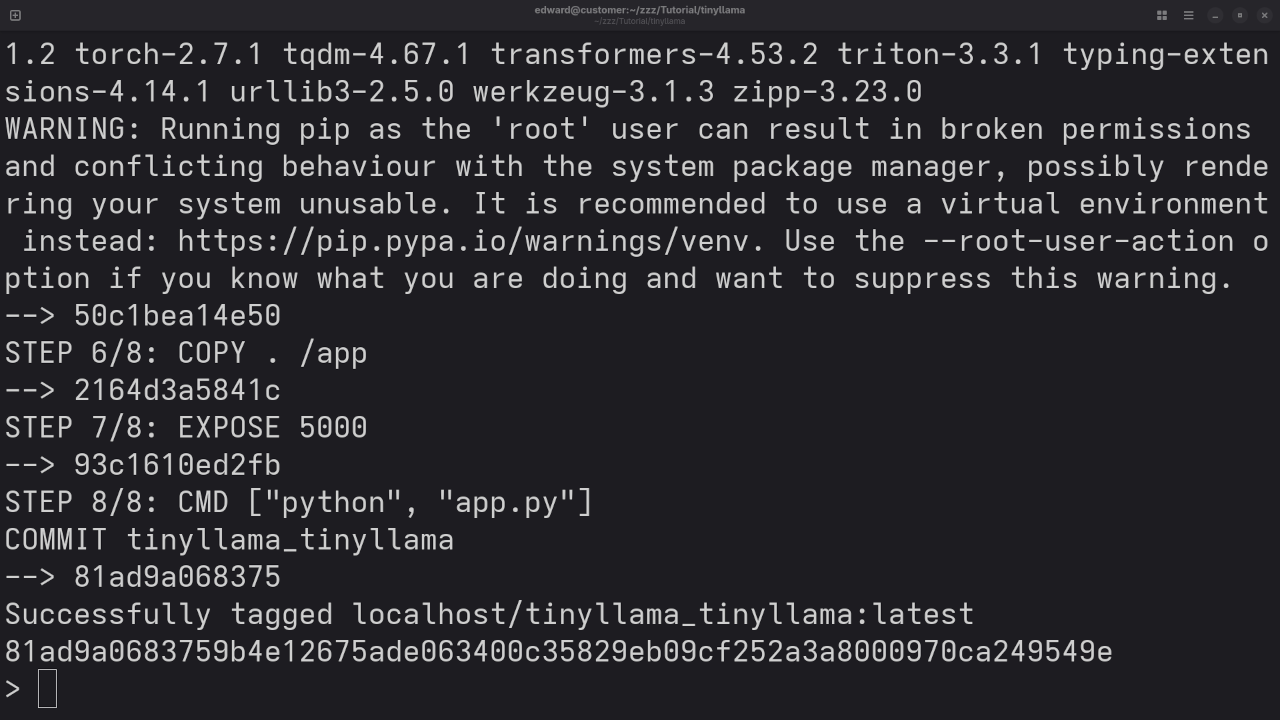

Step 3: Running the Container

Now, you’re ready to start TinyLlama using Podman Compose.

- Open your terminal and navigate to the folder where your docker-compose.yml is located.
- Run the following command to start the container:

```bash
podman-compose up
```

This downloads the required image, creates the container, and starts the TinyLlama model (add -d to run it in the background). The model is then accessible at http://localhost:5000, and you can query it over HTTP with curl or any other HTTP client.
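The first start can take a while because the image has to be downloaded. If you want a script to wait until the published port accepts connections before sending queries, here is a minimal standard-library sketch. `wait_for_port` is a hypothetical helper, and an open port only shows that something is accepting TCP connections, not that the model has finished loading.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 120.0, interval: float = 1.0) -> bool:
    """Poll until a TCP connection to host:port succeeds.

    Returns True as soon as a connection is accepted, or False once `timeout`
    seconds have passed. An open port does not prove the model is loaded.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Open and immediately close a connection; success means something is listening.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, `wait_for_port("localhost", 5000)` returns True once the port opens, or False after two minutes. Watching the container’s output with `podman logs -f tinyllama` (using the container_name from the compose file) is a more direct way to see when the model is ready.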

Step 4: Access TinyLlama

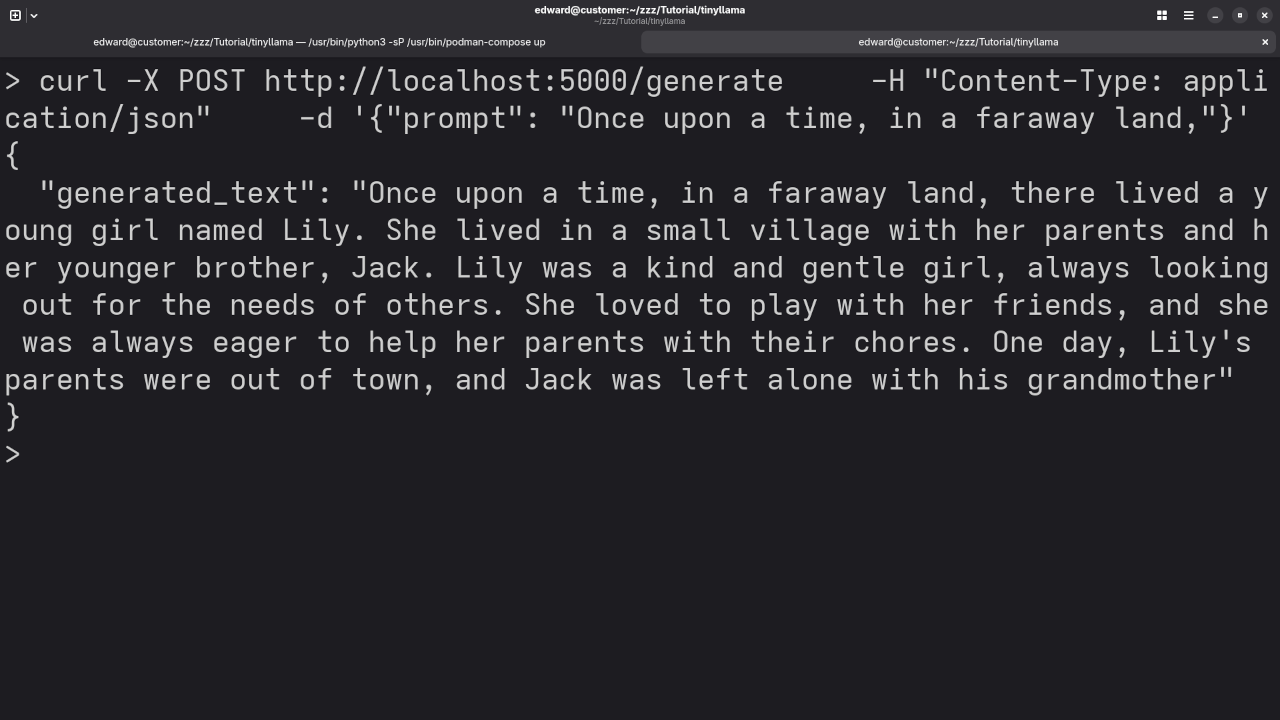

Once the container is running, you can interact with TinyLlama by sending POST requests to the exposed endpoint (http://localhost:5000). You can use curl or a tool like Postman to send your queries.
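If you prefer to send queries from a Python script, the sketch below does the same thing using only the standard library. It is written under assumptions: `ask_tinyllama` is a hypothetical helper name, and the /answer path plus the question and answer JSON fields are taken from the curl example in this step rather than from any documentation for the image.

```python
import json
import urllib.request


def ask_tinyllama(question: str, base_url: str = "http://localhost:5000", timeout: float = 120.0) -> dict:
    """Send {"question": question} to <base_url>/answer and return the parsed JSON reply.

    Assumption: the path and field names mirror this guide's curl example;
    change them if the image you run exposes a different API.
    """
    body = json.dumps({"question": question}).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url.rstrip('/')}/answer",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Raises urllib.error.HTTPError on 4xx/5xx and urllib.error.URLError if unreachable.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, `ask_tinyllama("What is the capital of France?").get("answer")` sends the same request as the curl command. Using urllib keeps the sketch dependency-free; the requests library would work just as well if you already have it installed.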

Example Usage of TinyLlama API:

Here’s an example of using curl to send a question to the /answer endpoint:

```bash
curl -X POST http://localhost:5000/answer \
  -H "Content-Type: application/json" \
  -d '{"question": "What is the capital of France?"}'
```

You should receive a response like this:

```json
{
  "answer": "Paris"
}
```

Screenshots & Live Demonstration

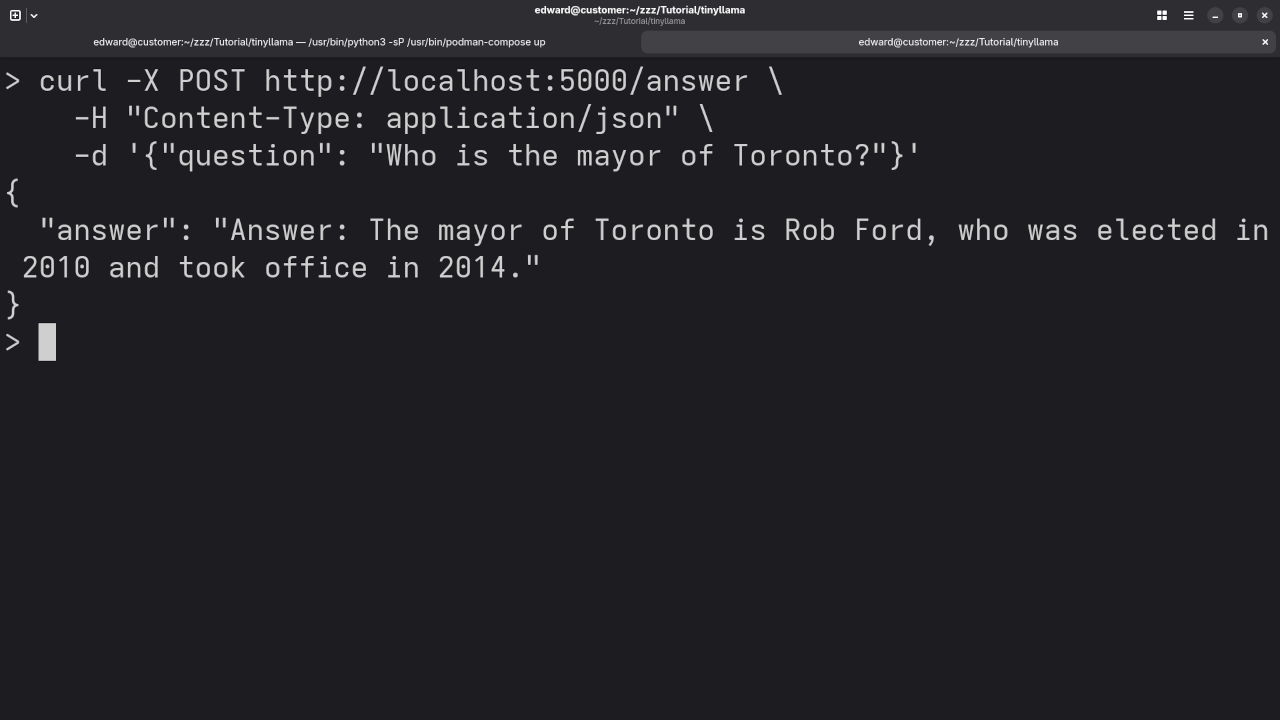

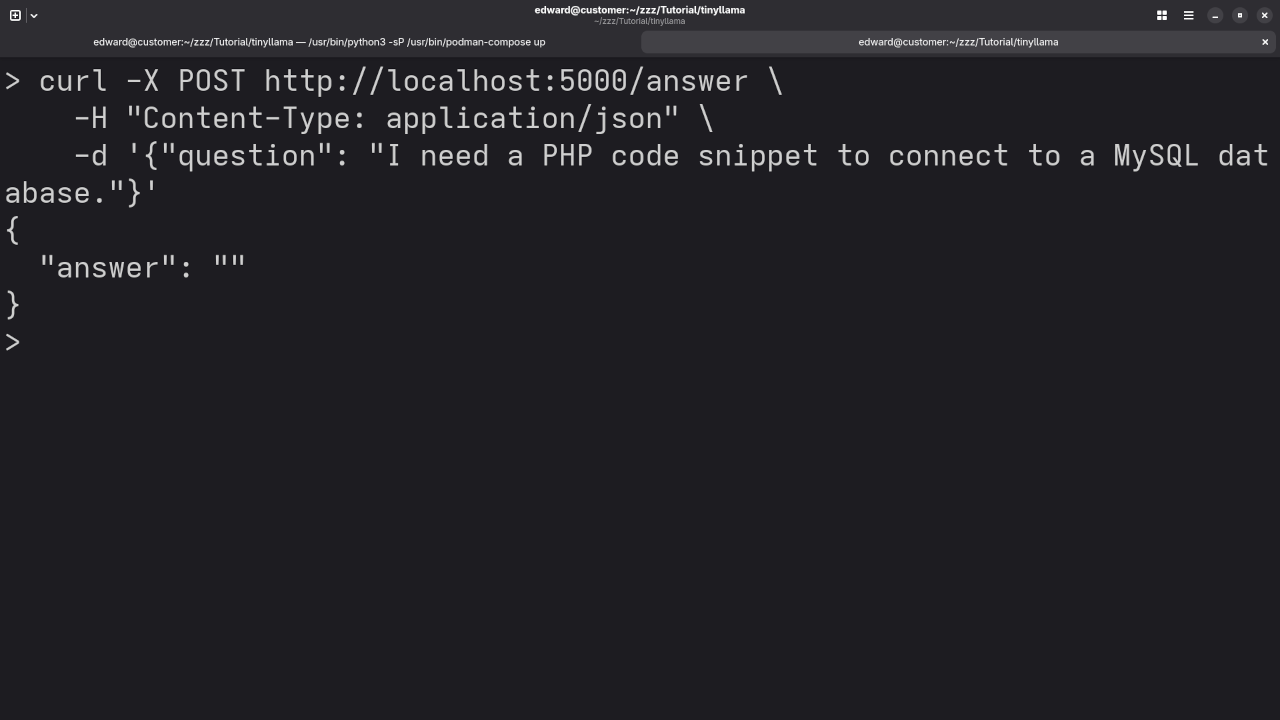

The screenshots above show the installation process and the terminal output when running Podman Compose. They give you a visual guide to the setup and can help you troubleshoot potential issues.

Additionally, I’ve embedded a YouTube screencast below, where I demonstrate the installation and usage of TinyLlama in a Podman Compose container.

Results:

Who is the mayor of Toronto?

Produced inaccurate outdated answer to Olivia Chow as the mayor of Toronto.

I need a PHP code snippet to connect to a MySQL database.

Did not provide an answer.

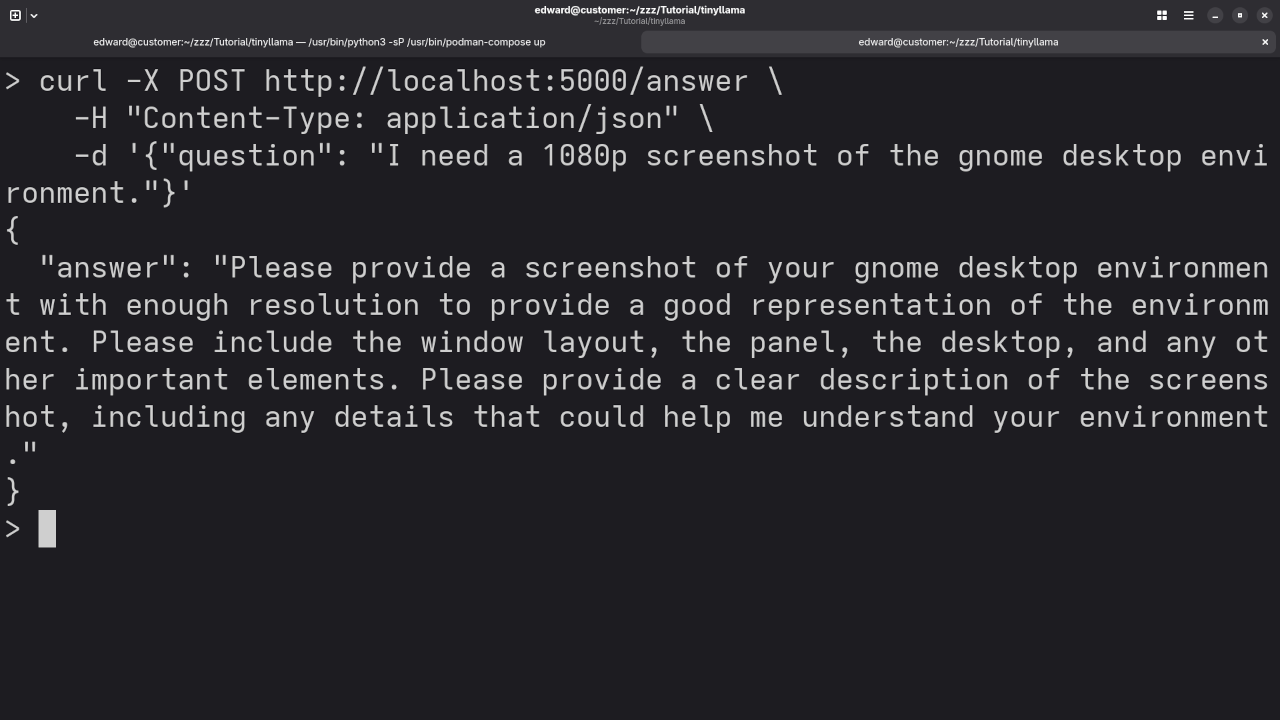

I need a 1080p screenshot of the gnome desktop environment.

Requested a screenshot of the gnome desktop environment.

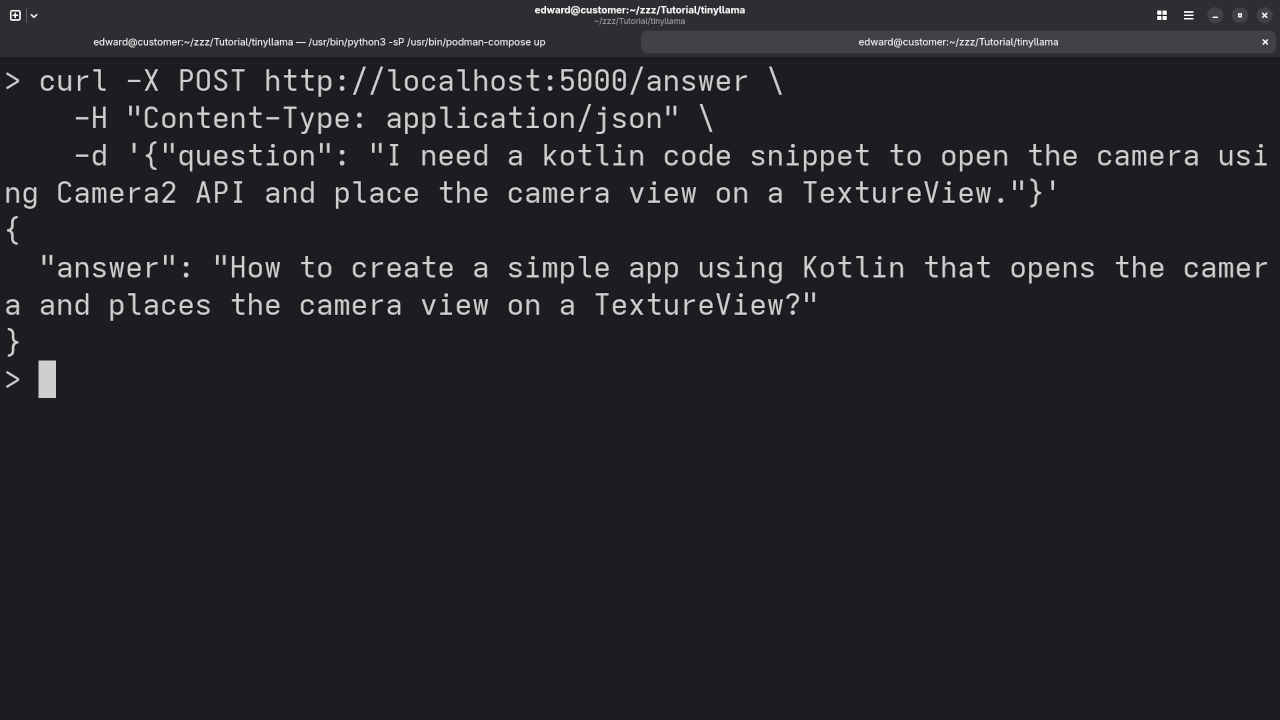

I need a kotlin code snippet to open the camera using Camera2 API and place the camera view on a TextureView.

Asked a question about the request.

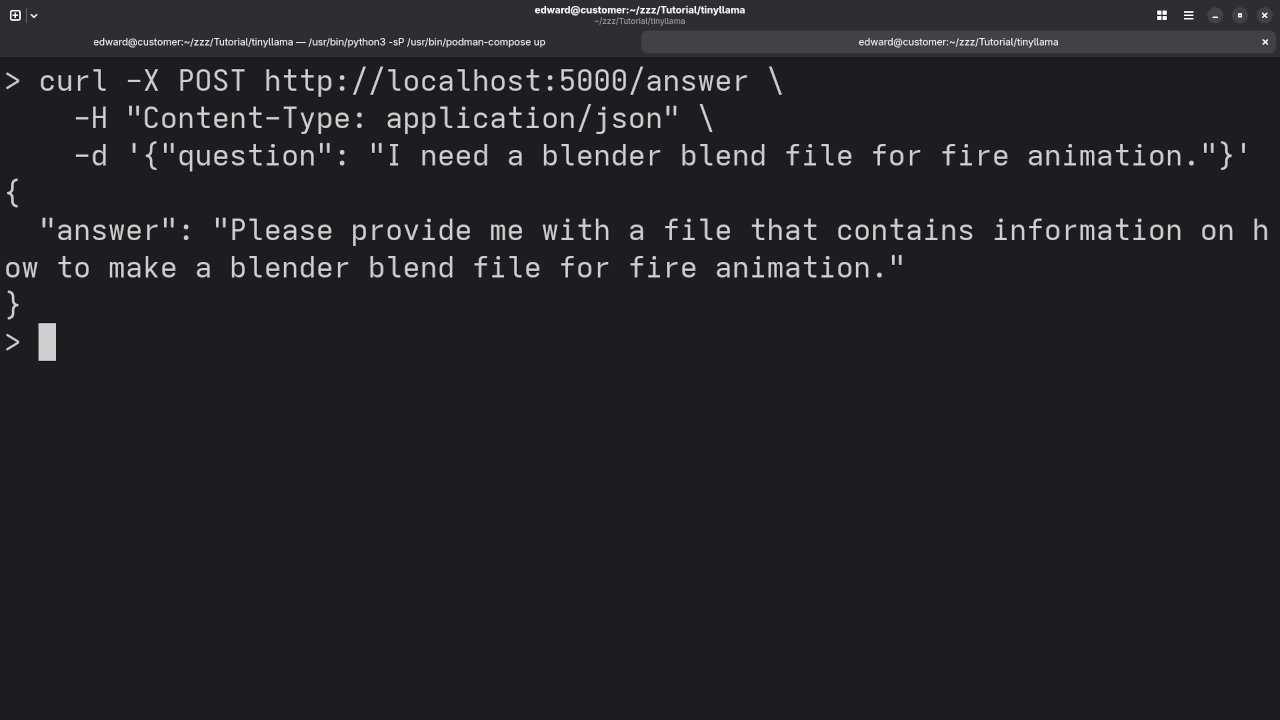

I need a blender blend file for fire animation.

Requested a file containing information on how to make the request.
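If you want to repeat these tests after changing the model or its settings, a small script can send the same prompts and save the replies for comparison. The sketch below is one way to do that under stated assumptions: `run_prompts` is a hypothetical helper, and `ask` stands for any function you supply that sends one prompt to your TinyLlama container and returns the reply text (for example, a wrapper around the /answer request shown earlier).

```python
import csv
from typing import Callable, Iterable, List, Tuple

# The prompts from the Results section of this post.
PROMPTS = [
    "Who is the mayor of Toronto?",
    "I need a PHP code snippet to connect to a MySQL database.",
    "I need a 1080p screenshot of the gnome desktop environment.",
    (
        "I need a kotlin code snippet to open the camera using Camera2 API "
        "and place the camera view on a TextureView."
    ),
    "I need a blender blend file for fire animation.",
]


def run_prompts(ask: Callable[[str], str], prompts: Iterable[str], csv_path: str) -> List[Tuple[str, str]]:
    """Send each prompt through `ask`, save (prompt, reply) rows to CSV, and return them."""
    rows: List[Tuple[str, str]] = []
    for prompt in prompts:
        try:
            reply = ask(prompt)
        except Exception as exc:  # record the failure and continue with the next prompt
            reply = f"ERROR: {exc}"
        rows.append((prompt, reply))
    with open(csv_path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        writer.writerow(["prompt", "reply"])
        writer.writerows(rows)
    return rows
```

Catching exceptions per prompt means one failed request is recorded as an ERROR row instead of aborting the whole run.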

Why Open-Source Matters

TinyLlama 1.1B is not only a compact AI model, but it’s also open-source, meaning anyone can use, modify, and contribute to the project. The model weights are available on Hugging Face and the training code on GitHub, enabling developers and AI enthusiasts to adapt the model to their needs.

Open-source AI models like TinyLlama encourage innovation and democratize access to cutting-edge technology. It’s a fantastic resource for developers, businesses, and hobbyists looking to explore conversational AI.

Learn More and Get Help

If you’re new to Python and containerization or need further assistance setting up TinyLlama, feel free to check out my resources:

- Book: Learning Python — A guide for Python beginners that will help you understand the basics and advance your coding skills.

- Course: Learning Python Course — A detailed course that teaches Python programming from scratch, perfect for beginners.

- One-on-One Python Tutorials: If you need personalized support or have questions, I offer one-on-one online Python tutorials. Contact me here.

- TinyLlama Services: I can also install or migrate TinyLlama for you. Reach out for services.

Conclusion

TinyLlama 1.1B’s small size makes it a great choice for anyone interested in experimenting with LLMs in containers. By using Podman Compose, you can manage your AI projects in a flexible, isolated, and reproducible environment. I hope this guide has helped you get started. Feel free to reach out if you need further assistance!

Disclosure: Some of the links above are referral (affiliate) links. I may earn a commission if you purchase through them - at no extra cost to you.