How to Run OpenAI Whisper Tiny English Model with Podman Compose (Beginner Guide)

💡 Introduction

OpenAI Whisper is a powerful open-source speech recognition system that can transcribe spoken audio into text. It supports multiple models ranging from small and fast to large and highly accurate.

In this post, you’ll learn how to run the “tiny.en” model (optimized for English) in a Podman Compose container, and integrate it with a simple web interface that records audio and returns the transcription.

🛣 Why Use Podman Compose?

Podman is a daemonless container engine that provides a Docker-compatible experience with better security. Podman Compose helps orchestrate containers the same way Docker Compose does—but without requiring root access.

🔬 What is Whisper Tiny English?

- ✔ Open-source under MIT license

- ✔ Very small (~75MB)

- ✔ Fast inference even on modest CPUs

- ✔ Trained specifically for English

- ✔ Great for quick demos and lightweight deployments

🛠 Example Setup: Voice Recording + Whisper Transcription (Tiny English)

This example creates a container that uses Whisper’s tiny English model to transcribe speech from a browser-based recorder.

✅ Prerequisites:

- Podman and Podman Compose installed

- Python 3.9+ installed

- Git installed

1. Create a Dockerfile:

FROM python:3.9

RUN pip install --no-cache-dir openai-whisper flask \

&& python -c "import whisper; whisper.load_model('tiny.en')"

WORKDIR /app

COPY . /app

CMD ["python", "app.py"]

2. Create podman-compose.yml:

version: "3"

services:

whisper:

build: .

volumes:

- .:/app

- whisper-model-cache:/root/.cache/whisper

ports:

- "5000:5000"

volumes:

whisper-model-cache:

3. Create the transcription server (app.py):

from flask import Flask, request, jsonify

import whisper

import tempfile

app = Flask(__name__)

model = whisper.load_model("tiny.en") # English only

@app.route("/transcribe", methods=["POST"])

def transcribe():

file = request.files["audio"]

with tempfile.NamedTemporaryFile(delete=False, suffix=".mp3") as tmp:

file.save(tmp.name)

result = model.transcribe(tmp.name)

return jsonify(result)

if __name__ == "__main__":

app.run(host="0.0.0.0", port=5000)

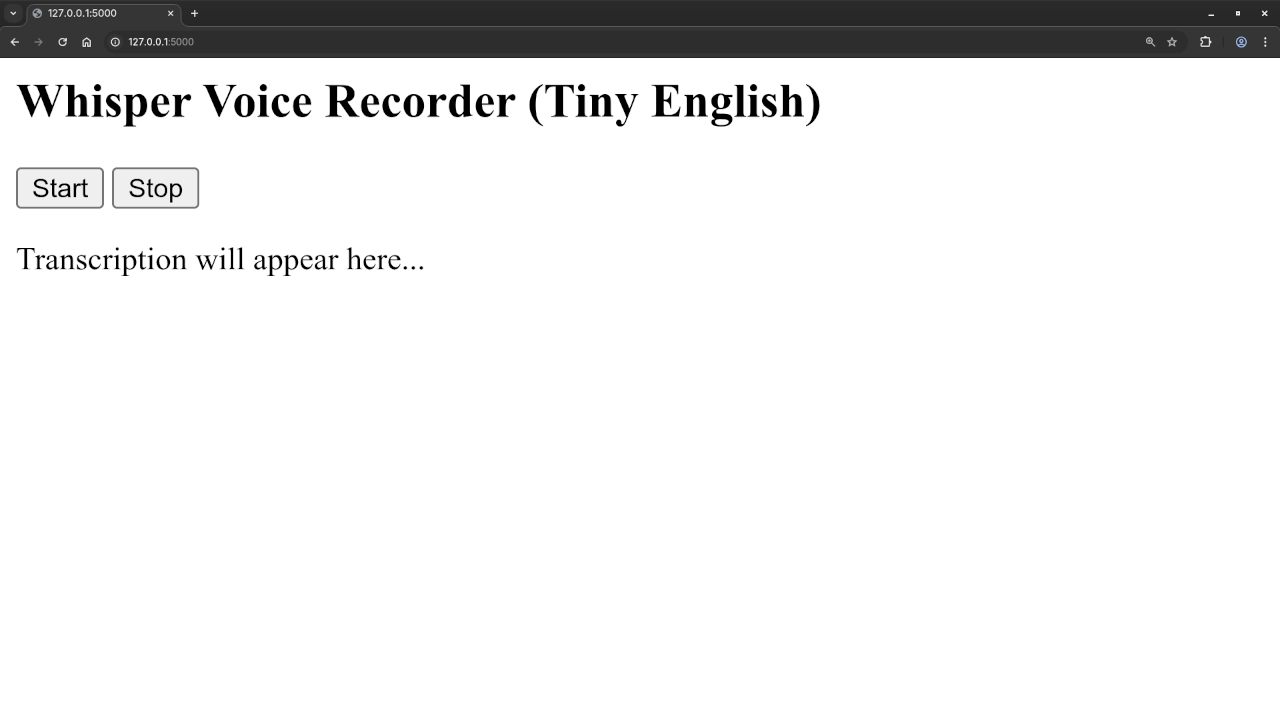

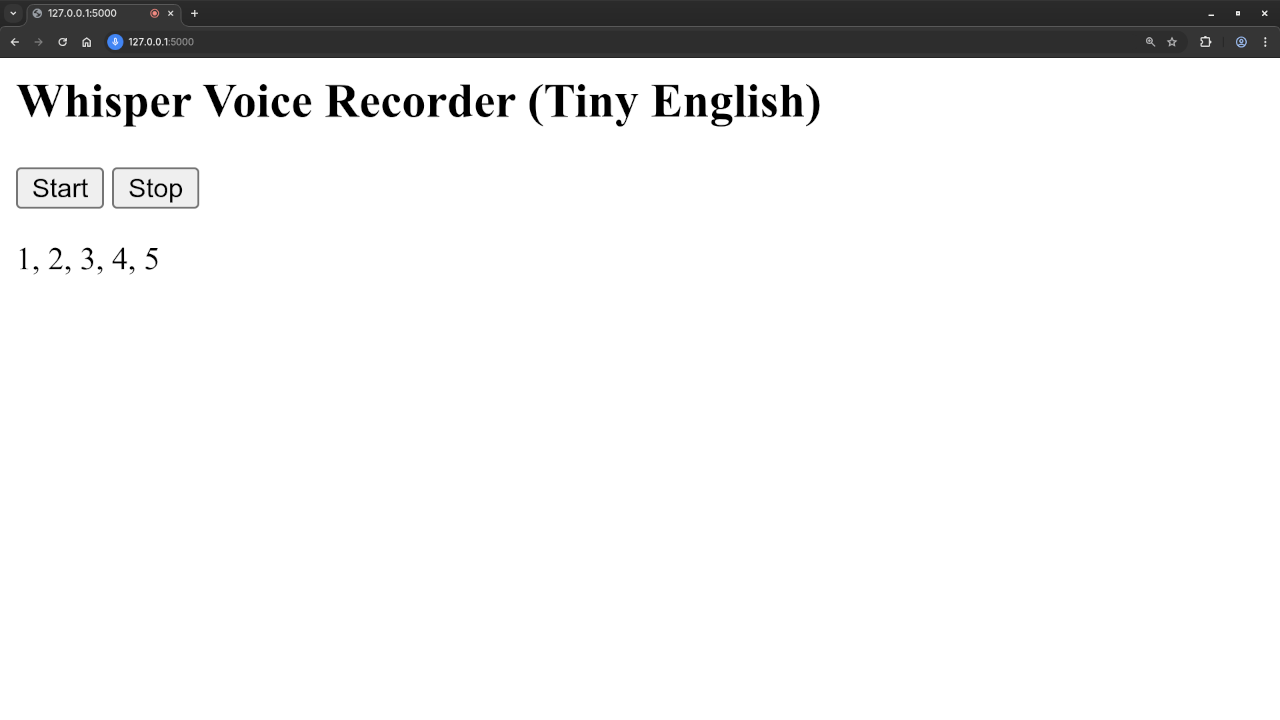

4. Create the HTML interface:

<h2>Whisper Voice Recorder (Tiny English)</h2>

<button onclick="startRecording()">Start</button>

<button onclick="stopRecording()">Stop</button>

<p id="transcription">Transcription will appear here...</p>

let mediaRecorder;

let audioChunks = [];

function startRecording() {

navigator.mediaDevices.getUserMedia({ audio: true }).then(stream => {

mediaRecorder = new MediaRecorder(stream);

mediaRecorder.start();

mediaRecorder.ondataavailable = event => {

audioChunks.push(event.data);

};

mediaRecorder.onstop = () => {

const audioBlob = new Blob(audioChunks, { type: 'audio/mp3' });

const formData = new FormData();

formData.append("audio", audioBlob, "recording.mp3");

fetch("/transcribe", {

method: "POST",

body: formData

})

.then(res => res.json())

.then(data => {

document.getElementById("transcription").innerText = data.text;

});

audioChunks = [];

};

});

}

function stopRecording() {

mediaRecorder.stop();

}

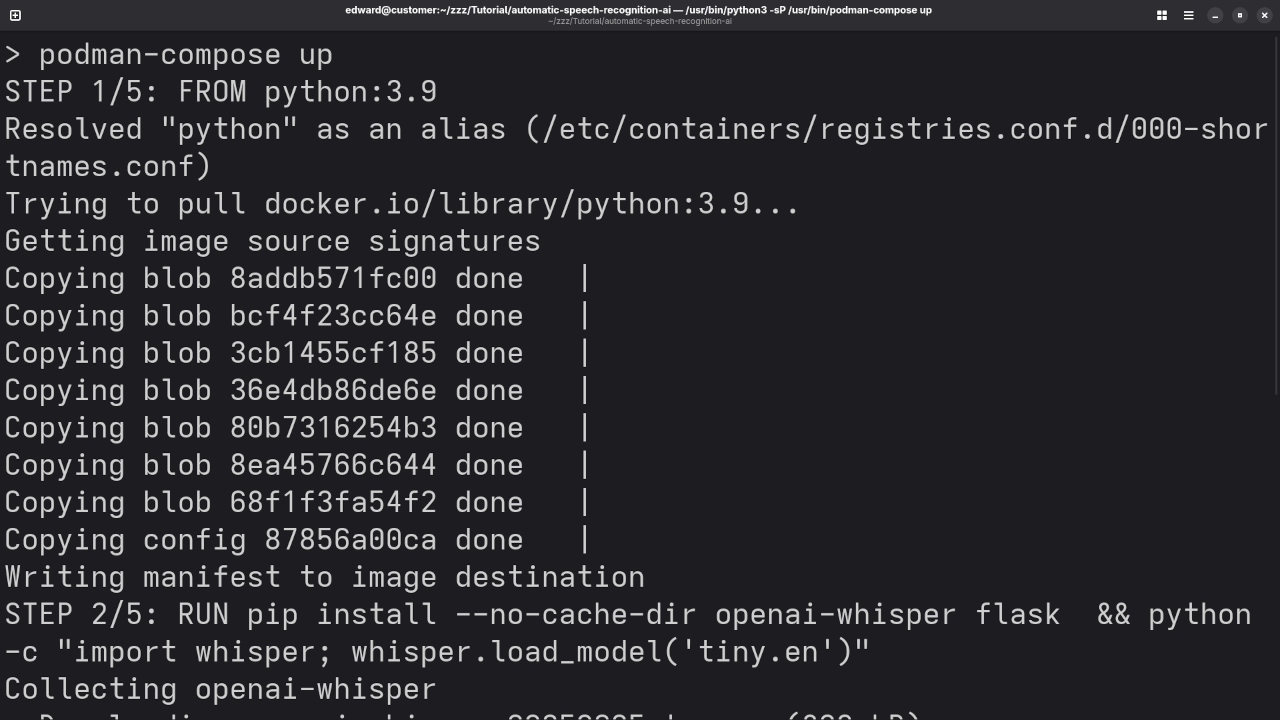

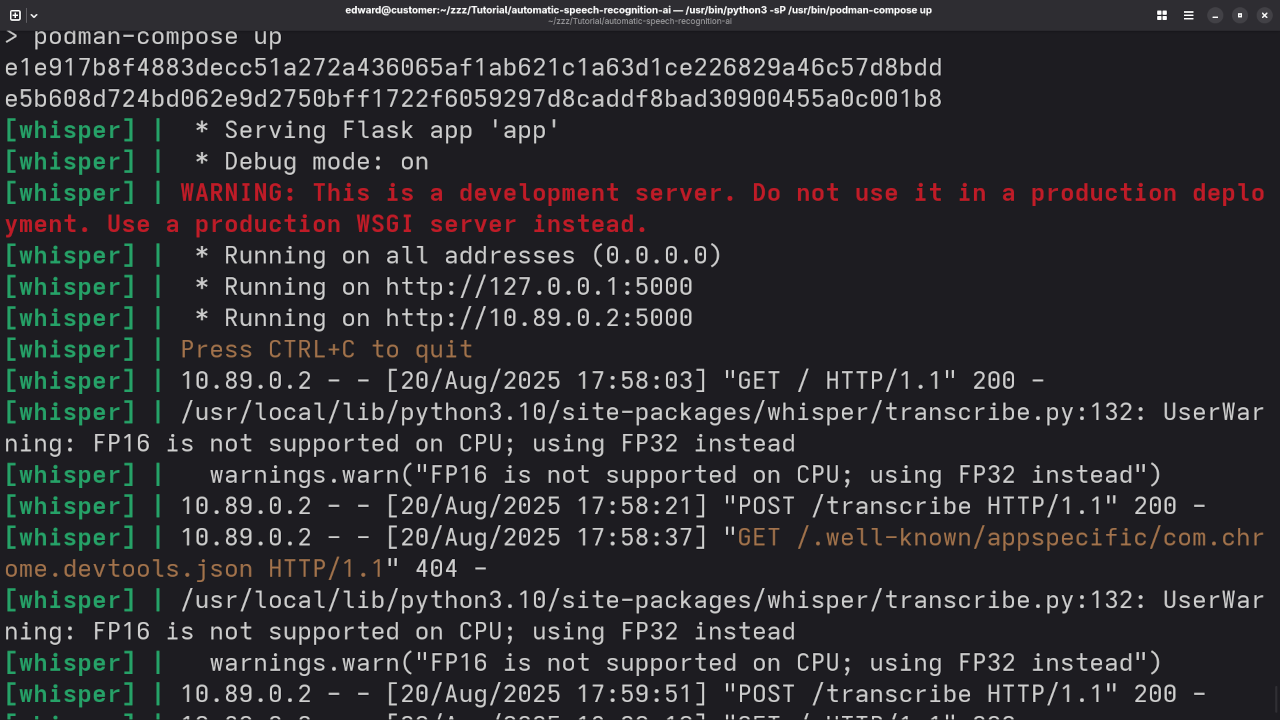

5. Run it:

podman-compose upVisit http://localhost:5000 in your browser to test audio recording and transcription.

📷 Screenshots & Screencast

📈 Before vs After Training

🔴 Before Custom Training:

- Good with general English

- Less accurate with accents or technical vocabulary

🟢 After Fine-Tuning:

- Improved accuracy for domain-specific terms

- Better noise tolerance

- Custom language and phrase detection

📚 Related Learning Resources

- Book: Learning Python

- Course: Learning Python Course

👨🎓 Need Help?

🚀 Recommended Resources

Disclosure: Some of the links above are referral links. I may earn a commission if you make a purchase at no extra cost to you.